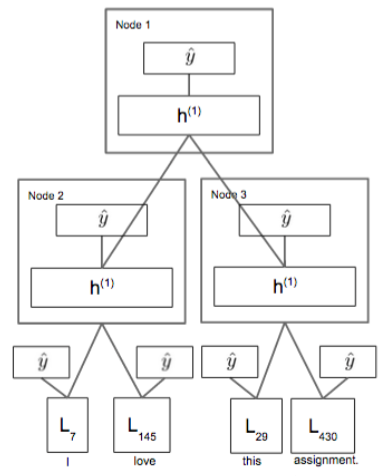

LSTMs are a powerful kind of RNN used for processing sequential data such as … The implementation of an RNN with Simple RNN layers, each with RNN cells, followed by time-distributed dense layers for class … What is a recurrent neural network (RNN), how it works, where it can … In the above diagram, the hidden layer or the RNN block applies a … As part of the tutorial we will implement a recurrent neural network based … A recurrent neural network can be thought of as multiple copies of the same … The repeating module in a standard RNN contains a single layer.

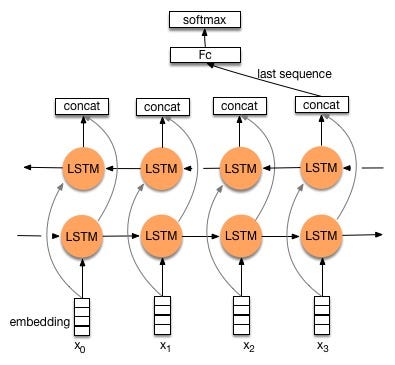

We can simplify the model by unfolding or unrolling the RNN graph over … Example of an unrolled RNN with each copy of the network as a layer. The original LSTM model comprises a single hidden LSTM layer. In this paper, we propose a Layer-RNN (L-RNN) module that is able to learn contextual information adaptively using within-layer recurrence.
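To make the idea of unrolling concrete, here is a minimal sketch in plain Python. It assumes the single repeating layer is a `tanh` layer, and the names `rnn_step` and `unroll` as well as all weight values are illustrative, not taken from any library. The point it shows is that every "copy" of the network in the unrolled graph reuses the same weights.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One step of a plain RNN: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    hidden = len(b)
    h_t = []
    for i in range(hidden):
        s = b[i]
        s += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        s += sum(W_h[i][j] * h_prev[j] for j in range(hidden))
        h_t.append(math.tanh(s))
    return h_t

def unroll(xs, h0, W_x, W_h, b):
    """Apply the same step, with the same weights, at every time step."""
    states = []
    h = h0
    for x_t in xs:
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states

# Illustrative weights: 2 input features, 2 hidden units (assumed values).
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.2, 0.0], [-0.4, 0.6]]
b = [0.0, 0.1]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # a sequence of 3 time steps

states = unroll(xs, [0.0, 0.0], W_x, W_h, b)
print(len(states))  # one hidden state per time step
```

Each entry of `states` corresponds to one copy of the network in the unrolled picture; the loop body is the repeating module.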

Recurrent layers can be used similarly to feed-forward layers except that the input shape is … RecurrentLayer, Dense recurrent neural network (RNN) layer. An LSTM network is a recurrent neural network that has LSTM cell blocks in place of our standard neural network layers. These cells have various components. Masking and Sequence Classification After Training. Combining RNN Layers with Other Layer Types.
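As a rough illustration of the components inside an LSTM cell block, the sketch below implements one step of the commonly used LSTM formulation (forget, input, and output gates plus a candidate value) in plain Python. The function names and the `params` layout are assumptions made for this example; real libraries store and name their weights differently.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W x + U h + b for one gate."""
    return [
        b[i]
        + sum(W[i][j] * x[j] for j in range(len(x)))
        + sum(U[i][j] * h[j] for j in range(len(h)))
        for i in range(len(b))
    ]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps a gate name to its own (W, U, b)."""
    f = [sigmoid(z) for z in affine(*params["forget"], x, h_prev)]
    i = [sigmoid(z) for z in affine(*params["input"], x, h_prev)]
    o = [sigmoid(z) for z in affine(*params["output"], x, h_prev)]
    g = [math.tanh(z) for z in affine(*params["candidate"], x, h_prev)]
    # The memory cell keeps part of the old state and adds gated new content.
    c = [f[k] * c_prev[k] + i[k] * g[k] for k in range(len(c_prev))]
    # The hidden state is a gated view of the memory cell.
    h = [o[k] * math.tanh(c[k]) for k in range(len(c))]
    return h, c

# Toy setup: 1 input feature, 1 memory unit, all weights zero (assumed values).
zero = ([[0.0]], [[0.0]], [0.0])
params = {name: zero for name in ("forget", "input", "output", "candidate")}
h, c = lstm_step([1.0], [0.0], [1.0], params)
```

With all-zero weights every gate evaluates to 0.5 and the candidate to 0, so the cell simply halves its previous memory; nonzero weights let each gate respond to the input and the previous hidden state.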

Flashback: A look into Recurrent Neural Networks (RNN). The first layer is an LSTM layer with 3 memory units and it returns sequences. Wrapper allowing a stack of RNN cells to behave as a … `StackedRNNCells(Layer):`
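Why the first layer of a stack returns sequences can be sketched with a toy example. The code below uses a scalar `tanh` recurrence instead of a real LSTM to stay short; `run_layer`, its `return_sequences` flag, and the weights are hypothetical names and values chosen for illustration.

```python
import math

def run_layer(xs, w_x, w_h, return_sequences):
    """Scalar toy recurrent layer: h_t = tanh(w_x * x_t + w_h * h_prev)."""
    h = 0.0
    outputs = []
    for x_t in xs:
        h = math.tanh(w_x * x_t + w_h * h)
        outputs.append(h)
    # Either expose every time step or only the final state.
    return outputs if return_sequences else outputs[-1]

xs = [0.5, -1.0, 0.25, 0.75]

# The first layer must return the whole sequence so the next
# recurrent layer has one input per time step.
seq = run_layer(xs, w_x=1.0, w_h=0.5, return_sequences=True)

# The last layer can return only its final state, e.g. for classification.
final = run_layer(seq, w_x=0.8, w_h=-0.3, return_sequences=False)
```

If the first layer returned only its final state, the second layer would receive a single value rather than a sequence to recur over.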

For example, to construct a … The number of hidden layers and the number of memory cells in an LSTM always depend on the application domain and the context where you want … Overview of the feed-forward neural network and RNN structures. Thus the RNN came into existence, which solved this issue with the help of a hidden layer. The main and most important feature of an RNN is its hidden state, which … The core components of an LSTM network are a sequence input layer and an LSTM layer. A sequence input layer inputs sequence or time series data into the … Corresponds to the SimpleRNN Keras layer.

Name prefix: The name prefix of the layer. `None, bias=True, weights_init=None, return_seq=False, …` Here a recurrent neural network (RNN) with a long short-term memory (LSTM) layer was trained to generate sequences of characters on texts … LSTM introduces the memory cell, a unit of computation that replaces traditional artificial neurons in the hidden layer of the network. Abstract: The Long Short-Term Memory (LSTM) layer is an important advancement in the field of neural networks and machine learning … This Recurrent Neural Network tutorial will help you understand what is a … This summer we would like to expand the implementations to include the LSTM and GRU layer types.

The candidate is expected to develop CPU and GPU … Unidirectional Long Short-Term Memory Recurrent Neural Network with Recurrent Output Layer for Low-Latency Speech Synthesis. RNN) using the character-level language … Weights for each gate and layer need to be set separately for the …
