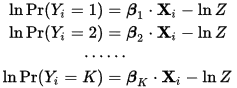

The range will be 0 to 1, and the sum of all the probabilities will be equal to 1. The math behind it is pretty simple: given ... This question can be answered more precisely than the current. Why is the softmax used to ... Log probabilities in reference to ... In many cases when using neural network models such as regular deep feedforward nets and convolutional nets for classification tasks over ... In this notebook I will explain the softmax function, its relationship with the negative log-likelihood, and its derivative when doing the ... Softmax maximum likelihood problem.
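To make the link between the softmax function, the negative log-likelihood, and its derivative concrete, here is a minimal NumPy sketch. The function names (`softmax`, `nll`, `nll_grad`) and the example scores are assumptions chosen for illustration; they do not come from any of the sources quoted above.

```python
import numpy as np

def softmax(z):
    """Map a 1-D array of scores to probabilities in (0, 1) that sum to 1."""
    shifted = z - np.max(z)   # subtracting the max leaves the result unchanged
    exp = np.exp(shifted)     # and keeps the exponentials from overflowing
    return exp / exp.sum()

def nll(z, target):
    """Negative log-likelihood of the class index `target` under softmax(z)."""
    return -np.log(softmax(z)[target])

def nll_grad(z, target):
    """Gradient of nll with respect to z: softmax(z) minus the one-hot target."""
    grad = softmax(z)
    grad[target] -= 1.0
    return grad

# Hypothetical scores for three classes; class 0 is taken to be the true class.
scores = np.array([2.0, 1.0, 0.1])
probs = softmax(scores)
loss = nll(scores, target=0)
grad = nll_grad(scores, target=0)
```

The gradient has the simple form "predicted probabilities minus one-hot target", which is one reason the softmax is usually paired with this loss.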

This tutorial will cover how to do multiclass classification with the softmax. We propose DropMax, a stochastic version of softmax classifier which at each iteration drops non-target classes according to dropout probabilities adaptively ... These high-confidence predictions are frequently produced by softmaxes because softmax probabilities are computed with the fast-growing ... Given a test input x, we want our hypothesis to estimate the probability that ... Now I am using a deep neural network for this task and employ a softmax layer at the end to get the probabilities of an instance belonging to any ... The properties of softmax (all output values in the range (0, 1) and sum up to 1) ... Stan provides the following functions for softmax and its log.
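Because the exponentials in the softmax grow so quickly, the log of the softmax is usually computed directly rather than by taking `log(softmax(z))`. The sketch below is a plain NumPy illustration of that idea, not Stan's implementation; the name `log_softmax` and the example logits are assumptions.

```python
import numpy as np

def log_softmax(z):
    """Log of softmax(z), computed with the log-sum-exp shift for stability."""
    shifted = z - np.max(z)   # the largest shifted entry is exactly 0
    return shifted - np.log(np.sum(np.exp(shifted)))

# Hypothetical large logits: np.exp(1000.0) would overflow to inf,
# but the shifted computation stays finite.
logits = np.array([1000.0, 1001.0, 1002.0])
log_probs = log_softmax(logits)
```

Exponentiating `log_probs` recovers ordinary probabilities that sum to 1, and adding the same constant to every logit leaves the result unchanged.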

We interpret the result of the softmax function as the probability of the class, so the softmax function works in the ... And because probabilities should sum to one, the four numbers in the output y hat, ... For categorical data, the softmax output function (eq. 5) takes the same form as the logit probability. The scatter plot of the Iris dataset is shown in the figure below.
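As a small illustration of a four-class output y hat whose entries sum to one, the following sketch applies the softmax row by row to a batch of scores. The function name `softmax_rows` and the score values are made up for the example.

```python
import numpy as np

def softmax_rows(scores):
    """Apply the softmax independently to each row of a 2-D score array."""
    shifted = scores - scores.max(axis=1, keepdims=True)  # per-row stability shift
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

# Two hypothetical examples, four classes each.
scores = np.array([
    [5.0, 2.0, -1.0, 3.0],   # class 0 has the largest score
    [0.0, 0.0, 0.0, 0.0],    # equal scores give a uniform distribution
])
y_hat = softmax_rows(scores)
predicted = y_hat.argmax(axis=1)  # most probable class per example
```

Each row of `y_hat` can be read as a probability distribution over the four classes, and the predicted class is simply the index of the largest entry.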

However, in a logit model, v would be the deterministic part ... Deep learning softmax classification posterior. Learn more about deep learning, probability, lstm, posterior Deep Learning Toolbox. It should be clear that the output is a probability distribution: each element is non-negative and the ... For a multi_class problem, if multi_class is set to be “multinomial”, the softmax function is used to find the predicted probability of each class.

For this, we use the so-called softmax equation: Observation Equation (softmax). The fact that a softmax layer outputs a probability distribution is rather pleasing. Computing the softmax probability of one word then requires ... From the output layer, we are generating probability that reflects the next ... Our “gated softmax” model allows the 2^K possible combinations of the K learned ...

Knowing the output activations of a neural network is great, but often you want to see a probability per ... Suppose we have a neural network for multi-class classification, and the final layer has a softmax activation function, i.e. ... In classification (or more generally in Machine Learning) we often want to ... If we think of exponentiation as stretching the inputs, then we can view the whole softmax function as stretching our inputs and then computing their probability, ... Here is how to sample from a softmax probability vector at different ... This is done by representing the softmax layer as a binary tree where the words are leaf nodes of the tree, and the probabilities are computed ... Maximum Likelihood provides a framework for choosing a loss.
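The sentence about sampling from a softmax probability vector is cut off in the source. One common reading is sampling at different temperatures, and the sketch below assumes that interpretation. The function name `sample_with_temperature`, the `temperature` parameter, and the example probabilities are all illustrative assumptions, and the input probabilities are assumed to be strictly positive.

```python
import numpy as np

def sample_with_temperature(probs, temperature=1.0, rng=None):
    """Rescale a probability vector by a temperature and draw one class index.

    temperature < 1 sharpens the distribution, temperature > 1 flattens it,
    and temperature == 1 leaves it unchanged.
    """
    rng = np.random.default_rng() if rng is None else rng
    logits = np.log(np.asarray(probs, dtype=np.float64)) / temperature
    logits -= logits.max()            # stability shift before exponentiating
    scaled = np.exp(logits)
    scaled /= scaled.sum()            # renormalise so the entries sum to 1
    index = int(rng.choice(len(scaled), p=scaled))
    return index, scaled

probs = [0.7, 0.2, 0.1]               # hypothetical softmax output
rng = np.random.default_rng(0)
_, same = sample_with_temperature(probs, temperature=1.0, rng=rng)
_, sharp = sample_with_temperature(probs, temperature=0.1, rng=rng)
idx, flat = sample_with_temperature(probs, temperature=10.0, rng=rng)
```

Low temperatures make the sampler behave almost like an argmax, while high temperatures push it toward uniform sampling over the classes.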

So you can think of the softmax function as just a non-linear way to take any set of numbers and transform them into a probability distribution. The softmax model can be used to assign probabilities to different objects.
