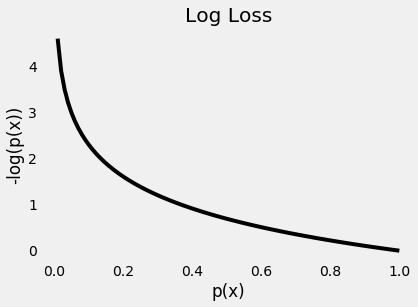

Is there any difference between cross-entropy loss and log loss? The same question comes up in TensorFlow when comparing a single sigmoid output trained with log loss versus two outputs. In practice, log loss and cross entropy are almost the same: both are measures of error used in machine learning. In words, cross entropy is the negative sum, over the classes, of the actual probabilities multiplied by the logs of the predicted probabilities.
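The verbal definition above can be sketched in a few lines of plain Python. This is a minimal illustration, not any library's implementation; the function names `cross_entropy` and `binary_log_loss` and the clipping constant `eps` are assumptions made for the example.

```python
import math

def cross_entropy(actual, predicted, eps=1e-15):
    """Negative sum of (actual probability * log of predicted probability)."""
    total = 0.0
    for p_true, p_pred in zip(actual, predicted):
        p_pred = min(max(p_pred, eps), 1.0)  # clip to avoid log(0); eps is an assumed constant
        total += p_true * math.log(p_pred)
    return -total

def binary_log_loss(y_true, p, eps=1e-15):
    """Log loss for one binary label y_true in {0, 1}, with p the predicted P(y = 1)."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))

# For a binary label, log loss is the cross entropy over the two classes.
ce = cross_entropy([0.0, 1.0], [0.2, 0.8])   # classes ordered as [P(y=0), P(y=1)]
ll = binary_log_loss(1, 0.8)
print(ce, ll)
```

With a one-hot label, only the term for the true class survives, which is why the two functions agree here.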

Smaller values indicate a better prediction. The terminology can be confusing: categorical cross-entropy loss, binary cross-entropy loss, softmax loss, logistic loss and focal loss all appear in discussions of this topic. The cross-entropy loss is closely related to the Kullback-Leibler divergence between the empirical distribution and the predicted distribution. Log loss, short for logarithmic loss, is a loss function for classification. A perfect model would have a log loss (or cross-entropy) value of 0. Log loss is intimately tied to information theory: it is the cross entropy between the distribution of the true labels and the predictions.
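The relationship to the Kullback-Leibler divergence can be checked numerically. The sketch below assumes the standard identity "cross entropy = entropy + KL divergence" and uses two made-up distributions `p` and `q`; all function names are illustrative.

```python
import math

def entropy(p):
    """Shannon entropy H(p), in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross entropy H(p, q); assumes q is positive wherever p is positive."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.7, 0.2, 0.1]   # hypothetical "true" distribution
q = [0.5, 0.3, 0.2]   # hypothetical predicted distribution

gap = cross_entropy(p, q) - (entropy(p) + kl_divergence(p, q))
perfect = cross_entropy([1.0, 0.0, 0.0], [1.0, 0.0, 0.0])
print(gap, perfect)
```

When the true distribution is one-hot, its entropy is zero, so the cross entropy and the KL divergence coincide, and a prediction that puts all its mass on the true class gives a loss of zero.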

Why are there so many ways to compute the cross-entropy loss in PyTorch? Let $a$ be a placeholder variable for the logistic sigmoid function output, $a = \sigma(z) = 1 / (1 + e^{-z})$, where $z$ is the input to the sigmoid. Binary cross entropy vs. MSE: mean squared error can be viewed as a log loss for Gaussian distributions. The log loss score heavily penalizes predicted probabilities that are far away from their expected value.
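To make the "heavy penalty" concrete, the following sketch compares log loss with squared error for a sigmoid output $a$ when the true label is 1. The pre-activation values in `zs` are arbitrary choices for illustration, and the helper names are assumptions.

```python
import math

def sigmoid(z):
    """Logistic sigmoid a = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(y, a, eps=1e-15):
    """Binary log loss for label y in {0, 1} and predicted probability a."""
    a = min(max(a, eps), 1.0 - eps)
    return -(y * math.log(a) + (1 - y) * math.log(1.0 - a))

def squared_error(y, a):
    """Squared error between label and predicted probability."""
    return (y - a) ** 2

zs = [4.0, 0.0, -4.0]   # confident-right, unsure, confident-wrong (for label 1)
for z in zs:
    a = sigmoid(z)
    print(f"a={a:.4f}  log_loss={log_loss(1, a):.4f}  squared_error={squared_error(1, a):.4f}")
```

Squared error on a probability can never exceed 1, whereas log loss grows without bound as a confident prediction moves toward the wrong label.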

Log loss, also called “logistic loss,” “logarithmic loss,” or “cross entropy,” can be used as a measure of error for predicted probabilities. Any loss consisting of a negative log-likelihood is a cross-entropy between the empirical distribution defined by the training set and the probability distribution defined by the model. I set up a basic process and asked RM to compute cross entropy. Library documentation lists `SoftmaxCrossEntropyLoss`, which computes the softmax cross-entropy loss, and `LogisticLoss`, which calculates the logistic loss (for binary losses only).

Machine Learning Series (Logistic Regression): in R, the log loss of a fitted logistic regression can be computed with `LogLoss(y_pred = logreg$fitted.values, y_true = mtcars$vs)`. Cross-entropy loss increases as the predicted probability diverges from the actual label. Do I use softmax or log softmax for cross-entropy loss in TensorFlow? TomFinley: LogLoss is certainly a thing, and is different than LogLossReduction. Actually, the cool kids usually call it the cross-entropy.
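On the softmax versus log-softmax question, one way to see the difference is a plain-Python sketch (not TensorFlow code; every function name here is an assumption for illustration). Cross entropy for a single true class is the negative log of the softmax probability, and computing the log-softmax directly from the logits avoids taking the log of a probability that has underflowed to zero.

```python
import math

def softmax(logits):
    """Softmax with the usual max-subtraction for numerical stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def log_softmax(logits):
    """Log of the softmax, computed via log-sum-exp without forming the probabilities."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]

def cross_entropy_from_logits(logits, true_class):
    """Cross-entropy loss for one example whose label is the index true_class."""
    return -log_softmax(logits)[true_class]

logits = [2.0, 1.0, 0.1]
via_softmax = -math.log(softmax(logits)[0])
via_log_softmax = cross_entropy_from_logits(logits, 0)
print(via_softmax, via_log_softmax)

# With extreme logits the softmax probability of class 1 underflows to 0.0,
# so math.log would fail, while the log-softmax route stays finite.
extreme = cross_entropy_from_logits([1000.0, 0.0], 1)
print(extreme)
```

This is also why loss functions in deep learning libraries often ask for raw logits rather than probabilities that have already been passed through a softmax.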

Dive deeper into the concepts of entropy, cross entropy and KL divergence. The log loss can be optimized by gradient descent; this is the so-called cross-entropy loss. Approach 2: one-versus-the-rest. Review: binary logistic regression.

Stochastic gradient descent. LogLoss-BERAF: an ensemble-based machine learning model (authors include Larin A, Kanygina A, Govorun V and Arapidi G). In this case cross entropy (log loss) can be used.

This function is convex, like hinge loss, and can be minimised using SGD.
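As a rough illustration of minimising log loss with SGD, here is a small one-feature logistic regression in plain Python. The toy data, learning rate, epoch count and seed are all assumptions chosen for the example, and the data are deliberately not linearly separable so the weights stay finite.

```python
import math
import random

def sigmoid(z):
    """Numerically safe logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def mean_log_loss(w, b, xs, ys, eps=1e-15):
    """Average binary log loss of the model sigmoid(w * x + b) on the data."""
    total = 0.0
    for x, y in zip(xs, ys):
        a = min(max(sigmoid(w * x + b), eps), 1.0 - eps)
        total -= y * math.log(a) + (1 - y) * math.log(1.0 - a)
    return total / len(xs)

def sgd_logistic(xs, ys, lr=0.1, epochs=200, seed=0):
    """Plain SGD: one parameter update per training example, in shuffled order."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            a = sigmoid(w * xs[i] + b)
            grad = a - ys[i]          # derivative of log loss w.r.t. z = w * x + b
            w -= lr * grad * xs[i]
            b -= lr * grad
    return w, b

# Hypothetical toy data: larger x tends to mean label 1, with some overlap.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]

initial = mean_log_loss(0.0, 0.0, xs, ys)   # log(2) when all predictions are 0.5
w, b = sgd_logistic(xs, ys)
final = mean_log_loss(w, b, xs, ys)
print(initial, final, w, b)
```

Because the loss is convex in `w` and `b`, there are no spurious local minima for SGD to get stuck in; with a constant learning rate the parameters settle into a small neighbourhood of the minimiser rather than converging exactly.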
