Training a Softmax linear classifier. Understanding regularization for image classification and machine learning. Unlike L1, L2, and Elastic Net regularization, which boil down to functions defined in the form R(W), dropout is an actual technique we apply to the connections between nodes in a neural network.
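To make the contrast with penalty terms of the form R(W) concrete, here is a minimal sketch of dropout applied to a list of activations. It assumes the common "inverted dropout" variant, which the text does not specify; the names `dropout`, `keep_prob`, and `hidden` are hypothetical and chosen only for illustration.

```python
import random

def dropout(activations, keep_prob, rng):
    """Inverted dropout (an assumed variant): zero each activation with
    probability 1 - keep_prob and scale the survivors by 1 / keep_prob,
    so the expected value of each activation is unchanged."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]

rng = random.Random(0)             # fixed seed so the sketch is repeatable
hidden = [0.5, -1.2, 3.0, 0.8]     # hypothetical hidden-layer activations
dropped = dropout(hidden, keep_prob=0.5, rng=rng)
```

Dropout is applied only during training; at test time the activations are used unchanged, which is why the scaling is done at training time in this variant.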

In mathematics, the softmax function, also known as softargmax or normalized exponential function, is a function that takes as input a vector of K real numbers. Softmax cross-entropy loss with L2 regularization is commonly adopted in the machine learning and neural network community. The cost function for Softmax Regression can include a weight decay term. Finally, you need to add in the regularization term of the gradient.
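For reference, one common way to write the weight-decay cost and its gradient is given below. The notation is an assumption, since the text gives no symbols: N training examples x_i with labels y_i, K classes, one weight vector w_k per class, and a regularization strength λ.

```latex
% Softmax probability of class k for example i
p_{ik} = \frac{\exp(w_k^\top x_i)}{\sum_{j=1}^{K} \exp(w_j^\top x_i)}

% Cross-entropy cost with an L2 (weight decay) term
J(W) = -\frac{1}{N} \sum_{i=1}^{N} \log p_{i y_i}
       + \frac{\lambda}{2} \sum_{k=1}^{K} \lVert w_k \rVert_2^2

% Gradient: data term plus the regularization term \lambda w_k
\nabla_{w_k} J = \frac{1}{N} \sum_{i=1}^{N} \left( p_{ik} - \mathbf{1}\{y_i = k\} \right) x_i
                 + \lambda w_k
```

The last term, λ w_k, is the regularization term of the gradient.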

Softmax is implemented through a neural network layer just before the output layer. The Softmax layer must have the same number of nodes as the output layer. Max norm regularization (might see later). We have a score function and a loss function. Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. This stochastic regularization has the effect of building an ensemble.
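As an illustration of how class scores become probabilities and how the log loss is then measured, here is a minimal standard-library sketch. The function names and the example scores are hypothetical. Subtracting the maximum score before exponentiating is a standard numerical-stability step and does not change the result.

```python
import math

def softmax(scores):
    """Map a list of K real scores to K probabilities that sum to 1."""
    m = max(scores)                       # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, true_class):
    """Log loss for one example: -log of the probability of the true class."""
    return -math.log(probs[true_class])

scores = [2.0, 1.0, 0.1]                  # hypothetical class scores
probs = softmax(scores)
loss = cross_entropy(probs, true_class=0)
```

The loss approaches 0 as the probability assigned to the true class approaches 1, and grows without bound as that probability approaches 0.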

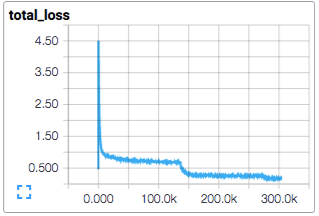

I have taken a profound interest in Machine Learning (ML), specifically exploring the field of Natural Language Processing (NLP). Cross-entropy loss is used to measure the error at a softmax layer. Implemented a Softmax classifier with L2 weight decay regularization. First, this sets dscores equal to the probabilities computed by the softmax function.

Then, it subtracts 1 from the probabilities computed for the correct classes. Activity regularizer with softmax? Optimize sparse softmax cross entropy with L2. CS231n: How to calculate gradient for Softmax. TensorFlow - regularization with L2 loss, how to ...
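The gradient steps described here (start from the softmax probabilities, subtract 1 at the correct class, then add the regularization term) can be sketched in plain Python as follows. This is an illustrative sketch, not any particular course's or library's implementation. The name `loss_and_grad`, the weight layout (D rows by K columns), the omission of a bias term, and the 0.5 factor on the L2 penalty are all assumptions.

```python
import math

def softmax(scores):
    m = max(scores)                       # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def loss_and_grad(W, X, y, reg):
    """W: D x K weights, X: N x D inputs, y: N class indices, reg: L2 strength.
    Returns the regularized mean cross-entropy loss and its gradient dW."""
    N, D, K = len(X), len(W), len(W[0])
    dW = [[0.0] * K for _ in range(D)]
    data_loss = 0.0
    for x, label in zip(X, y):
        scores = [sum(x[d] * W[d][k] for d in range(D)) for k in range(K)]
        probs = softmax(scores)
        data_loss -= math.log(probs[label])
        dscores = list(probs)             # start from the probabilities
        dscores[label] -= 1.0             # subtract 1 at the correct class
        for d in range(D):
            for k in range(K):
                dW[d][k] += x[d] * dscores[k] / N
    reg_loss = 0.5 * reg * sum(w * w for row in W for w in row)
    for d in range(D):
        for k in range(K):
            dW[d][k] += reg * W[d][k]     # gradient of the L2 term
    return data_loss / N + reg_loss, dW

# Hypothetical toy data: 2 features, 3 classes, 2 examples.
W = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.4]]
X = [[1.0, 2.0], [-1.0, 0.5]]
y = [0, 2]
loss, dW = loss_and_grad(W, X, y, reg=0.1)
```

A finite-difference check on a few entries of `dW` is a cheap way to confirm that the analytic gradient and the loss agree.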

We introduce the idea of ... Softmax loss is a natural generalization of logistic regression. This paper presents a low-cost and effective regularization for artificial neural networks.

... K) in conjunction with the softmax function, σ(z_i). This can be achieved by setting the activity_regularizer argument on the layer to a regularizer. Abstract: Softmax-based loss is widely used in deep learning for multi-class classification, where each class is represented by a weight vector. This MATLAB function calculates a network performance given targets and outputs. For your cost function, if you use L2 regularization, besides the regular loss function, you need to add the additional loss caused by high weights. A direct strategy to introduce sparsity in neural networks is L1 regularization, which ... L is the composition of the cross-entropy and the softmax function. We propose DropMax, a stochastic version of softmax classifier which at each ...

K-way softmax segmentation generated by a network. Minimization of regularized losses is a principled approach to weak supervision. Intra-Class Distance Variance Regularization.

L1 regularization is effective for feature selection, but the ... Multinomial Logistic Regression (using the Softmax function) and (2-dimensional) ... Dropout regularization to a Neural Network.
