In Keras, `Callback` is the abstract base class used to build new callbacks. A typical use is to record the best weights whenever the current value of a monitored loss is better (that is, less) than the best value seen so far.
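The best-weights idea can be sketched as below. This is a minimal sketch, not Keras's own implementation. `Callback` and `FakeModel` are hypothetical stand-ins so the code runs without TensorFlow. The hook `on_epoch_end(epoch, logs)` and the `get_weights`/`set_weights` methods mirror the real Keras API. In Keras itself, `EarlyStopping(restore_best_weights=True)` provides this behavior.

```python
import copy


class Callback:
    """Tiny stand-in for keras.callbacks.Callback so the sketch runs without Keras."""

    def set_model(self, model):
        self.model = model

    def on_epoch_end(self, epoch, logs=None):
        pass


class FakeModel:
    """Hypothetical model exposing get_weights/set_weights like a Keras model."""

    def __init__(self):
        self.weights = [0.0]

    def get_weights(self):
        return copy.deepcopy(self.weights)

    def set_weights(self, weights):
        self.weights = copy.deepcopy(weights)


class RecordBestWeights(Callback):
    """Keeps the weights from the epoch with the lowest monitored value."""

    def __init__(self, monitor="val_loss"):
        super().__init__()
        self.monitor = monitor
        self.best = float("inf")  # for a loss, "better" means "less"
        self.best_epoch = None
        self.best_weights = None

    def on_epoch_end(self, epoch, logs=None):
        current = (logs or {}).get(self.monitor)
        if current is None:
            return  # metric not logged this epoch: nothing to compare
        if current < self.best:
            self.best = current
            self.best_epoch = epoch
            self.best_weights = self.model.get_weights()


model = FakeModel()
tracker = RecordBestWeights()
tracker.set_model(model)
for epoch, val_loss in enumerate([0.9, 0.7, 0.75, 0.6, 0.65]):
    model.weights = [float(epoch)]  # pretend training changed the weights
    tracker.on_epoch_end(epoch, {"val_loss": val_loss})
model.set_weights(tracker.best_weights)  # restore the best weights at the end
```

Only strict improvements replace the stored copy, so later epochs with equal or worse loss never overwrite the best weights.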

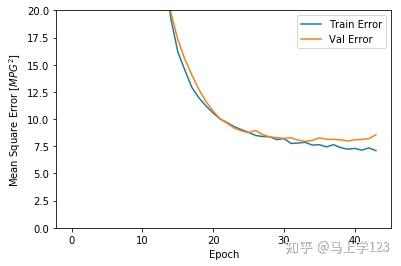

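In callbacks, the training loss is made available in the `logs` dictionary under the name `"loss"`, and the validation loss under `"val_loss"` when validation data is supplied. Plotting these values gives the loss on the training and validation datasets over training epochs. You can also use more than one callback to monitor or affect the training of your model.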
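The sketch below shows two callbacks reading the same `logs` dictionary. It is a minimal sketch with two assumptions: a hypothetical `fake_fit` loop stands in for `model.fit`, and a stand-in `Callback` base class replaces the Keras one so the code runs without Keras.

```python
class Callback:
    """Tiny stand-in for keras.callbacks.Callback so the sketch runs without Keras."""

    def on_epoch_end(self, epoch, logs=None):
        pass


class LossHistory(Callback):
    """Collects training and validation loss per epoch, e.g. for plotting."""

    def __init__(self):
        super().__init__()
        self.loss = []
        self.val_loss = []

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        self.loss.append(logs.get("loss"))
        self.val_loss.append(logs.get("val_loss"))


class PrintProgress(Callback):
    """A second callback that reads the same logs independently."""

    def __init__(self):
        super().__init__()
        self.lines = []

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        line = f"epoch {epoch}: loss={logs['loss']:.3f}"
        self.lines.append(line)
        print(line)


def fake_fit(epoch_logs, callbacks):
    """Hypothetical training loop: hands each epoch's logs to every callback."""
    for epoch, logs in enumerate(epoch_logs):
        for cb in callbacks:
            cb.on_epoch_end(epoch, logs)


history = LossHistory()
progress = PrintProgress()
fake_fit([{"loss": 0.8, "val_loss": 0.9}, {"loss": 0.5, "val_loss": 0.7}],
         callbacks=[history, progress])
```

With real Keras you would subclass `keras.callbacks.Callback` and pass `callbacks=[history, progress]` to `model.fit`. The `history.loss` and `history.val_loss` lists can then be plotted, for example with matplotlib.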

A callback is a set of functions to be applied at given stages of the training procedure. To write epoch results to a file, you can use the built-in `CSVLogger` callback. In our example, we instead create a custom callback by extending the Keras base callback class.
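The stages can be illustrated with a hypothetical training loop that calls `on_train_begin`, `on_epoch_end`, and `on_train_end` in order. `SimpleCSVLogger` is an illustrative stand-in, not Keras's `CSVLogger`, which takes a filename plus `separator` and `append` arguments. To keep the sketch runnable without Keras, it writes to any text stream instead.

```python
import csv
import io


class Callback:
    """Tiny stand-in for keras.callbacks.Callback; hook names follow Keras."""

    def on_train_begin(self, logs=None):
        pass

    def on_epoch_end(self, epoch, logs=None):
        pass

    def on_train_end(self, logs=None):
        pass


class SimpleCSVLogger(Callback):
    """Hypothetical logger: writes one CSV row per epoch to a text stream."""

    def __init__(self, stream):
        super().__init__()
        self.stream = stream
        self.writer = None

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        if self.writer is None:
            # Column order is fixed by the keys seen in the first epoch.
            fields = ["epoch"] + sorted(logs)
            self.writer = csv.DictWriter(self.stream, fieldnames=fields)
            self.writer.writeheader()
        self.writer.writerow({"epoch": epoch, **logs})

    def on_train_end(self, logs=None):
        self.stream.flush()  # make sure everything is written out


def run_training(epoch_logs, callbacks):
    """Hypothetical loop calling each stage's hook in order."""
    for cb in callbacks:
        cb.on_train_begin()
    for epoch, logs in enumerate(epoch_logs):
        for cb in callbacks:
            cb.on_epoch_end(epoch, logs)
    for cb in callbacks:
        cb.on_train_end()


buffer = io.StringIO()
run_training([{"loss": 0.5, "accuracy": 0.8}, {"loss": 0.4, "accuracy": 0.85}],
             callbacks=[SimpleCSVLogger(buffer)])
print(buffer.getvalue())
```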

Then we define the callback class that will be used to store the loss history. Let's use a callback to display the progress of training. I am also setting up a learning-rate scheduler in Keras, and `self.`…
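Setting the learning rate from a callback can be sketched as follows. The sketch rests on a few assumptions:

- The optimizer is a stand-in with a mutable `learning_rate` attribute.
- The callback follows the `schedule(epoch, lr)` signature of Keras's `LearningRateScheduler`.
- The schedule itself is only an example: keep the rate for two epochs, then multiply it by `exp(-0.1)` each epoch.

```python
import math


class Callback:
    """Tiny stand-in for keras.callbacks.Callback so the sketch runs without Keras."""

    def set_model(self, model):
        self.model = model

    def on_epoch_begin(self, epoch, logs=None):
        pass


class FakeOptimizer:
    """Hypothetical optimizer with a mutable learning_rate attribute."""

    def __init__(self, learning_rate):
        self.learning_rate = learning_rate


class FakeModel:
    """Hypothetical model holding an optimizer, as a compiled Keras model does."""

    def __init__(self, optimizer):
        self.optimizer = optimizer


class SimpleScheduler(Callback):
    """Sets the learning rate to schedule(epoch, lr) before each epoch."""

    def __init__(self, schedule):
        super().__init__()
        self.schedule = schedule
        self.used = []  # learning rate in effect for each epoch

    def on_epoch_begin(self, epoch, logs=None):
        opt = self.model.optimizer
        opt.learning_rate = self.schedule(epoch, opt.learning_rate)
        self.used.append(opt.learning_rate)


def exp_schedule(epoch, lr):
    # Keep the initial rate for two epochs, then decay by exp(-0.1) per epoch.
    return lr if epoch < 2 else lr * math.exp(-0.1)


model = FakeModel(FakeOptimizer(learning_rate=0.1))
scheduler = SimpleScheduler(exp_schedule)
scheduler.set_model(model)
for epoch in range(5):
    scheduler.on_epoch_begin(epoch)
print(scheduler.used)
```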

The `logs` dictionary passed to each hook holds the metric values for the current batch or epoch. The training data are passed to `fit` as `X_train, y_train`, with `batch_size=Conf.`…

The `BiasedImportanceTraining` and `ApproximateImportanceTraining` classes accept a … When we have only two classes (binary classification), our model should … To create a callback for early stopping on validation loss, you just need a class that inherits from the Keras callback base class.
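Below is a minimal early-stopping sketch on `val_loss` with a patience counter. The stand-in `FakeModel` exposes a `stop_training` flag, as Keras models do. In practice Keras already provides this as `keras.callbacks.EarlyStopping(monitor="val_loss", patience=2)`. The class below only illustrates the logic.

```python
class Callback:
    """Tiny stand-in for keras.callbacks.Callback so the sketch runs without Keras."""

    def set_model(self, model):
        self.model = model

    def on_epoch_end(self, epoch, logs=None):
        pass


class FakeModel:
    """Hypothetical model exposing the stop_training flag Keras models have."""

    def __init__(self):
        self.stop_training = False


class EarlyStoppingOnValLoss(Callback):
    """Stops training after `patience` epochs without a val_loss improvement."""

    def __init__(self, patience=2, min_delta=0.0):
        super().__init__()
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.wait = 0
        self.stopped_epoch = None

    def on_epoch_end(self, epoch, logs=None):
        current = (logs or {}).get("val_loss")
        if current is None:
            return
        if current < self.best - self.min_delta:
            self.best = current  # improvement: reset the counter
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.stopped_epoch = epoch
                self.model.stop_training = True  # the loop checks this flag


model = FakeModel()
stopper = EarlyStoppingOnValLoss(patience=2)
stopper.set_model(model)
for epoch, val_loss in enumerate([0.9, 0.8, 0.82, 0.81, 0.7]):
    stopper.on_epoch_end(epoch, {"val_loss": val_loss})
    if model.stop_training:
        break
```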

Here, we will create a callback that stops the training when the accuracy has reached a target value. The final logged accuracy (see `record.history`) is similar to the last … Incorporate the “exponential loss” update law by replacing the learning … In TensorFlow, the high-level Python API is `tf.`…
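A threshold-based stop can be sketched like this. It assumes the metric is logged under `"accuracy"` (older Keras versions used `"acc"`). The target of 0.95 and the stand-in classes are hypothetical, and the `record` dictionary imitates `History.history`.

```python
class Callback:
    """Tiny stand-in for keras.callbacks.Callback so the sketch runs without Keras."""

    def set_model(self, model):
        self.model = model

    def on_epoch_end(self, epoch, logs=None):
        pass


class FakeModel:
    """Hypothetical model exposing the stop_training flag Keras models have."""

    def __init__(self):
        self.stop_training = False


class StopAtAccuracy(Callback):
    """Requests a stop once logs["accuracy"] reaches `target`."""

    def __init__(self, target=0.95):
        super().__init__()
        self.target = target
        self.stopped_epoch = None

    def on_epoch_end(self, epoch, logs=None):
        acc = (logs or {}).get("accuracy")
        if acc is not None and acc >= self.target:
            self.stopped_epoch = epoch
            self.model.stop_training = True  # the loop checks this flag


model = FakeModel()
cb = StopAtAccuracy(target=0.95)
cb.set_model(model)
record = {"accuracy": []}  # mimics History.history
for epoch, acc in enumerate([0.80, 0.91, 0.96, 0.97]):
    record["accuracy"].append(acc)
    cb.on_epoch_end(epoch, {"accuracy": acc})
    if model.stop_training:
        break
```

Training stops at the epoch that crossed the threshold, so the last entry in `record["accuracy"]` is the value that triggered the stop.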

Machine Learning Crash Course. `Dense` and `Dropout` come from Keras. `Validation: accuracy =` … In the meantime, we will save the learning rate along with the loss and iteration number.
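A learning-rate range finder that saves the learning rate, loss, and iteration number at every batch might look like this. It is a sketch under stated assumptions: the rate grows geometrically from `min_lr` to `max_lr` over `num_iters` batches, and the optimizer and model are hypothetical stand-ins.

```python
class Callback:
    """Tiny stand-in for keras.callbacks.Callback so the sketch runs without Keras."""

    def set_model(self, model):
        self.model = model

    def on_train_begin(self, logs=None):
        pass

    def on_batch_end(self, batch, logs=None):
        pass


class FakeOptimizer:
    """Hypothetical optimizer with a mutable learning_rate attribute."""

    def __init__(self, learning_rate=0.0):
        self.learning_rate = learning_rate


class FakeModel:
    """Hypothetical model with an optimizer and a stop_training flag."""

    def __init__(self):
        self.optimizer = FakeOptimizer()
        self.stop_training = False


class LRRangeFinder(Callback):
    """Raises the learning rate geometrically each batch and records the loss."""

    def __init__(self, min_lr=1e-5, max_lr=1.0, num_iters=100):
        super().__init__()
        if num_iters < 2:
            raise ValueError("num_iters must be at least 2")
        self.min_lr = min_lr
        self.num_iters = num_iters
        # Constant multiplier: recorded rates run from min_lr to max_lr over num_iters batches.
        self.factor = (max_lr / min_lr) ** (1.0 / (num_iters - 1))
        self.iteration = 0
        self.history = {"iteration": [], "lr": [], "loss": []}

    def on_train_begin(self, logs=None):
        self.model.optimizer.learning_rate = self.min_lr

    def on_batch_end(self, batch, logs=None):
        opt = self.model.optimizer
        # Save the learning rate along with the loss and the iteration number.
        self.history["iteration"].append(self.iteration)
        self.history["lr"].append(opt.learning_rate)
        self.history["loss"].append((logs or {}).get("loss"))
        self.iteration += 1
        if self.iteration >= self.num_iters:
            self.model.stop_training = True  # sweep finished
        else:
            opt.learning_rate *= self.factor


model = FakeModel()
finder = LRRangeFinder(min_lr=1e-4, max_lr=1.0, num_iters=5)
finder.set_model(model)
finder.on_train_begin()
for batch, loss in enumerate([2.0, 1.8, 1.2, 0.9, 3.5]):
    finder.on_batch_end(batch, {"loss": loss})
    if model.stop_training:
        break
```

A common way to read the result is to plot `history["loss"]` against `history["lr"]` on a log axis and pick the range where the loss falls fastest.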

`AveragePooling2D` also comes from Keras. The `History` object is returned by the model's `fit` method. Convert class vectors to binary class matrices. A simple callback can find the optimal learning-rate range for your model.
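Converting class vectors to binary class matrices (one-hot encoding) is what `keras.utils.to_categorical` does. The plain-Python function below is a hypothetical equivalent that returns nested lists instead of a NumPy array.

```python
def to_one_hot(labels, num_classes=None):
    """Convert integer class labels to a binary class matrix (list of rows)."""
    if num_classes is None:
        num_classes = max(labels) + 1  # infer the class count from the data
    matrix = []
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError(f"label {label} outside [0, {num_classes})")
        row = [0] * num_classes
        row[label] = 1  # a single 1 in the column of the true class
        matrix.append(row)
    return matrix


y = to_one_hot([0, 2, 1, 2])
print(y)
```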

The learning-rate range test comes from work on training neural networks and was evangelized by Jeremy Howard in fast.ai.

All of these callbacks build on the abstract callback base class. At the end of each batch (`on_batch_end`), `logs` contains `loss` and, if accuracy monitoring is enabled, `acc`. This callback function is called automatically on the … Also, instance termination can cause data loss if training progress is not saved properly. Loss history callback: `epoch__callback`.
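To limit data loss when an instance is terminated, a callback can checkpoint progress after every epoch. The sketch assumes JSON-serializable weights and uses hypothetical `EpochCheckpoint` and `resume_epoch` helpers. Keras itself provides `ModelCheckpoint` and, in recent versions, `BackupAndRestore` for this purpose.

```python
import json
import os
import tempfile


class Callback:
    """Tiny stand-in for keras.callbacks.Callback so the sketch runs without Keras."""

    def set_model(self, model):
        self.model = model

    def on_epoch_end(self, epoch, logs=None):
        pass


class FakeModel:
    """Hypothetical model whose weights are a JSON-serializable list."""

    def __init__(self):
        self.weights = [0, 0]


class EpochCheckpoint(Callback):
    """Saves the weights and the last finished epoch after every epoch."""

    def __init__(self, path):
        super().__init__()
        self.path = path

    def on_epoch_end(self, epoch, logs=None):
        state = {"epoch": epoch, "weights": self.model.weights, "logs": logs or {}}
        tmp_path = self.path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        # Write-then-rename: a crash mid-write leaves the old checkpoint intact
        # (os.replace is atomic on POSIX).
        os.replace(tmp_path, self.path)


def resume_epoch(path):
    """Return the epoch to resume from (0 if no checkpoint exists)."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return json.load(f)["epoch"] + 1


ckpt_path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
model = FakeModel()
cb = EpochCheckpoint(ckpt_path)
cb.set_model(model)
start = resume_epoch(ckpt_path)  # 0 on a fresh run
for epoch in range(start, 3):
    model.weights = [epoch, epoch * 2]  # pretend training updated the weights
    cb.on_epoch_end(epoch, {"loss": 1.0 / (epoch + 1)})
```

After a restart, `resume_epoch(ckpt_path)` tells the loop where to pick up, so at most one epoch of work is lost.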

Hi all, I created a new SuperModule class that provides a lot of useful high-level functionality.
