You can either instantiate an optimizer before passing it to `model.compile()`, or pass it by its string identifier (for example `'sgd'`). Arguments: `lr: float = 0.` SGD exposes a handful of different parameters. This page provides Python code examples for Keras. `name`: optional name prefix for the operations created when applying gradients.
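As a rough illustration of what the SGD optimizer does with `lr`, here is a minimal pure-Python sketch of the basic update `p <- p - lr * g`. It does not use Keras; the names `sgd_step` and `toy_grad` and the quadratic objective are hypothetical, and the exact gradient stands in for the noisy mini-batch gradient that real SGD would use.

```python
def sgd_step(params, grads, lr):
    """Apply the plain SGD rule p <- p - lr * g to every parameter."""
    return [p - lr * g for p, g in zip(params, grads)]


def toy_grad(params):
    """Gradient of the toy objective f(x, y) = x**2 + 3 * y**2 (illustration only)."""
    x, y = params
    return [2.0 * x, 6.0 * y]


params = [1.0, 1.0]
for _ in range(100):
    # Real SGD would use a noisy mini-batch gradient here instead of the exact one.
    params = sgd_step(params, toy_grad(params), lr=0.1)
print(params)  # both coordinates shrink toward the minimum at (0, 0)
```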

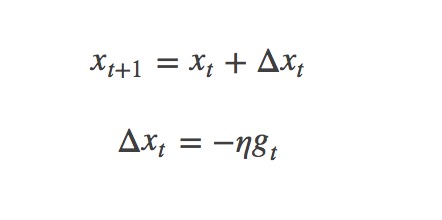

Momentum determines how much of the previous update is carried into the current step; it is a parameter that accelerates SGD in the relevant direction and dampens oscillations. The … in terms of accuracy in the figures above concurs … I think you could get the behaviour using the following scheme: create a new learning rate controller class using this. Optimizers are the functions used when compiling a Keras model. The pseudo code for SGD is shown in Algorithm 1. Stochastic gradient descent optimizer.
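The momentum idea and the "learning rate controller class" suggestion can be sketched together in plain Python. This assumes the classical momentum rule `v <- momentum * v - lr * g`, `w <- w + v`; `StepDecay` (halve the rate every fixed number of steps) and `MomentumSGD` are hypothetical names, not Keras classes, and step decay is only one possible controller.

```python
class StepDecay:
    """Hypothetical learning-rate controller: multiply lr by `factor` every `every` steps."""

    def __init__(self, initial_lr, factor=0.5, every=50):
        self.initial_lr = initial_lr
        self.factor = factor
        self.every = every

    def __call__(self, step):
        return self.initial_lr * self.factor ** (step // self.every)


class MomentumSGD:
    """SGD with classical momentum: v <- m * v - lr * g ; w <- w + v."""

    def __init__(self, lr_schedule, momentum=0.9):
        self.lr_schedule = lr_schedule  # any callable mapping step number -> learning rate
        self.momentum = momentum
        self.velocity = None
        self.step_count = 0

    def step(self, params, grads):
        if self.velocity is None:
            self.velocity = [0.0] * len(params)
        lr = self.lr_schedule(self.step_count)
        self.velocity = [self.momentum * v - lr * g for v, g in zip(self.velocity, grads)]
        self.step_count += 1
        return [p + v for p, v in zip(params, self.velocity)]


opt = MomentumSGD(StepDecay(initial_lr=0.05, factor=0.5, every=100), momentum=0.9)
w = [2.0]
for _ in range(200):
    w = opt.step(w, [2.0 * w[0]])  # gradient of the toy objective f(w) = w**2
print(w)
```

Because the controller is just a callable from step number to learning rate, it could be swapped for another schedule without touching the optimizer.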

I was training a simple fully connected NN recently (in Keras) and was stuck at a certain accuracy using SGD. But as soon as I changed it to Adam, the … Say, for a learning rate of 0.… SGD optimizer with Nesterov momentum. The SGD updater applies a learning rate only.
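To make the contrast concrete: plain SGD scales the gradient by the learning rate only, while Adam keeps running averages of the gradient and its square and rescales each parameter's step. The sketch below is a minimal pure-Python Adam using the defaults proposed in the original Adam paper (`beta1=0.9`, `beta2=0.999`, `eps=1e-8`); the class name and the toy objective are assumptions, and it does not explain why any particular model trained better.

```python
import math


class Adam:
    """Minimal Adam sketch for a list of scalar parameters."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # first-moment estimate (running mean of gradients)
        self.v = None  # second-moment estimate (running mean of squared gradients)
        self.t = 0

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


opt = Adam(lr=0.1)
first = opt.step([1.0], [2.0])  # f(x) = x**2, so g = 2x = 2 at x = 1
x = first
for _ in range(299):
    x = opt.step(x, [2.0 * x[0]])
print(first, x)
```

On the first step the bias-corrected ratio `m_hat / sqrt(v_hat)` is essentially `g / |g|`, so Adam moves by about `lr` regardless of the gradient's scale, whereas an SGD step would be `lr * g`.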

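The main difference between classical momentum and Nesterov momentum is where the gradient is evaluated: classical momentum evaluates it at the current parameters, while Nesterov momentum (Nesterov accelerated gradient) evaluates it at the look-ahead point reached by first applying the velocity. SGD can also be combined with momentum and weight decay. The optimizer is one of the two arguments required to compile a Keras model (the other is the loss): `from keras import …`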
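The difference between classical and Nesterov momentum, plus weight decay, fits in one small function. This is a minimal sketch assuming a scalar parameter and weight decay applied as an L2 penalty added to the gradient; `momentum_step` is a hypothetical helper, and library implementations often use an algebraically rearranged form of the Nesterov update rather than this explicit look-ahead form.

```python
def momentum_step(w, v, grad_fn, lr, momentum, weight_decay=0.0, nesterov=False):
    """One SGD-with-momentum update for a scalar parameter.

    Classical momentum evaluates the gradient at w; Nesterov momentum evaluates it
    at the look-ahead point w + momentum * v. Weight decay is folded in as an L2
    penalty, i.e. weight_decay * point is added to the gradient.
    """
    point = w + momentum * v if nesterov else w
    g = grad_fn(point) + weight_decay * point
    v = momentum * v - lr * g
    return w + v, v


def grad(w):
    return 2.0 * w  # gradient of the toy objective f(w) = w**2


for use_nesterov in (False, True):
    w, v = 1.0, 0.0
    for _ in range(100):
        w, v = momentum_step(w, v, grad, lr=0.05, momentum=0.9, nesterov=use_nesterov)
    print("nesterov" if use_nesterov else "classical", w)
```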

I want to practice Keras by coding XOR, but the result is not right; the following is my … In this article, I am covering Keras interview questions and only … `SGD(model.parameters(), lr=0.`… (a PyTorch-style call). In the Python code above about SGD, Adadelta and Keras …
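For the XOR question, a dependency-free sketch of the task can help separate modelling issues from framework issues. This is not Keras: it is a hypothetical 2-input, 4-hidden-unit (tanh), 1-output (sigmoid) network trained with per-example SGD on binary cross-entropy, with the sizes, learning rate, epoch count, and seed all chosen for illustration.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_xor(hidden=4, lr=0.5, epochs=2000, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output net on XOR with per-example SGD.

    Returns (initial_loss, final_loss, predictions).
    """
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
    w1 = [[rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        return h, sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)

    def mean_loss():
        # Mean binary cross-entropy over the four XOR examples.
        total = 0.0
        for x, t in data:
            _, y = forward(x)
            total -= t * math.log(y + 1e-12) + (1.0 - t) * math.log(1.0 - y + 1e-12)
        return total / len(data)

    initial = mean_loss()
    for _ in range(epochs):
        order = data[:]
        rng.shuffle(order)
        for x, t in order:  # one SGD update per example
            h, y = forward(x)
            d_out = y - t  # dLoss/dz for sigmoid output + cross-entropy
            for j in range(hidden):
                # Backprop through tanh, using w2[j] before it is updated.
                d_h = d_out * w2[j] * (1.0 - h[j] ** 2)
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_h * x[0]
                w1[j][1] -= lr * d_h * x[1]
                b1[j] -= lr * d_h
            b2 -= lr * d_out
    predictions = [round(forward(x)[1]) for x, _ in data]
    return initial, mean_loss(), predictions


initial, final, predictions = train_xor()
print(f"loss {initial:.3f} -> {final:.3f}, predictions {predictions}")
```

Tiny XOR networks can stall in poor local minima depending on initialization, so if the predictions are not `[0, 1, 1, 0]`, trying another `seed`, more hidden units, or more epochs is a reasonable first step.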

Random seed. Adadelta in Keras. Optimizers include SGD, RMSprop, Adagrad, Adadelta, Adam, etc. Details: …
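Of the optimizers listed, Adadelta is the one mentioned most often here, so a minimal one-dimensional sketch of its update may be useful. It follows the original Adadelta rule, which keeps running averages of squared gradients and squared updates instead of using a hand-set learning rate; the function name, `rho=0.95`, `eps=1e-6`, and the toy objective are assumptions, and library versions may add a learning-rate multiplier.

```python
import math


def adadelta(grad_fn, x0, rho=0.95, eps=1e-6, steps=1000):
    """Minimize a 1-D function with the Adadelta rule (no explicit learning rate)."""
    x = x0
    avg_sq_grad = 0.0    # running average E[g^2]
    avg_sq_update = 0.0  # running average E[dx^2]
    for _ in range(steps):
        g = grad_fn(x)
        avg_sq_grad = rho * avg_sq_grad + (1 - rho) * g * g
        # Step size is the ratio of RMS(previous updates) to RMS(gradients).
        update = -math.sqrt(avg_sq_update + eps) / math.sqrt(avg_sq_grad + eps) * g
        avg_sq_update = rho * avg_sq_update + (1 - rho) * update * update
        x += update
    return x


x_final = adadelta(lambda x: 2.0 * x, x0=1.0)  # minimize f(x) = x**2
print(x_final)
```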
