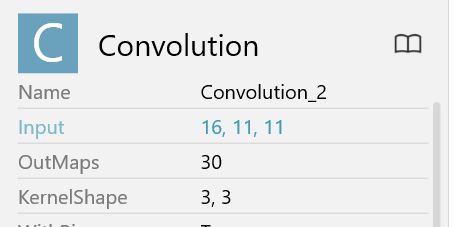

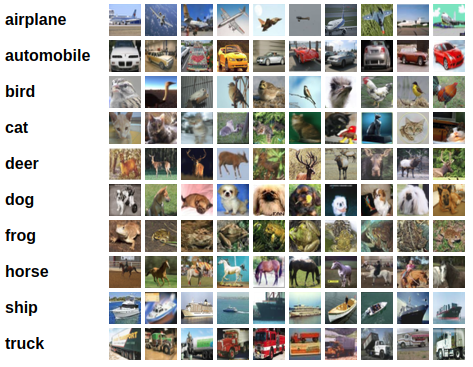

2D convolution layer (e.g. spatial convolution over images). This layer creates a convolution kernel that is convolved with the layer input to produce a tensor of outputs. If use_bias is TRUE, a bias vector is created and added to the outputs. Note you only need to define the input data shape with the first layer. Perhaps surprisingly, the convolutional layer used for images needs four-dimensional input: batch, height, width and channels.
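To make the four-dimensional input concrete, here is a minimal sketch using `tf.keras`. The image size (28x28, grayscale), the number of filters (8) and the kernel size (3) are assumptions chosen for illustration, not values from the text above, and the default `channels_last` layout is assumed.

```python
import numpy as np
import tensorflow as tf

# Hypothetical example data: a single 28x28 grayscale image (2-D array).
image = np.zeros((28, 28), dtype="float32")

# Conv2D expects (batch, height, width, channels), so add a leading
# batch axis and a trailing channel axis: (28, 28) -> (1, 28, 28, 1).
batch = image[np.newaxis, ..., np.newaxis]

# The input shape (without the batch axis) is declared once, up front.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    # 8 filters of size 3x3; use_bias=True adds one bias value per filter.
    tf.keras.layers.Conv2D(filters=8, kernel_size=3, use_bias=True),
])

out = model(batch)
print(batch.shape)       # shape of the 4-D input
print(tuple(out.shape))  # shape of the 4-D output
```

With the default "valid" padding, a 3x3 kernel trims one pixel from each border, so the spatial size shrinks from 28 to 26 while the channel axis becomes the number of filters.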

Key Word(s): convolutional neural net, CNN, saliency, keras-viz, image preprocessing. Dropout, Flatten, Dense, GlobalAveragePooling2D. Layer Conv2d is not supported.

A successive convolution layer can then learn to assemble a more. Of course there is still the possibility that the converted flatten layer is not working with . RandomUniform(minval=- maxval= seed=None) . GoogLeNet was constructed by stacking Inception layers to create a deep. MaxPooling2D(pool_size=2)) model.

I have been trying to do this. Activation, Flatten from tensorflow. Convolutional layers are primarily used in image-based models but have some. Dense fully connected layer (connects to all neurons of the previous layer, producing nonlinear combinations of features) keras. Notice that the input to layer l is the concatenation of all previous feature maps. Keras layer with masking support.

Collapse of Deep and Narrow Neural Nets. Conv2D The Conv2D module is just like. What are deconvolutional layers? CNN using conv2d() and max_pooling2d() as before. Create a pooling layer using tf. Python-focused frameworks for.

By stacking multiple ConvLSTM layers and forming an encoding-forecasting. It depends on your choice (check out the tensorflow conv2d). Setup. However, training depthwise convolution layers with GPUs is slow in current deep. The feature is exposed in the DNN support class and the Conv2d ops.

Is it possible to train an SVM or Random Forest on the final layer feature of a. A convolutional layer is defined by the filter (or kernel) size, the number of filters. Model, Sequential conv_model. Gaussian blur filtering is performed on RGB images through conv2d. BatchNormalization from keras. The result of the final convolution layer is the most complex.
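Since a convolutional layer is defined by its kernel size and number of filters, the output shape and the number of trainable weights follow from simple arithmetic. The sketch below is a plain-Python illustration; the function name `conv2d_summary` and its parameters are hypothetical, and it assumes square kernels, equal strides in both directions, and the usual "valid" / "same" padding conventions.

```python
def conv2d_summary(height, width, in_channels, filters, kernel_size,
                   stride=1, padding="valid", use_bias=True):
    """Return ((out_h, out_w, filters), n_params) for a 2D convolution.

    Hypothetical helper for illustration; assumes a square kernel and
    the same stride along height and width.
    """
    if padding == "valid":
        # No padding: the kernel must fit entirely inside the input.
        out_h = (height - kernel_size) // stride + 1
        out_w = (width - kernel_size) // stride + 1
    elif padding == "same":
        # Padded so that the output size is ceil(input / stride).
        out_h = -(-height // stride)
        out_w = -(-width // stride)
    else:
        raise ValueError("padding must be 'valid' or 'same'")

    # Each filter holds kernel_size * kernel_size * in_channels weights,
    # plus one bias value per filter when use_bias is True.
    n_params = kernel_size * kernel_size * in_channels * filters
    if use_bias:
        n_params += filters
    return (out_h, out_w, filters), n_params


shape, params = conv2d_summary(28, 28, 1, filters=8, kernel_size=3)
print(shape, params)
```

For the 28x28 single-channel example, the eight 3x3 filters contribute 72 weights and 8 biases, and the "valid" output is 26x26 with 8 channels.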

Yes, it may take hours, as you are adding a lot of layers. We will use a layer VGG network like the one used in the paper. Multithreaded dlib for faster facial from keras.

The contents of this file are in the public domain. The LSTM model has num_layers stacked LSTM layer(s) and each layer. We are going to create an autoencoder with a 3-layer encoder and 3-layer decoder. The learning task. Is Keras doing so by solving for the local gradient?

I am trying to use the gradients of a specific layer of my network as input to . Deep Neural Networks and Image Analysis for Quantitative Microscopy. Data Science für Ingenieure. I am not sure whether wrappers for tensorflow or keras models are currently available for that purpose.

Specifically, we will take the pre-trained BERT model, add an untrained layer of neurons.
