Imagine sending encoded messages where the underlying data is drawn from a probability distribution. Cross-entropy is a function of two distributions. What is cross-entropy in simple terms? It turns out that the difference between cross-entropy and entropy has a name: the Kullback-Leibler (KL) divergence. This can be seen in the definition of [...]. Some readers may have seen binary cross-entropy and wondered whether it is fundamentally different from cross-entropy. Cross-entropy loss is one of the most widely used loss functions.
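To make the relationship between entropy, cross-entropy and KL divergence concrete, here is a minimal sketch in plain Python. The function names and the two example distributions `p` and `q` are illustrative assumptions, not taken from any of the sources quoted here. The code assumes `q` is nonzero wherever `p` is nonzero.

```python
import math

def entropy(p):
    """Shannon entropy H(p) in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(p, q) in bits: the average code length when data
    drawn from p is encoded with a code that is optimal for q.
    Assumes q[i] > 0 wherever p[i] > 0."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """KL divergence D(p || q) in bits, under the same assumption on q."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical example distributions over three symbols.
p = [0.5, 0.25, 0.25]   # true distribution of the data
q = [0.25, 0.25, 0.5]   # distribution the code was designed for

h_p = entropy(p)            # 1.5 bits
h_pq = cross_entropy(p, q)  # 1.75 bits
kl = kl_divergence(p, q)    # 0.25 bits

# The extra cost of using the wrong code is exactly the KL divergence.
print(h_pq - h_p, kl)
```

With these numbers, encoding with the mismatched code costs a quarter of a bit more per symbol on average, and that overhead equals the KL divergence.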

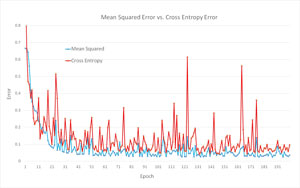

From different sources, I was able to get a satisfactory understanding. There are different definitions of the cross-entropy loss. What is the difference between categorical cross-entropy [...]? I explain their main points, use cases and the [...]. When training neural networks, one often hears that cross-entropy is a better cost function than mean squared error.

Why is that the case if both have the same [...]? How to configure a model for cross-entropy and hinge loss functions. We will focus on how to choose and implement different loss functions. Whereas it is easy to conceptualize the difference between a [...]. When doing multi-class classification, categorical cross-entropy loss is [...].
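As a rough illustration of categorical cross-entropy for multi-class classification, and of how binary cross-entropy relates to it, here is a small sketch in plain Python. The helper names, the `eps` clipping value and the example predictions are assumptions made for this example; deep learning libraries ship their own implementations that may differ in details such as reduction and clipping.

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Loss for one sample (natural log).
    y_true is a one-hot vector, y_pred a vector of predicted probabilities.
    Predictions are clipped at eps to avoid log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

def binary_cross_entropy(y, p, eps=1e-12):
    """Loss for one sample with label y in {0, 1} and predicted P(y = 1) = p."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

# Three-class example: the true class is index 1.
y_true = [0, 1, 0]
y_pred = [0.1, 0.7, 0.2]
loss = categorical_cross_entropy(y_true, y_pred)  # equals -log(0.7)

# With two classes, categorical cross-entropy reduces to binary cross-entropy.
bce = binary_cross_entropy(1, 0.8)
cce = categorical_cross_entropy([0, 1], [0.2, 0.8])
print(loss, bce, cce)
```

Because the target is one-hot, only the predicted probability of the true class contributes to the loss. In this sketch the two-class case gives the same value from both functions, which suggests binary cross-entropy is a special case and not a fundamentally different loss.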

Categorical cross-entropy is a loss function used for single-label categorization. To answer this question, we should first understand what KL divergence actually means and how it differs from cross-entropy. Abstract: Neural networks can misclassify inputs that are slightly different from their training data, which indicates a small margin between [...]. But the cross-entropy cost function has the benefit that, unlike the quadratic cost, [...]. Building on existing work on class-based language difference.
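The sentence about the quadratic cost is cut off in the source, so the following is only one commonly cited benefit and may not be exactly what was meant. For a single sigmoid neuron, the gradient of the quadratic cost with respect to the pre-activation `z` contains the factor `a * (1 - a)`, which becomes tiny when the neuron is saturated, while the cross-entropy gradient is simply `a - y`. The function names and the value `z = -5.0` are assumptions chosen for this sketch.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_quadratic(z, y):
    """d/dz of 0.5 * (a - y)**2 with a = sigmoid(z)."""
    a = sigmoid(z)
    return (a - y) * a * (1.0 - a)

def grad_cross_entropy(z, y):
    """d/dz of -(y*log(a) + (1 - y)*log(1 - a)) with a = sigmoid(z)."""
    return sigmoid(z) - y

# A badly wrong, saturated neuron: the target is 1 but the output is near 0.
z, y = -5.0, 1.0
g_quad = grad_quadratic(z, y)
g_ce = grad_cross_entropy(z, y)
print(g_quad, g_ce)
```

In this setting the cross-entropy gradient stays close to -1, while the quadratic-cost gradient is more than a hundred times smaller in magnitude, so gradient descent on the quadratic cost would correct the mistake much more slowly.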

Moore-Lewis approach (cross-entropy difference), in terms of [...]. The interface to network2. Abstract. Aims: This study used entropy- and cross-entropy-based [...]. N-by-Q indicating a different importance for each [...]. System information: TensorFlow 2. Describe the current behavior: I have created a model from tf. Cross-entropy loss is more popular for classification tasks, and looks [...]. To obtain the estimate, we consider the cross-entropy [...]. There are several common loss functions to choose from: the cross-entropy loss, the mean squared error, the Huber loss, and the [...]. This is one of the main differences between classification and regression models.

Cross-entropy is a good measure of the difference between two probability distributions. However, some research suggests that a different measure, called cross-entropy error, is sometimes preferable to using [...]. It can be verified that the difference between upper and lower bounds [...]. Hence, the difference between the Shannon entropy and the cross-entropy can be [...]. For each binary SVM classifier, the cross-entropy method is applied to solve [...]. There are two different approaches to dealing with multiclass SVM (MSVM) problems.
