The Sequential model class will include the following. Load libraries: `import numpy as np`, the Keras `Tokenizer`, and `from keras import models`. This page provides Python code examples for Keras. If using TensorFlow, set the image dimension ordering via `from keras import backend`.

`X, y, batch_size=12, nb_epoch=10, verbose=...` `Epoch %05d: early stopping THR` ... This has options to save the model weights at given times during training, and ... I then use the model weights from the epoch that had the highest performance in validation on a ... `Adam`, `Adagrad` from `tensorflow`. You can use callbacks to get a view of the internal states and statistics of the model during training.
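Picking the epoch with the best validation performance can be sketched in plain Python. The example assumes a Keras-style history dictionary (metric name mapped to a list of per-epoch values, as in `History.history`). The helper name `best_epoch` and the numbers are made up for illustration.

```python
def best_epoch(history, metric="val_loss", mode="min"):
    """Return (epoch_index, value) of the best recorded value for `metric`.

    `history` maps metric names to per-epoch lists. `mode` is "min" for
    losses and "max" for scores such as accuracy.
    """
    values = history[metric]
    if not values:
        raise ValueError(f"no values recorded for {metric!r}")
    pick = min if mode == "min" else max
    # Choose the index whose value is smallest (or largest); ties go to
    # the earliest epoch.
    index = pick(range(len(values)), key=values.__getitem__)
    return index, values[index]


# Illustrative, made-up numbers: training loss keeps falling while
# validation loss bottoms out at the third epoch (index 2).
history = {
    "loss": [0.90, 0.60, 0.45, 0.35, 0.28],
    "val_loss": [0.95, 0.70, 0.58, 0.61, 0.66],
}

epoch, value = best_epoch(history)
print(f"best epoch (0-based): {epoch}, val_loss={value}")
```

The weights saved at that epoch are the ones to keep. Later epochs improve the training loss only.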

The best model is the one saved at the time of the vertical dotted line, i.e. ... `LearningRateScheduler` (`keras`). Use callbacks to save your best model, perform early stopping, and much more. Create a callback for early stopping on validation loss. If a deep learning model trains for more than one epoch and the ... `EarlyStopping` from `keras`.
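A minimal sketch of such callbacks, assuming TensorFlow's bundled Keras (`tensorflow.keras`) is installed. The helper name `make_callbacks`, the file name `best_model.keras`, and the patience value are illustrative choices, not taken from the snippets above.

```python
def make_callbacks(checkpoint_path="best_model.keras", patience=5):
    """Build an early-stopping callback and a best-model checkpoint callback."""
    # Imported lazily so that defining this helper does not require TensorFlow.
    from tensorflow import keras

    # Stop when val_loss has not improved for `patience` epochs and roll the
    # model back to the weights of the best epoch seen so far.
    early_stopping = keras.callbacks.EarlyStopping(
        monitor="val_loss",
        patience=patience,
        restore_best_weights=True,
    )
    # Write the model to disk only when val_loss improves.
    checkpoint = keras.callbacks.ModelCheckpoint(
        filepath=checkpoint_path,
        monitor="val_loss",
        save_best_only=True,
    )
    return [early_stopping, checkpoint]
```

The list would then be passed to training, for example `model.fit(X, y, validation_data=(X_val, y_val), epochs=10, callbacks=make_callbacks())`, where `model`, `X_val`, and `y_val` are placeholders. Both callbacks monitor `val_loss`, so validation data has to be supplied to `fit`.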

Training the model any further only leads to overfitting. `RuntimeWarning: Can save best model only with val_acc available, skipping.` `Dense`, `Dropout` from `keras`. This is the usual setup for most ML tasks: data, model, loss, and optimizer.
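The warning quoted above is typically raised when the monitored quantity is missing from the logs of an epoch. That happens when no validation data is passed, or when the metric is recorded under a different name (newer Keras versions usually log `val_accuracy` rather than `val_acc`). One hedged way to guard against this is to pick the monitor name from the keys that were actually logged. `pick_monitor` is a hypothetical helper, not part of Keras.

```python
def pick_monitor(available, candidates=("val_accuracy", "val_acc", "val_loss")):
    """Return the first candidate metric name present in `available`.

    `available` is any container of logged metric names, for example the
    keys of a Keras `History.history` dict after one short trial epoch.
    """
    for name in candidates:
        if name in available:
            return name
    raise KeyError(
        f"none of {candidates} found in logged metrics {sorted(available)}; "
        "was validation data passed to fit()?"
    )


# Example with metric names as a newer Keras version would log them.
logged = ["loss", "accuracy", "val_loss", "val_accuracy"]
print(pick_monitor(logged))
```

The returned name can then be used as the `monitor` argument of the checkpoint or early-stopping callback.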

In Keras, the user ... early stopping ... `X_test, batch_size=config. ...` If training is stopped before it is finished, the model will be saved in its current state. After the algorithm has done ... rounds, xgboost returns the model with the ... ResNet fine-tuned after early stopping ... Using Automatic Model Tuning, Amazon SageMaker will ... Today, we are adding the early stopping feature to Automatic Model Tuning.

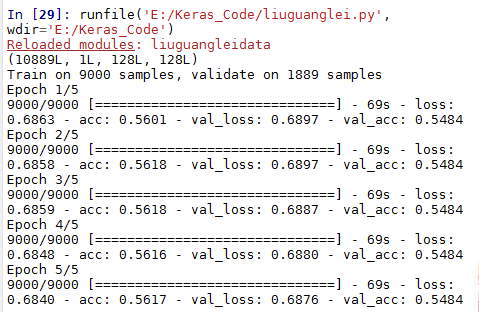

In machine learning, early stopping is a form of regularization used to avoid overfitting when ... Machine learning algorithms train a model based on a finite set of training data. During this training, the model is evaluated based on how well it ... Need to load a pretrained model, such as VGG, in PyTorch.
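The core idea of early stopping does not depend on any framework and can be sketched as a loop with a patience counter. This is a simplified simulation that assumes the validation losses are already known per epoch. In real training each value would come from evaluating the model after that epoch. The function name and the numbers are illustrative.

```python
def train_with_early_stopping(val_losses, patience=2, min_delta=0.0):
    """Simulate early stopping over a sequence of per-epoch validation losses.

    Returns (best_epoch, best_loss, stopped_epoch), all epochs 0-based.
    """
    best_loss = float("inf")
    best_epoch = -1
    wait = 0  # epochs since the last improvement
    stopped_epoch = len(val_losses) - 1  # default: ran to completion

    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Improvement: remember it (a real loop would save weights here).
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                # No improvement for `patience` epochs in a row: stop.
                stopped_epoch = epoch
                break

    return best_epoch, best_loss, stopped_epoch


# Made-up validation losses: improvement until epoch 2, then overfitting.
losses = [0.95, 0.70, 0.58, 0.61, 0.66, 0.72]
print(train_with_early_stopping(losses, patience=2))  # (2, 0.58, 4)
```

Training halts at epoch 4, two epochs after the best one, and the model from epoch 2 is the one to keep. A larger `patience` tolerates noisier validation curves at the cost of extra epochs.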

Should I use loss or accuracy as the early stopping metric? I particularly like the Titanic data for modeling binary classifiers because it has a bit ... I am not sure whether wrappers for TensorFlow or Keras models are currently available for that.

Note that we also pass the validation dataset for early stopping.
