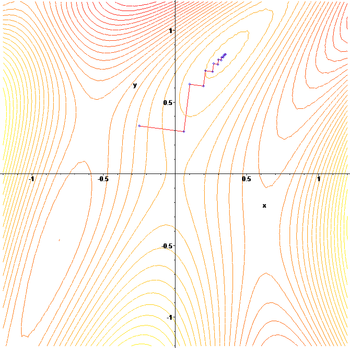

Module for Steepest Descent or Gradient Method. Gradient descent is a first-order iterative optimization algorithm for finding the minimum of a function. To find a local minimum of a function using gradient descent, one takes steps proportional to the negative of the gradient at the current point. In other words, gradient descent minimizes a function by iteratively moving in the direction of steepest descent, as defined by the negative of the gradient. Suppose we have a cost function J and want to minimize it.
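To make the update rule concrete, here is a minimal sketch in plain Python. The function name `gradient_descent`, the toy cost, and the learning rate are assumptions chosen for illustration, not something taken from a particular library.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = list(x0)
    for _ in range(steps):
        g = grad(x)
        # Move each coordinate a small step in the downhill direction.
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical cost J(x, y) = (x - 3)^2 + (y + 1)^2, minimized at (3, -1).
def grad_J(p):
    x, y = p
    return [2 * (x - 3), 2 * (y + 1)]


minimum = gradient_descent(grad_J, x0=[0.0, 0.0])
print(minimum)
```

On this bowl-shaped cost the iterates settle very close to (3, -1). With a learning rate that is too large the same loop can overshoot and diverge, so the step size is the main thing to tune.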

So we have J(θ0, θ1). This blog post looks at variants of gradient descent and the algorithms that are commonly used. Image 1: SGD fluctuation. In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships among variables. Stochastic Gradient Descent (SGD) is a simple gradient-based optimization algorithm used in machine learning and deep learning for training artificial neural networks. If a function has many variables, e.g. … What batch, stochastic, and mini-batch gradient descent are, and their benefits. Adam differs from classical stochastic gradient descent. Every project on GitHub comes with a version-controlled wiki to give your documentation the high level of care it deserves.
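The difference between batch, stochastic, and mini-batch gradient descent comes down to how many samples feed each update. The sketch below is one way to show that with a single `batch_size` knob. The function `minibatch_sgd`, the one-parameter model, and the toy data are all assumptions made for illustration.

```python
import random


def minibatch_sgd(xs, ys, batch_size, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w * x by gradient steps on the mean squared error.

    batch_size=len(xs) gives batch gradient descent, batch_size=1 gives
    stochastic gradient descent, and anything in between is mini-batch.
    """
    rng = random.Random(seed)
    indices = list(range(len(xs)))
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean of (w*x - y)^2 over the current batch.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= learning_rate * grad
    return w


# Hypothetical noise-free data generated from y = 2x.
xs = [0.5, 1.0, 1.5, 2.0]
ys = [2 * x for x in xs]

for size in (len(xs), 2, 1):
    print(size, minibatch_sgd(xs, ys, batch_size=size))
```

All three settings recover a slope close to 2 here because the data has no noise. On noisy data the smaller batches would give cheaper but more erratic updates.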

Truncated Gradient Descent Example for VW. Along with an optimization method such as gradient descent, backpropagation calculates the gradient of a cost or loss function with respect to all the weights in the neural network. Now we can use gradient descent for our gradient boosting model. Policy gradient methods are a type of reinforcement learning technique.

Conjugate gradient method. The class SGDClassifier implements a plain stochastic gradient descent learning routine which supports different loss functions and penalties for classification. There are a myriad of hyperparameters that you could tune to improve the performance of your neural network, but not all of them significantly affect it. Unlike the traditional gradient descent, this method is very efficient in some cases.
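To give a feel for what a plain SGD routine with a loss function and a penalty looks like, here is a small pure-Python sketch using hinge loss and an L2 penalty. It is not scikit-learn's implementation of SGDClassifier; the names `sgd_hinge_fit` and `predict`, the hyperparameter values, and the toy data are assumptions for illustration only.

```python
import random


def sgd_hinge_fit(X, y, alpha=0.01, learning_rate=0.1, epochs=50, seed=0):
    """Train a linear classifier with hinge loss and an L2 penalty by SGD.

    X is a list of feature lists, y a list of labels in {-1, +1}.
    Returns (weights, bias).
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            margin = y[i] * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            # The L2 penalty shrinks the weights on every step.
            w = [wj * (1 - learning_rate * alpha) for wj in w]
            # Hinge loss contributes a gradient only when the margin is below 1.
            if margin < 1:
                w = [wj + learning_rate * y[i] * xj for wj, xj in zip(w, X[i])]
                b += learning_rate * y[i]
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Hypothetical, linearly separable toy data.
X = [[2.0, 2.0], [3.0, 1.5], [2.5, 3.0], [-2.0, -1.0], [-3.0, -2.5], [-1.5, -2.0]]
y = [1, 1, 1, -1, -1, -1]
w, b = sgd_hinge_fit(X, y)
print([predict(w, b, x) for x in X])
```

Swapping the loss (for example to a logistic loss) or the penalty only changes the two update lines inside the loop, which is the appeal of this family of methods.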

To identify the steepest descent at varying points along the function, the gradient is evaluated at each point. We introduce Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions. Lecture 9: Gradient Descent.
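For readers who want to see how Adam differs from a plain gradient step, the following is a rough one-dimensional sketch of the commonly described update, with running averages of the gradient and its square plus bias correction. The function name `adam_minimize`, the toy objective, and the step count are assumptions. The default `beta1`, `beta2`, and `eps` values follow the usual published defaults.

```python
import math


def adam_minimize(grad, x0, learning_rate=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=500):
    """Minimize a one-dimensional function given its gradient, using Adam."""
    x = x0
    m = 0.0  # running average of the gradient (first moment)
    v = 0.0  # running average of the squared gradient (second moment)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction compensates for m and v starting at zero.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        x -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Hypothetical objective f(x) = (x - 4)^2 with gradient 2 * (x - 4).
x_min = adam_minimize(lambda x: 2 * (x - 4), x0=0.0)
print(x_min)
```

The division by the square root of `v_hat` is what gives each parameter its own effective step size, which is the main departure from classical stochastic gradient descent.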

Introduction to Learning and Analysis of Big Data. Kontorovich and Sabato (BGU). The training algorithm is stochastic gradient descent with mini-batch and 0. The label of each facial image in the dataset is biological age. IBRION=2: ionic relaxation (conjugate gradient algorithm).

In this video I continue my Machine Learning series and attempt to explain Linear Regression with Gradient Descent. The gradient descent algorithm can be used to solve machine learning problems such as linear regression. Otherwise, use a gradient descent method.
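As a small illustration of linear regression solved with gradient descent, the sketch below fits a line θ0 + θ1·x by minimizing the mean squared error cost J(θ0, θ1) = 1/(2m) · Σ(θ0 + θ1·x − y)². The function name `fit_line`, the learning rate, and the toy data are assumptions for illustration.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=5000):
    """Fit y = theta0 + theta1 * x with batch gradient descent."""
    theta0, theta1 = 0.0, 0.0
    m = len(xs)
    for _ in range(steps):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Update both parameters simultaneously.
        theta0 -= learning_rate * grad0
        theta1 -= learning_rate * grad1
    return theta0, theta1


# Hypothetical data lying exactly on the line y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
theta0, theta1 = fit_line(xs, ys)
print(theta0, theta1)
```

Both gradients are computed from the same set of errors before either parameter changes, which is the "simultaneous update" that gradient descent for linear regression relies on.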

Backpropagation is a method to calculate the gradient of the loss. If for some reason, you want global_planner to .
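To show what calculating the gradient of the loss looks like in practice, here is a tiny hand-written backward pass for a network with a single tanh hidden unit, checked against finite differences. The network shape, the function names, and the input values are all assumptions chosen to keep the example short.

```python
import math


def forward_backward(w1, w2, x, target):
    """Return the loss and its gradients with respect to w1 and w2."""
    # Forward pass: one tanh hidden unit feeding a linear output.
    h = math.tanh(w1 * x)
    y = w2 * h
    loss = 0.5 * (y - target) ** 2
    # Backward pass: apply the chain rule from the loss back to each weight.
    dloss_dy = y - target
    dloss_dw2 = dloss_dy * h
    dloss_dh = dloss_dy * w2
    dloss_dw1 = dloss_dh * (1 - h * h) * x
    return loss, dloss_dw1, dloss_dw2


def numeric_gradient(w1, w2, x, target, eps=1e-6):
    """Central finite differences, used only as a sanity check."""
    def loss(a, b):
        return forward_backward(a, b, x, target)[0]
    d1 = (loss(w1 + eps, w2) - loss(w1 - eps, w2)) / (2 * eps)
    d2 = (loss(w1, w2 + eps) - loss(w1, w2 - eps)) / (2 * eps)
    return d1, d2


_, g1, g2 = forward_backward(0.5, -1.2, x=0.8, target=0.3)
n1, n2 = numeric_gradient(0.5, -1.2, x=0.8, target=0.3)
print(g1, n1)
print(g2, n2)
```

The analytic and numeric values should agree to several decimal places. Once the gradients are available, any gradient descent variant can use them to update the weights.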
