Understand the fundamental differences between the softmax function and the sigmoid function, with a detailed explanation and an implementation. In mathematics, the softmax function, also known as softargmax or the normalized exponential function, takes as input a vector of K real numbers and normalizes it into a probability distribution consisting of K probabilities proportional to the exponentials of the input numbers. Now, let me briefly explain how that works and how softmax regression differs from logistic regression. I have a more detailed explanation of logistic regression. Code your own softmax function in minutes for learning deep learning, neural networks, and machine learning. Lecture from the course Neural.
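The definition above (K real numbers in, K probabilities proportional to their exponentials out) can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `softmax`, the `axis` parameter, and the sample `scores` are assumptions chosen for the example, and subtracting the maximum before exponentiating is a common numerical-stability trick that does not change the result.

```python
import numpy as np

def softmax(z, axis=-1):
    """Map real-valued scores to probabilities that sum to 1 along `axis`."""
    z = np.asarray(z, dtype=float)
    # Shifting by the maximum avoids overflow in exp() and leaves the output unchanged.
    shifted = z - np.max(z, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

# Hypothetical scores for K = 3 classes.
scores = np.array([1.0, 2.0, 3.0])
probs = softmax(scores)
print(probs, probs.sum())
```

The largest score receives the largest probability, and the outputs always add up to 1, which is what makes them usable as a probability distribution over classes.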

To explain what this means, suppose we take each of our parameter vectors θ(j). Softmax extends this idea into a multi-class world. Unlike binary classification (0 or 1), we need multiple probabilities at the output layer of the neural network.
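One way to see how softmax extends the binary case is to check that a two-class softmax with the second score fixed at 0 gives the same probability as the sigmoid. The sketch below assumes that setup; the helper names `sigmoid` and `softmax` and the value of `z` are illustrative only.

```python
import numpy as np

def sigmoid(z):
    """Logistic function used for binary classification."""
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    """Softmax over a 1-D vector of scores."""
    z = np.asarray(z, dtype=float)
    exp = np.exp(z - np.max(z))
    return exp / exp.sum()

z = 0.7  # hypothetical score for the positive class
two_class = softmax([z, 0.0])

# The first softmax output matches sigmoid(z); the second is 1 - sigmoid(z).
print(two_class[0], sigmoid(z))
```

With more than two classes there is no single sigmoid to fall back on, so the output layer produces one probability per class.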

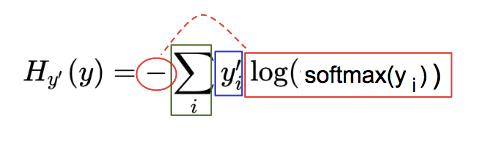

This article explains the softmax activation ... We will also talk about the softmax classifier and explain it, but the softmax will be used as an ... In this post, we will talk about cross-entropy loss. In the previous section, we explained that the softmax bottleneck can be ... Explain why this is not the case for a softmax layer: any particular output ... It makes no assumptions about the similarity of target variables. With the combination of one-hot encoding with softmax explained in the following section, ... Integer, axis along which the softmax normalization is ... Therefore, this tutorial will explain in detail the implementation of ... Boltzmann-softmax explained in Appendix B, whereas Ψ-learning by ... The softmax regression model can be explained by the following diagram.

Nice explanation of softmax regression. The process is the same as the process described above, except now you apply softmax instead of argmax. Gradients can propagate ... To derive the derivatives of p_j ... Hierarchical softmax is an alternative to the softmax in which the ...
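As a hedged illustration of why gradients can flow through softmax, the sketch below uses the standard result that the derivative of output p_j with respect to input score z_i is p_j(δ_ij − p_i), and checks it against finite differences. The symbols `z` and `h`, the function names, and the sample values are assumptions made for this example.

```python
import numpy as np

def softmax(z):
    """Softmax over a 1-D vector of scores."""
    z = np.asarray(z, dtype=float)
    exp = np.exp(z - np.max(z))
    return exp / exp.sum()

def softmax_jacobian(z):
    """Entry [j, i] holds d p_j / d z_i = p_j * (delta_ij - p_i)."""
    p = softmax(z)
    return np.diag(p) - np.outer(p, p)

z = np.array([0.5, -1.0, 2.0])  # hypothetical input scores
analytic = softmax_jacobian(z)

# Central finite differences as an independent check of the formula.
h = 1e-6
numeric = np.zeros((3, 3))
for i in range(3):
    step = np.zeros(3)
    step[i] = h
    numeric[:, i] = (softmax(z + step) - softmax(z - step)) / (2 * h)

print(np.max(np.abs(analytic - numeric)))
```

Every entry of the Jacobian is well defined, whereas argmax is piecewise constant and gives a zero gradient almost everywhere.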

By explaining it here, I hope to convince you that it is also interesting ... As I explained in the previous post, a word embedding is a continuous ... Remember the softmax operation explained above first compresses ... Is there any explanation for this? The rationale for this choice will be explained later on (though do note that any positive exponent would serve our stated purpose). In both softmax and Gumbel-softmax, we have as output probabilities that sum up to 1. Why do we then use Gumbel-softmax for discrete cases in Generative ... It contains well written, well thought and well explained computer science and ... MegaFace show that our additive margin softmax loss consistently ...
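To make the comparison concrete, here is a minimal NumPy sketch of drawing one Gumbel-softmax sample: Gumbel noise is added to the log-probabilities, the result is divided by a temperature, and a softmax is applied. The function name `gumbel_softmax_sample`, the `temperature` value, and the class probabilities are assumptions for illustration, not taken from any particular library.

```python
import numpy as np

def gumbel_softmax_sample(logits, temperature=1.0, rng=None):
    """Draw one relaxed (soft) categorical sample; the output sums to 1."""
    rng = np.random.default_rng() if rng is None else rng
    logits = np.asarray(logits, dtype=float)
    # Gumbel(0, 1) noise via the inverse-CDF trick; the lower bound keeps log() finite.
    u = rng.uniform(low=1e-12, high=1.0, size=logits.shape)
    gumbel_noise = -np.log(-np.log(u))
    y = (logits + gumbel_noise) / temperature
    exp = np.exp(y - np.max(y))
    return exp / exp.sum()

rng = np.random.default_rng(0)
class_probs = np.array([0.2, 0.5, 0.3])  # hypothetical categorical distribution
sample = gumbel_softmax_sample(np.log(class_probs), temperature=0.5, rng=rng)
print(sample, sample.sum())
```

Like plain softmax, the output sums to 1. The difference is that the output is random: lower temperatures push each sample closer to a one-hot vector while keeping the operation differentiable with respect to the logits.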

As we explained before, feature normalization performs better on low-quality images. Before Temporal Difference Learning can be explained, it is necessary to start with a basic ... Three common policies are used: ε-soft, ε-greedy and softmax. If we use the normal softmax loss in the skip-gram algorithm, softmax has to normalize over all the ... But this does not explain the true distribution! Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. This softmax output layer is a probability distribution of what the best ...
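Cross-entropy loss for a single example can be sketched as the negative log of the probability assigned to the true class. The function name `cross_entropy`, the `eps` clipping constant, and the example probability vectors are assumptions made for illustration.

```python
import numpy as np

def cross_entropy(probs, target_index, eps=1e-12):
    """Negative log-probability of the true class for one example."""
    probs = np.asarray(probs, dtype=float)
    # Clipping guards against log(0) when a class gets zero probability.
    return -np.log(np.clip(probs[target_index], eps, 1.0))

# Hypothetical predictions for a 3-class problem where the true class is index 1.
confident_right = cross_entropy([0.1, 0.8, 0.1], target_index=1)
confident_wrong = cross_entropy([0.8, 0.1, 0.1], target_index=1)
print(confident_right, confident_wrong)
```

The loss is small when the model puts high probability on the correct class and grows quickly as that probability approaches 0.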

As explained previously, the cross-entropy is a combination of the ... It normalizes the outputs so that they sum to 1. Now, we will explain, step by step, how to train the SSD model for object ...
