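Get unique labels and indices for batched labels for `tf.`… TensorFlow also provides a linear activation function. In a dense layer, set `activation` to `None` to maintain a linear activation. `use_bias` is a Boolean that controls whether the layer uses a bias, and `kernel_initializer` is the initializer function for the weight matrix.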
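To make `activation=None` concrete, here is a small pure-Python sketch, so no TensorFlow install is needed. `dense` is a hypothetical helper, not TensorFlow's API. It mirrors the computation a Keras `Dense` layer documents, `activation(dot(input, kernel) + bias)`, with the kernel stored as (input_dim, units).

```python
import math
from typing import Callable, List, Optional

def dense(x: List[float], kernel: List[List[float]], bias: List[float],
          activation: Optional[Callable[[float], float]] = None,
          use_bias: bool = True) -> List[float]:
    """Hypothetical pure-Python dense layer computing activation(x @ kernel + bias)."""
    units = len(kernel[0])  # kernel shape: (input_dim, units)
    out = []
    for j in range(units):
        z = sum(x[i] * kernel[i][j] for i in range(len(x)))
        if use_bias:
            z += bias[j]
        # activation=None means identity, so the output stays linear
        out.append(z if activation is None else activation(z))
    return out

x = [1.0, 2.0]
kernel = [[0.5, -1.0],
          [0.25, 1.0]]
bias = [0.1, -3.0]

linear_out = dense(x, kernel, bias)                      # [1.1, -2.0]
tanh_out = dense(x, kernel, bias, activation=math.tanh)  # tanh applied elementwise
no_bias_out = dense(x, kernel, bias, use_bias=False)     # [1.0, 1.0]
```

With `activation=None` the layer is just an affine map. Passing any callable (here `math.tanh`) squashes each unit's output.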

Why is there no mention of `contrib` (for example `dnn_activation_fn=tf.`…)? If a NN can approximate the dependency in the data using a linear neuron, then it is better to use one. See "Fast and Accurate Deep Network Learning by …". For regression, the output layer uses a linear activation. The reason is that we can use `tf.`…
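Here is a minimal sketch of the "single linear neuron" case: one weight, one bias, identity activation, trained by plain batch gradient descent on mean squared error. The function name, learning rate and epoch count are illustrative assumptions, not taken from any library. Because the output is linear, the neuron can hit targets far outside (0, 1), which a sigmoid output could not.

```python
import random

def fit_linear_neuron(xs, ys, lr=0.05, epochs=2000, seed=0):
    """Fit y ~ w*x + b with one linear neuron by batch gradient descent on MSE (hypothetical helper)."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1.0, 1.0), 0.0
    n = len(xs)
    for _ in range(epochs):
        # Linear output: the prediction is the raw weighted sum, no squashing
        preds = [w * x + b for x in xs]
        errs = [p - y for p, y in zip(preds, ys)]
        # Gradients of mean squared error with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errs, xs))
        grad_b = 2.0 / n * sum(errs)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [i / 10 for i in range(-20, 21)]
ys = [3.0 * x - 7.0 for x in xs]  # targets well outside (0, 1)
w, b = fit_linear_neuron(xs, ys)
```

If the data really is linear, this single neuron recovers the slope and intercept, and nothing deeper is needed.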

Up till now we have built a linear regression and a logistic regression model. Because the values are 0… Note the logarithmic y axis in the left plot and the linear y axes in the others. Welcome, everyone, to an updated deep learning with Python and TensorFlow tutorial mini-series. A hidden layer is added to the model with `Dense(12, activation=tf.nn.relu)`.
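As a rough picture of what that model computes, here is a pure-Python forward pass: a hidden layer of 12 ReLU units followed by a single linear output unit. The weights are random placeholders and `layer` is a hypothetical helper (weights stored per unit), not Keras code.

```python
import random

def relu(z: float) -> float:
    return max(0.0, z)

def layer(x, weights, biases, activation=None):
    """Hypothetical fully connected layer; weights[j] holds the incoming weights of unit j."""
    outs = []
    for w_j, b_j in zip(weights, biases):
        z = sum(wi * xi for wi, xi in zip(w_j, x)) + b_j
        outs.append(z if activation is None else activation(z))
    return outs

rng = random.Random(42)
n_in, n_hidden = 3, 12
W1 = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [0.0] * n_hidden
W2 = [[rng.gauss(0, 1) for _ in range(n_hidden)]]  # one output unit
b2 = [0.0]

x = [0.5, -1.2, 2.0]
hidden = layer(x, W1, b1, activation=relu)  # plays the role of Dense(12, activation=tf.nn.relu)
y = layer(hidden, W2, b2)                   # linear output, no activation
```

The ReLU layer makes the model nonlinear, while the linear output keeps the prediction unbounded, which is what regression needs.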

What are the benefits of using rectified linear units vs. the typical sigmoid activation function? We use the Adam optimizer with the learning rate set to 0.… Getting started with neural networks for regression and TensorFlow. An activation function can be any function, such as sigmoid, hyperbolic tangent, linear, etc. A good way to visualize this is by messing around with TensorFlow. Can a NN with only linear activation functions produce anything more than a composition of linear functions?
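On that last question: no. Stacking layers with linear activations still gives a linear (affine) map, because W2(W1x + b1) + b2 = (W2W1)x + (W2b1 + b2). The sketch below checks this numerically with hand-picked weights. The helper names are made up for illustration.

```python
def matmul(a, b):
    """Matrix product of nested lists a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def matvec(m, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

def add(u, v):
    return [p + q for p, q in zip(u, v)]

W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]  # layer 1: 2 inputs -> 3 units
b1 = [0.5, 0.0, -1.0]
W2 = [[1.0, -1.0, 2.0]]                     # layer 2: 3 units -> 1 output
b2 = [0.25]

def two_linear_layers(x):
    # Both layers use the identity ("linear") activation
    return add(matvec(W2, add(matvec(W1, x), b1)), b2)

# The equivalent single layer: W = W2 @ W1, b = W2 @ b1 + b2
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

def one_linear_layer(x):
    return add(matvec(W, x), b)
```

Any depth of purely linear layers collapses the same way, which is why a nonlinearity such as ReLU or sigmoid is needed between layers.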

The imports are `import tensorflow as tf` and `from tensorflow import nn, layers`. The two most common supervised learning tasks are linear regression and linear classification. So, our linear regression example looks as follows: construct a linear model. TL;DR: ReLU stands for rectified linear unit, and is a type of activation function.
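One commonly cited benefit of ReLU over sigmoid is gradient flow. The sigmoid's derivative is at most 0.25, so multiplying it through many layers shrinks the gradient quickly, while an active ReLU passes a derivative of exactly 1. This is a deliberately simplified sketch: it multiplies only the activation derivatives and ignores the weights, and `chained_grad` is a hypothetical helper.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)  # peaks at 0.25 when z == 0

def relu(z: float) -> float:
    return max(0.0, z)

def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0  # choose 0 at the kink z == 0

def chained_grad(grad_fn, z: float, depth: int) -> float:
    """Product of activation derivatives through `depth` stacked units (weights ignored)."""
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(z)
    return g

sig_10 = chained_grad(sigmoid_grad, 0.0, 10)  # 0.25 ** 10, about 9.5e-07
relu_10 = chained_grad(relu_grad, 1.0, 10)    # 1.0
```

The flip side is visible in `relu_grad` too: a unit stuck at negative inputs passes no gradient at all.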

For example, the following creates a convolution layer (for a CNN) with `tf.`… Instead of having only one linear combination, we have two: one for each … Define a loss function: `loss = tf.`… Create the forward and backward cells: `lstm_fw_cell = tf.….BasicLSTMCell(dims, forget_bias=…)`. Dimitrios Gouliermis, Heidelberg.
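To see what `forget_bias` does, here is a single-unit LSTM step in pure Python. As far as I know, it follows the update used by TensorFlow's `BasicLSTMCell`: the gate pre-activations are split in the order i, j, f, o, and `forget_bias` is added to f before its sigmoid. `lstm_step` is a hypothetical helper, and the value 1.0 is the documented default, not necessarily the value used in the snippet above.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h, c, W, b, forget_bias=1.0):
    """One step of a single-unit LSTM cell (hypothetical helper).

    W holds four (w_x, w_h) weight pairs and b four biases, in gate order i, j, f, o.
    forget_bias is added to the forget gate f before its sigmoid, so an untrained
    cell starts out keeping most of its memory c.
    """
    i, j, f, o = (w_x * x + w_h * h + b_k for (w_x, w_h), b_k in zip(W, b))
    new_c = c * sigmoid(f + forget_bias) + sigmoid(i) * math.tanh(j)
    new_h = math.tanh(new_c) * sigmoid(o)
    return new_h, new_c

zero_W = [(0.0, 0.0)] * 4
zero_b = [0.0] * 4
h1, c1 = lstm_step(x=0.5, h=0.0, c=1.0, W=zero_W, b=zero_b, forget_bias=1.0)
h0, c0 = lstm_step(x=0.5, h=0.0, c=1.0, W=zero_W, b=zero_b, forget_bias=0.0)
```

With zero weights, `forget_bias=1.0` keeps about 73% of the old cell state (sigmoid(1)), versus 50% with no forget bias. A bidirectional setup simply runs one such cell forward and a second cell backward over the sequence.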

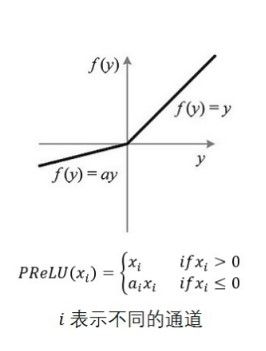

To start a NN model with Keras, we use `Sequential()`. The activation function is `relu`, short for rectified linear unit (ReLU). On inputs where a unit is active (positive pre-activation), the ReLU transformation corresponds to a linear transformation.
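A quick way to see the "linear where active" point: ReLU is the identity for positive inputs, but additivity f(a + b) = f(a) + f(b) breaks once the inputs straddle zero. That kink is what makes ReLU a nonlinearity overall.

```python
def relu(z: float) -> float:
    """Rectified linear unit: identity for z > 0, zero otherwise."""
    return z if z > 0 else 0.0

# On the active region (z > 0) ReLU is the identity, i.e. a linear map
active = [0.5, 2.0, 10.0]
assert all(relu(z) == z for z in active)

# Across the kink at 0, additivity fails, so ReLU is not linear overall
a, b = 1.0, -1.0
print(relu(a + b), relu(a) + relu(b))  # 0.0 1.0
```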

I am trying to port some code from Lua to TensorFlow. What is the equivalent function for `nn.`…? Is there any replacement or similar function to get the …? TensorFlow already has the separable convolution that MobileNet needs built in, but …
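The reason MobileNet relies on separable convolutions is the parameter savings. A depthwise separable convolution replaces one k x k convolution with a depthwise k x k convolution (one filter per input channel, times a depth multiplier), followed by a 1 x 1 pointwise convolution. The helpers below are hypothetical and count weights only (biases excluded). `depth_multiplier` mirrors the parameter of the same name on Keras's `SeparableConv2D`.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (bias excluded)."""
    return k * k * c_in * c_out

def separable_conv_params(k: int, c_in: int, c_out: int, depth_multiplier: int = 1) -> int:
    """Weights of a depthwise k x k conv followed by a 1 x 1 pointwise conv (biases excluded)."""
    depthwise = k * k * c_in * depth_multiplier
    pointwise = c_in * depth_multiplier * c_out
    return depthwise + pointwise

standard = conv_params(3, 32, 64)             # 18432
separable = separable_conv_params(3, 32, 64)  # 288 + 2048 = 2336
```

For a 3 x 3 kernel mapping 32 to 64 channels, that is roughly 8x fewer weights.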
