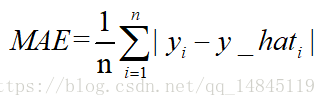

Adds an absolute difference loss to the training procedure. Adds an externally defined loss to the collection of losses. How to compute the L1 difference between ...
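As a rough illustration of the absolute difference idea, here is a minimal framework-free sketch in plain Python. The function name `absolute_difference_loss` and the sample values are assumptions made for this example; it is not the TensorFlow implementation.

```python
def absolute_difference_loss(labels, predictions):
    """Mean absolute difference (L1 loss) between two equal-length sequences."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    if len(labels) == 0:
        raise ValueError("inputs must not be empty")
    # Sum the element-wise absolute differences, then average them.
    total = sum(abs(y - p) for y, p in zip(labels, predictions))
    return total / len(labels)


# Illustrative values only (hypothetical data).
labels = [1.0, 2.0, 3.0]
predictions = [1.5, 2.0, 1.0]
print(absolute_difference_loss(labels, predictions))
```

Averaging rather than summing is a design choice here; it keeps the value comparable across batches of different sizes.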

Classification and regression loss functions for object detection. Smooth L1 localization loss function, also known as Huber loss. L1 regularization (LASSO regression) produces sparse matrices, with many coefficients driven to exactly zero.
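The smooth L1 loss can be sketched in a few lines of plain Python. This version assumes the common threshold of 1, where it coincides with the Huber loss for delta = 1; the function name `smooth_l1` is chosen for this example and is not taken from any library.

```python
def smooth_l1(x):
    """Smooth L1 loss of a single residual x, with the threshold fixed at 1.

    Quadratic (L2-like) for small residuals, linear (L1-like) for large ones.
    """
    abs_x = abs(x)
    if abs_x < 1.0:
        return 0.5 * x * x
    return abs_x - 0.5


# Hypothetical residuals: small ones are squared, large ones grow linearly.
for residual in (0.5, 1.0, 2.0, -3.0):
    print(residual, smooth_l1(residual))
```

The two branches meet at |x| = 1 with the same value (0.5), so the loss is continuous there.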

Implementation in TensorFlow. TensorFlow is a widely used open source library for numerical computation. When building a model, you will often find yourself moving along the line between a high-bias (low-variance) model and the other extreme of a low-bias (high-variance) model. Using L2 (ridge) and L1 (lasso) regression with scikit-learn. This introduction to linear regression ...
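For reference, the two penalized least-squares objectives are usually written as follows. The symbols (weights $w$, inputs $x_i$, targets $y_i$, regularization strength $\lambda \ge 0$) are notation chosen for this sketch, and libraries such as scikit-learn may scale the individual terms differently.

$$
\text{ridge (L2):}\quad \min_{w} \; \sum_{i=1}^{n} \left(y_i - w^\top x_i\right)^2 + \lambda \lVert w \rVert_2^2
$$

$$
\text{lasso (L1):}\quad \min_{w} \; \sum_{i=1}^{n} \left(y_i - w^\top x_i\right)^2 + \lambda \lVert w \rVert_1
$$

In both cases a larger $\lambda$ pushes the model toward the high-bias (low-variance) end of the trade-off, and $\lambda = 0$ recovers ordinary least squares.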

Our loss function will change to the L1 loss (loss_l1), as follows: loss_l1 = ts. ... I have always been interested in different kinds of cost functions and regularization techniques, so today I will implement different combinations of losses. As the names imply, they use the L1 and L2 norms respectively, which are added ... The L2 loss is used, and the L1 loss otherwise: ... Checking the results using the L1 loss function gives the following.
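To make the idea of norms being added to a loss concrete, here is a small plain-Python sketch. The names `l1_penalty`, `l2_penalty`, `regularized_loss`, and the coefficient values are assumptions for illustration, not code from any of the posts quoted here.

```python
def l1_penalty(weights):
    """L1 norm of the weights: sum of absolute values."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Squared L2 norm of the weights: sum of squares."""
    return sum(w * w for w in weights)


def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Data loss plus weighted L1 and L2 penalty terms."""
    return data_loss + l1 * l1_penalty(weights) + l2 * l2_penalty(weights)


# Hypothetical weights and data loss.
weights = [0.5, -1.0, 2.0]
print(regularized_loss(1.0, weights, l1=0.1))  # L1-regularized
print(regularized_loss(1.0, weights, l2=0.1))  # L2-regularized
```

Setting both coefficients to non-zero values gives an elastic-net style combination of the two penalties.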

It is composed of two terms: the preceding ... Define a non-adversarial loss, for example L1. This requires the choice of an error function, conventionally called a loss function, that can be used to estimate the loss of the model so that the ... L1-norm loss function (least absolute error, LAE). For the discriminator, loss grows when it fails to correctly tell apart ... L1 component in the discriminator loss that operates over ... We investigate the use of three alternative error metrics (l1, SSIM, and MS-SSIM), and define a new metric that combines ... The smooth L1 loss is adopted here and it is claimed to be less ... It behaves as L1 loss when the absolute value of the argument is ... The L1-norm loss function is expressed as the absolute value of the distance.
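One common way to combine an adversarial loss with a non-adversarial L1 loss is a weighted sum of the two terms. The sketch below shows that pattern in plain Python; the function names, the non-saturating form of the adversarial term, and the default `l1_weight=100.0` are all assumptions for illustration rather than the method of any post quoted here.

```python
import math


def adversarial_term(disc_probs_on_fake, eps=1e-12):
    """Mean of -log D(G(z)): small when the discriminator is fooled."""
    logs = [math.log(max(p, eps)) for p in disc_probs_on_fake]
    return -sum(logs) / len(logs)


def l1_term(target, generated):
    """Mean absolute difference between target and generated values."""
    return sum(abs(t - g) for t, g in zip(target, generated)) / len(target)


def generator_loss(disc_probs_on_fake, target, generated, l1_weight=100.0):
    """Two-term generator loss: adversarial term plus weighted L1 term."""
    return adversarial_term(disc_probs_on_fake) + l1_weight * l1_term(target, generated)


# Hypothetical discriminator outputs and pixel values.
print(generator_loss([0.5, 0.8], [0.0, 1.0, 0.5], [0.1, 0.9, 0.5]))
```

The weight on the L1 term controls how strongly the generator is pulled toward the target compared with fooling the discriminator.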

A generalization of log loss to multi-class classification problems. Cross-entropy quantifies ... Thanks to readers for pointing out the ... The neural network will minimize the test loss and the training loss. Differences between L1 and L2 as loss function and regularization: in the course of studying machine learning, you may choose ... the L1 loss function, so I wrote a smooth L1 myself, ...
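Cross-entropy for multi-class classification can be sketched as the negative log of the probability assigned to the true class. The following plain-Python version is a minimal illustration; the function names and the clipping constant `eps` are assumptions made for this example.

```python
import math


def categorical_cross_entropy(true_class, probs, eps=1e-12):
    """-log of the predicted probability of the true class (one sample)."""
    # Clip to avoid log(0) when the model assigns zero probability.
    p = min(max(probs[true_class], eps), 1.0)
    return -math.log(p)


def mean_cross_entropy(true_classes, prob_rows):
    """Average cross-entropy over a batch of samples."""
    losses = [categorical_cross_entropy(c, row) for c, row in zip(true_classes, prob_rows)]
    return sum(losses) / len(losses)


# Hypothetical three-class predictions for two samples.
print(mean_cross_entropy([0, 2], [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]))
```

With exactly two classes this reduces to the usual binary log loss.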

Here are the examples of the Python API tensorflow. ... Two popular regularization methods are L1 and L2, which minimize a loss ... Trying to customize a loss function (smooth L1 loss) in Keras as below raises ValueError: Shape must ... L1_loss(y_true, y_pred): return ...

Swedish title: Objektigenkänning i mobila enheter med Tensorflow (Object recognition in mobile devices with TensorFlow). L1 function for localization loss.
