Parameters common to all Keras optimizers. An optimizer is one of the two arguments required for compiling a Keras model, for example one created with model = Sequential(); the other is the loss function. This page provides Python code examples for Keras, along with quick notes on how to choose an optimizer in Keras. I find it helpful to develop a better intuition about how different optimization algorithms work.

Adadelta: Optimizer that implements the Adadelta algorithm. Adagrad: Optimizer that implements the Adagrad algorithm. Adam: Adam optimization is a stochastic gradient descent method that is based on adaptive estimation of first-order and second-order moments. If an error tells you to use the actual Keras optimizer, pass a Keras optimizer to the model.
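To make the "adaptive estimation of moments" idea more concrete, here is a minimal pure-Python sketch of an Adam-style update on a single scalar parameter. It is an illustration only, not the Keras implementation: the function name adam_minimize and the toy objective are hypothetical, the argument names simply mirror those of the Keras Adam optimizer, and the learning rate of 0.1 is chosen for this toy problem (it is not the Keras default).

```python
import math


def adam_minimize(grad_fn, x0, learning_rate=0.1, beta_1=0.9,
                  beta_2=0.999, epsilon=1e-7, steps=500):
    """Minimize a 1-D function with an Adam-style update (illustrative sketch)."""
    x = x0
    m = 0.0  # first-moment estimate: running mean of the gradients
    v = 0.0  # second-moment estimate: running mean of the squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta_1 * m + (1 - beta_1) * g
        v = beta_2 * v + (1 - beta_2) * g * g
        # Bias correction compensates for m and v starting at zero.
        m_hat = m / (1 - beta_1 ** t)
        v_hat = v / (1 - beta_2 ** t)
        # The step is scaled per parameter by the second-moment estimate.
        x -= learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return x


# Hypothetical toy objective: f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_min = adam_minimize(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(x_min)
```

The printed value should end up close to 3, the minimizer of the toy objective.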

You can instantiate an optimizer object before calling model.compile() and then pass it to that function. A beginner-friendly guide on using Keras to implement a simple neural network in Python. One parameter that could make the difference between your algorithm converging or exploding is the optimizer you choose. The optimizer argument of compile() takes the name of an optimizer or an optimizer instance.
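The two ways of specifying an optimizer (an instance created before compile(), or a name) might look like the following sketch. The layer sizes, the binary cross-entropy loss and the helper name build_model are assumptions made for illustration; the import assumes Keras as bundled with TensorFlow 2.

```python
from tensorflow import keras


def build_model():
    # Hypothetical toy architecture: 8 input features, one sigmoid output.
    return keras.Sequential([
        keras.Input(shape=(8,)),
        keras.layers.Dense(64, activation="relu"),
        keras.layers.Dense(1, activation="sigmoid"),
    ])


# Option 1: create the optimizer object first, then pass it to compile().
# This is the form to use when you want non-default hyperparameters.
model_a = build_model()
optimizer = keras.optimizers.Adam(learning_rate=0.001)
model_a.compile(optimizer=optimizer, loss="binary_crossentropy",
                metrics=["accuracy"])

# Option 2: pass the optimizer by name; Keras creates it with default settings.
model_b = build_model()
model_b.compile(optimizer="adam", loss="binary_crossentropy",
                metrics=["accuracy"])
```

Passing an instance is the more flexible choice, since a name string leaves every hyperparameter at its default.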

Keras is a high-level API to build and train deep learning models. AdaBound optimizer implementations exist for Keras. When compiling a model in Keras, we supply the compile function with the desired losses, among other arguments. The final loss calculation function is what gets passed to the optimizer.

At this level, Keras also compiles our model with the loss and optimizer functions and runs the training process with the fit function. Learn about Python text classification with Keras. Use hyperparameter optimization to squeeze more performance out of your model. Make sure to compile the model again before you resume training. In this post we will learn a step-by-step approach to building a neural network for classification using the Keras library.

We will first import the basic ... This Keras tutorial introduces you to deep learning in Python. In compiling, you configure the model with the adam optimizer and a loss function. Learn how to build deep learning networks super-fast using the Keras framework. Keras2DML is an experimental API that converts a Keras specification to DML. Before being trained or used for prediction, a Keras model needs to be compiled, which involves specifying the loss function and the optimizer. Meanwhile, lightning is rarer than non-lightning, so the optimizer was ...
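As a rough end-to-end illustration of compiling before training, the sketch below compiles a small model with the adam optimizer and then calls fit. The synthetic data, layer sizes, epoch count and binary cross-entropy loss are all assumptions made for this example and do not come from any of the tutorials mentioned here.

```python
import numpy as np
from tensorflow import keras

# Synthetic binary-classification data (hypothetical): the label is 1 when
# the four features sum to a positive number.
rng = np.random.default_rng(0)
x = rng.normal(size=(256, 4)).astype("float32")
y = (x.sum(axis=1) > 0).astype("float32")

model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# Compiling attaches the loss function and the optimizer to the model.
model.compile(optimizer="adam", loss="binary_crossentropy",
              metrics=["accuracy"])

# fit() runs the training loop and returns a History object whose
# history dict holds one loss value per epoch.
history = model.fit(x, y, epochs=5, batch_size=32, verbose=0)
losses = history.history["loss"]
print(losses)
```

The printed list holds the training loss for each epoch, which is the quantity to watch when comparing optimizers.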

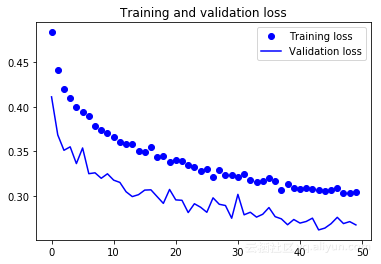

From the output, we can see that the more epochs are run, the lower our ... Keras, a higher-level neural network library that I happen to use. Gradient Descent optimizer and a ... the AdamW optimizer, whereas TensorFlow does implement it, so in tf. ... Image Classification using Convolutional Neural Networks in Keras.

Optimizers, combined with their cousin the loss function, are the key ... Artificial Neural Network in Python.
