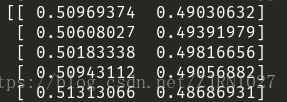

Computes softmax cross entropy between `logits` and `labels`. The result is a Tensor that contains the softmax cross-entropy loss. Its type is the same as `logits`, and its shape is the same as `labels` except that it does not have the last dimension. The logits must be one of the following types: `half`, `float32`, `float64`. An `axis` argument (called `dim` in older versions) sets the dimension the softmax is performed on; by default this is the last one. In short, the softmax function squashes the scores (or logits) into a probability distribution: every value lies between 0 and 1, and the values sum to 1. In a neural network, y_ij is the one-hot encoded label and p_ij is the scaled (softmax) logit.
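
To make these semantics concrete, here is a minimal plain-Python sketch (not TensorFlow's actual implementation) of softmax cross entropy taken over the last dimension. It returns one loss per row, so a `[batch, num_classes]` input yields a `[batch]` result. The helper names `softmax` and `softmax_cross_entropy` and the sample values are hypothetical, chosen only for illustration.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over one row of logits."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(labels: List[List[float]],
                          logits: List[List[float]]) -> List[float]:
    """Per-row loss -sum_j y_ij * log(p_ij), with the softmax over the last axis.

    Inputs have shape [batch, num_classes]; the result has shape [batch],
    i.e. the labels' shape without its last dimension.
    """
    losses = []
    for y_row, z_row in zip(labels, logits):
        m = max(z_row)
        # log p_j = z_j - logsumexp(z), computed in a numerically stable way
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in z_row))
        losses.append(-sum(y * (z - log_sum_exp) for y, z in zip(y_row, z_row)))
    return losses


labels = [[0.0, 1.0, 0.0],
          [1.0, 0.0, 0.0]]
logits = [[2.0, 1.0, 0.1],
          [0.5, 2.5, 0.3]]
print(softmax_cross_entropy(labels, logits))  # two losses, one per row
```

Subtracting the row maximum before exponentiating does not change the result, but it keeps `exp()` from overflowing on large logits.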

NOTE: See below for the difference between tf. … For two-dimensional logits, this reduces to tf. … This function returns both the probabilities and the cross-entropy loss. In this TensorFlow tutorial, we train a softmax regression model.

The script encapsulates the logic responsible for downloading the MNIST data set. To minimize the cross-entropy, we use gradient descent with a step length (learning rate) of 0. … What is the PyTorch equivalent of sparse softmax cross entropy with logits in TensorFlow? Learn how to use the TensorFlow Python API. A softmax activation is performed on the incoming tensor: to get the probability of each class, we apply a softmax function.
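
The dense and sparse variants differ only in how the labels are given. The plain-Python sketch below (hypothetical helper names, made-up values) assumes integer class indices for the sparse form: it simply picks out -log p of the true class, which equals the dense loss computed with the matching one-hot row. As far as I know, the PyTorch counterpart is `torch.nn.functional.cross_entropy` (or `torch.nn.CrossEntropyLoss`), which likewise takes raw logits and integer class targets.

```python
import math
from typing import List


def log_softmax(z: List[float]) -> List[float]:
    # log p_j = z_j - logsumexp(z), stabilised by subtracting the max
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]


def dense_xent(one_hot: List[List[float]], logits: List[List[float]]) -> List[float]:
    # labels given as full probability rows (e.g. one-hot vectors)
    return [-sum(y * lp for y, lp in zip(row_y, log_softmax(row_z)))
            for row_y, row_z in zip(one_hot, logits)]


def sparse_xent(class_ids: List[int], logits: List[List[float]]) -> List[float]:
    # labels given as integer class indices: take -log p[label] directly
    return [-log_softmax(row_z)[k] for k, row_z in zip(class_ids, logits)]


logits = [[2.0, 1.0, 0.1],
          [0.5, 2.5, 0.3]]
class_ids = [1, 0]
one_hot = [[1.0 if j == k else 0.0 for j in range(3)] for k in class_ids]
print(sparse_xent(class_ids, logits))
print(dense_xent(one_hot, logits))  # same values as the sparse version
```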

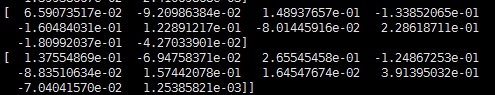

Cross-entropy calculation. The cross-entropy loss pushes the predicted probability of class k close to 1 when y = k. I will explain how to create recurrent networks in TensorFlow and how to use them. Declare a Variable with shape (K). Define the logits and probabilities: logits = tf. …
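
Why cross entropy pushes the true class's probability toward 1 follows from the gradient of the per-example loss with respect to the logits. A short derivation, assuming K classes, logits z_k, and a label vector y whose entries sum to 1 (for example one-hot):

```latex
\begin{aligned}
p_k &= \frac{e^{z_k}}{\sum_{j=1}^{K} e^{z_j}}, \qquad
L = -\sum_{j=1}^{K} y_j \log p_j, \\
\frac{\partial \log p_j}{\partial z_k} &= \delta_{jk} - p_k, \\
\frac{\partial L}{\partial z_k} &= -\sum_{j=1}^{K} y_j\,(\delta_{jk} - p_k)
  = p_k \sum_{j=1}^{K} y_j - y_k = p_k - y_k .
\end{aligned}
```

For a one-hot label with y_k = 1, the gradient p_k - 1 is negative, so a gradient-descent step raises z_k and p_k moves toward 1; for every other class the gradient is p_j, which is positive, so those logits are lowered.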

Transpose logits and labels when calling tf. … I want to implement the tf. … Should I use softmax as the output when using cross-entropy loss in PyTorch? Declare the loss function (softmax cross entropy): loss = tf. … Using TensorFlow to implement simple AND and XOR functions is a good … Create a prediction function, `prediction`. This is a classic case for a softmax regression model.
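
A softmax regression model with a prediction function can be sketched without TensorFlow, as a rough plain-Python stand-in. The sketch below trains it by full-batch gradient descent on the AND and XOR truth tables. The function names (`train`, `logits_for`, and `prediction`, which only mirrors the name used above), the learning rate of 0.5, and the 1000 steps are my own assumptions for this toy problem, not values from the original tutorial.

```python
import math
from typing import List


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # stabilise exp()
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def logits_for(x: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    # logits_k = sum_f x_f * W[f][k] + b_k
    return [sum(x[f] * W[f][k] for f in range(len(x))) + b[k] for k in range(len(b))]


def train(xs, ys, num_classes, lr=0.5, steps=1000):
    """Full-batch gradient descent on the mean softmax cross-entropy."""
    n, d = len(xs), len(xs[0])
    W = [[0.0] * num_classes for _ in range(d)]
    b = [0.0] * num_classes
    for _ in range(steps):
        gW = [[0.0] * num_classes for _ in range(d)]
        gb = [0.0] * num_classes
        for x, y in zip(xs, ys):
            p = softmax(logits_for(x, W, b))
            for k in range(num_classes):
                delta = p[k] - (1.0 if k == y else 0.0)  # dLoss/dlogit_k = p_k - y_k
                gb[k] += delta / n
                for f in range(d):
                    gW[f][k] += x[f] * delta / n
        for k in range(num_classes):
            b[k] -= lr * gb[k]
            for f in range(d):
                W[f][k] -= lr * gW[f][k]
    return W, b


def prediction(x: List[float], W: List[List[float]], b: List[float]) -> int:
    # the predicted class is the index of the largest logit
    z = logits_for(x, W, b)
    return max(range(len(z)), key=lambda k: z[k])


xs = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
ys_and = [0, 0, 0, 1]  # AND truth table as class labels
W, b = train(xs, ys_and, num_classes=2)
print([prediction(x, W, b) for x in xs])

ys_xor = [0, 1, 1, 0]  # XOR truth table as class labels
W2, b2 = train(xs, ys_xor, num_classes=2)
print([prediction(x, W2, b2) for x in xs])  # cannot match ys_xor
```

Because softmax regression is a linear classifier, it fits AND but cannot reproduce XOR, whose classes are not linearly separable; XOR needs at least one hidden layer.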

Then add b and enter the tf. … GradientDescentOptimizer(). Predictions for the training, validation, and test data. Formula for cross-entropy loss. The TensorFlow documentation uses a keyword called logits.
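
Using the notation from above (y_ij the one-hot label and p_ij the softmax probability for example i and class j, computed from the logits z_ij), a common form of the loss averaged over a batch of N examples and K classes is:

```latex
J = -\frac{1}{N} \sum_{i=1}^{N} \sum_{j=1}^{K} y_{ij} \log p_{ij},
\qquad
p_{ij} = \frac{e^{z_{ij}}}{\sum_{k=1}^{K} e^{z_{ik}}}
```

The inner sum is the per-example loss; averaging it over the batch is, as I understand it, what wrapping the per-example loss tensor in `tf.reduce_mean` computes.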

In the API documentation, many methods are written like this: tf. W), b, name = logits ) logits = tf.
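
The term logits refers to the raw, unnormalized scores a layer produces before the softmax, typically an affine map x·W + b. The sketch below assumes a single input row `x`, a weight matrix `W` of shape (features, classes), and a bias `b`, all with made-up values; the helper `affine_logits` is hypothetical.

```python
import math
from typing import List


def affine_logits(x: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    """logits_k = sum_f x_f * W[f][k] + b_k, i.e. the row-vector form x @ W + b."""
    return [sum(x[f] * W[f][k] for f in range(len(x))) + b[k] for k in range(len(b))]


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


x = [1.0, 2.0]
W = [[0.5, -1.0, 2.0],
     [1.5, 0.0, -3.0]]
b = [0.1, 0.2, 0.3]
logits = affine_logits(x, W, b)  # unbounded real scores, one per class
probs = softmax(logits)          # probabilities in (0, 1) that sum to 1
print(logits)
print(probs)
```

The logits can be any real numbers and need not sum to 1; the softmax turns them into probabilities, and adding the same constant to every logit leaves those probabilities unchanged.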
