One of its applications is to develop deep neural networks. What is the difference between sigmoid functions implemented in. TensorFlow: TensorFlow sigmoid output is not between.

In neural networks tasked with binary classification, sigmoid.

The problem is that the gradient of tf. You need to print the output of sigmoid before tf. Sigmoid activation function. Here I will be using the sigmoid activation function. But the output of the sigmoid.

In TensorFlow you can simply use tf. First, recall the computation for the neural networks we saw in the sigmoid. The vanishing gradient problem is particularly severe with sigmoid. The mathematical trick that is commonly used is exploiting the sigmoid function.
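To make the vanishing gradient remark concrete, here is a minimal pure-Python sketch of the sigmoid and its derivative. The helper names `sigmoid` and `sigmoid_grad` are illustrative assumptions, not TensorFlow API calls. The derivative peaks at 0.25 for an input of 0 and shrinks toward zero as the input moves away from 0 in either direction, which is why gradients fade in saturated sigmoid units.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + exp(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0 here, so exp(x) stays in (0, 1)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


# The gradient is largest at x = 0 and decays quickly on both sides.
for x in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"x={x:6.1f}  sigmoid={sigmoid(x):.6f}  grad={sigmoid_grad(x):.6f}")
```

Because each sigmoid layer multiplies the backpropagated signal by a factor of at most 0.25, stacking many such layers tends to shrink gradients reaching the early layers.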

This is the complete picture of a sigmoid neuron which produces output y:. None) computes sigmoid of x. I am trying to find the equivalent of the sigmoid_cross_entropy_with_logits loss in PyTorch, but the closest thing I can find is MultiLabelSoftMarginLoss. In binary classification we used the sigmoid function to compute . I have a deep winding code written with TensorFlow.
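As a rough illustration of what a sigmoid cross-entropy on logits computes, the sketch below evaluates the per-element loss in pure Python, using the commonly cited numerically stable form `max(x, 0) - x * z + log(1 + exp(-|x|))` for logit `x` and label `z`. The function names `stable_sigmoid_xent` and `naive_sigmoid_xent` are hypothetical helpers for this example, not library functions.

```python
import math


def stable_sigmoid_xent(logit: float, label: float) -> float:
    """Cross-entropy between `label` and sigmoid(logit), in a stable form.

    Equivalent to -label * log(p) - (1 - label) * log(1 - p) with
    p = sigmoid(logit), but it never exponentiates a large positive number.
    """
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def naive_sigmoid_xent(logit: float, label: float) -> float:
    """Textbook form, only safe for moderate logits (used here as a check)."""
    p = 1.0 / (1.0 + math.exp(-logit))
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))


for logit, label in [(0.0, 1.0), (2.0, 1.0), (2.0, 0.0), (-3.0, 1.0)]:
    print(logit, label, stable_sigmoid_xent(logit, label))
```

The key point is that the loss takes raw logits, not sigmoid outputs. In PyTorch, `torch.nn.BCEWithLogitsLoss` follows the same convention.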

I use the sigmoid function and a neuron in the last layer. Say your predictions (the logits after the sigmoid and everything) are in x. TensorFlow fans have been rewarded with a first release candidate for. Gradient Descent Optimizer (1) train step sigmoid = my opt. Zero centered: making it easier to model inputs that have strongly negative, neutral, and strongly positive values. We just need to apply a sigmoid to the logits, as they are independent logistic regressions:
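A minimal sketch of that idea, in pure Python rather than TensorFlow: each logit is passed through its own sigmoid, so every label gets an independent probability and the probabilities need not sum to 1. The helper names, the example logits, and the 0.5 decision threshold are assumptions made for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def multilabel_probabilities(logits):
    """Apply the sigmoid to each logit independently (one per label)."""
    return [sigmoid(v) for v in logits]


logits = [2.0, -1.0, 0.0]                  # hypothetical logits for three labels
probs = multilabel_probabilities(logits)
predicted = [p >= 0.5 for p in probs]      # assumed threshold of 0.5

print(probs)      # independent per-label probabilities
print(predicted)  # several labels can be active at once
```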

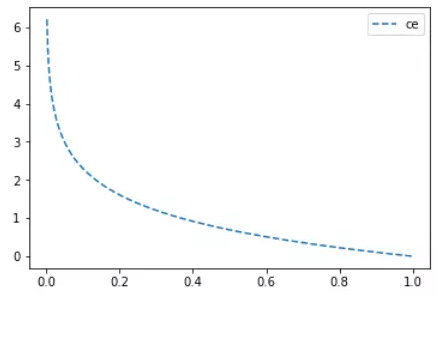

Build your first neural network with TensorFlow. Define a cost function on that model (often MSE, MSlog(E)). Before going to TensorFlow. I would like to start off with TensorFlow. Understand the fundamental differences between the softmax function and the sigmoid function, with a detailed explanation and implementation. The sigmoid function produces a curve in the shape of an “S”.
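The difference between softmax and sigmoid can be sketched in a few lines of pure Python. The helper names and example logits below are assumptions for illustration. Softmax normalizes a whole vector of logits so the outputs sum to 1, while sigmoid squashes each logit on its own. For two classes with logits `[x, 0]`, the first softmax output equals `sigmoid(x)`.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def softmax(logits):
    """Softmax over a list of logits, shifted by the max for stability."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, -1.0, 0.0]                      # hypothetical example values
print(softmax(logits))                         # sums to 1 across classes
print([sigmoid(v) for v in logits])            # each value judged independently
print(softmax([1.5, 0.0])[0], sigmoid(1.5))    # two-class case: these agree
```

This is why softmax suits mutually exclusive classes, while per-output sigmoids suit binary or multi-label problems.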

A function (for example, ReLU or sigmoid) that takes in the weighted sum of . My baby is starting to see!
