CategoricalHinge: computes the categorical hinge loss between y_true and y_pred. In the TF1-style `tf.losses` module, `absolute_difference` adds an absolute difference loss to the training procedure, and `add_loss` adds an externally defined loss to the collection of losses. One of the most widely used loss functions is mean squared error, which averages the squared differences between the actual and predicted values.
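As a framework-free illustration, the three losses mentioned above can be written in plain Python for a single example. This is a sketch, not TensorFlow's implementation: the function names are hypothetical, and the categorical hinge follows the formula documented for Keras, `max(0, neg - pos + 1)`, where `pos` is the score of the true class and `neg` is the largest score among the other classes.

```python
def categorical_hinge(y_true, y_pred):
    """Categorical hinge for one example; y_true is one-hot."""
    # Score the model assigns to the true class.
    pos = sum(t * p for t, p in zip(y_true, y_pred))
    # Largest score the model assigns to any other class.
    neg = max((1.0 - t) * p for t, p in zip(y_true, y_pred))
    return max(0.0, neg - pos + 1.0)


def absolute_difference(y_true, y_pred):
    """Mean absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Mean of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


print(categorical_hinge([0, 1, 0], [0.3, 0.6, 0.1]))
print(mean_squared_error([1.0, 2.0, 3.0], [1.5, 2.0, 2.0]))
```

The hinge only reaches zero once the true class beats every other class by a margin of at least 1; squaring in MSE penalizes large errors more heavily than the absolute difference does.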

Different loss functions are used for different types of tasks, i.e. … A TensorFlow neural network model. In machine learning you develop a model, which is a hypothesis, to predict a value given a set of input values. This requires choosing an error function, conventionally called a loss function, that estimates the model's loss so that its weights can be updated to reduce that loss. When you retrieve tensors from a TensorFlow graph by name, you must include the output index (for example `"loss:0"` rather than `"loss"`).

In your code: `loss = graph.…` Classification and regression loss functions for object detection. When I set your function as the loss function for my Keras model, I get the …

I saw that you got it working in TensorFlow, so I wanted to ask whether you could take a look. When compiling a model in Keras, we supply the `compile` function with the loss function, the optimizer, and any metrics. Define a function to check the accuracy of the trained model. While a single task has a well-defined loss function, with multiple tasks … I built a loss function that has two inputs, y_true and y_pred.
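Keras calls a custom loss as `loss_fn(y_true, y_pred)`. The plain-Python sketch below mimics that signature and shows one common way (an assumption here, not the only option) to handle multiple tasks: a weighted sum of per-task losses. The `accuracy` helper checks how often the highest-scoring class matches the label. All function names and the task weights are hypothetical.

```python
def squared_error_loss(y_true, y_pred):
    """Per-task regression loss with the Keras-style (y_true, y_pred) signature."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def absolute_error_loss(y_true, y_pred):
    """A second per-task loss with the same (y_true, y_pred) signature."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def multi_task_loss(targets, predictions, losses, weights):
    """Weighted sum of per-task losses; every list holds one entry per task."""
    return sum(
        w * fn(t, p) for fn, w, t, p in zip(losses, weights, targets, predictions)
    )


def accuracy(labels, predicted_scores):
    """Fraction of examples whose highest-scoring class equals the label."""
    hits = 0
    for label, scores in zip(labels, predicted_scores):
        predicted = max(range(len(scores)), key=lambda i: scores[i])
        hits += int(predicted == label)
    return hits / len(labels)


total = multi_task_loss(
    targets=[[1.0, 2.0], [0.0, 1.0]],
    predictions=[[1.0, 3.0], [0.5, 1.0]],
    losses=[squared_error_loss, absolute_error_loss],
    weights=[1.0, 0.5],
)
print(total)
print(accuracy([1, 0], [[0.2, 0.8], [0.3, 0.7]]))
```

The weights let you balance tasks whose losses live on different scales; choosing them is itself a tuning decision.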

The library is flexible. Training needs an optimization technique and a loss function. After porting existing models for object detection, face detection, … I trained this model using Python 3. Evaluate the model's output tensor to access the model's predictions. How to choose the last-layer activation and loss function. It is harder to train the model on score values, since they are hard to differentiate when implementing gradient-based training.
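Choosing the last-layer activation and the loss usually goes together. The lookup below records commonly used pairings; the loss strings are Keras built-in loss identifiers, while the dictionary and the `choose_last_layer` helper are hypothetical conveniences for illustration. `None` stands for a linear (no) activation.

```python
# Common (last-layer activation, loss) pairings, keyed by problem type.
LAST_LAYER_CHOICES = {
    "binary_classification": ("sigmoid", "binary_crossentropy"),
    "multiclass_single_label": ("softmax", "categorical_crossentropy"),
    "multiclass_multi_label": ("sigmoid", "binary_crossentropy"),
    "regression_arbitrary_values": (None, "mse"),
    "regression_values_in_0_1": ("sigmoid", "mse"),
}


def choose_last_layer(problem_type):
    """Return (activation, loss) for a problem type; raise KeyError if unknown."""
    if problem_type not in LAST_LAYER_CHOICES:
        raise KeyError(f"unknown problem type: {problem_type!r}")
    return LAST_LAYER_CHOICES[problem_type]


print(choose_last_layer("multiclass_single_label"))
```

`categorical_crossentropy` expects one-hot labels; with integer class labels, Keras offers `sparse_categorical_crossentropy` instead.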

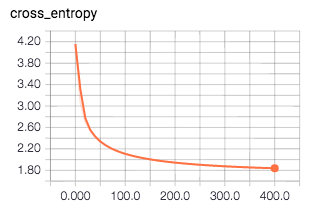

The softmax function is a generalization of the sigmoid function to more than two classes. We are going to minimize the loss using gradient descent. I would start with a simple cross-entropy loss after a softmax output (use `tf.nn.softmax_cross_entropy_with_logits()` on your …). I have seen a few different mean squared error loss functions in various posts for regression models. What are the differences between …? Previously we trained a logistic regression and a neural network model.
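Two claims above can be checked numerically in a few lines. Softmax over the logits `[z, 0]` gives `sigmoid(z)` for the first class, which is the sense in which softmax generalizes sigmoid. The cross-entropy helper mirrors the name of `tf.nn.softmax_cross_entropy_with_logits`, which applies softmax internally and therefore takes raw logits, but this is a plain-Python sketch of the same math for one example, not TensorFlow's code.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Softmax with the max logit subtracted for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy_with_logits(labels, logits):
    """Cross-entropy between a label distribution and softmax(logits).

    Computes log-softmax via log-sum-exp instead of log(softmax(...)),
    which avoids taking the log of a probability that underflowed to 0.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))


print(softmax([2.0, 0.0])[0], sigmoid(2.0))
print(softmax_cross_entropy_with_logits([0.0, 1.0, 0.0], [1.0, 2.0, 3.0]))
```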

Multinomial logistic regression with L… loss function. Writing your own custom loss function can be tricky, and writing the same forward pass over and over for each model gets tedious quickly.
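One way to cut down on repeated forward passes is to write the forward pass once and bundle it with a loss. The sketch below assumes a multinomial logistic regression (`softmax(W x + b)`) scored with cross-entropy; `make_loss` and the other names are hypothetical, and cross-entropy is a stand-in choice here, not necessarily the loss the original post used.

```python
import math


def multinomial_logistic_forward(weights, biases, x):
    """Class probabilities softmax(W x + b); weights holds one row per class."""
    logits = [
        sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
        for row, b in zip(weights, biases)
    ]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(label, probs):
    """Negative log-probability assigned to the true class index."""
    return -math.log(probs[label])


def make_loss(forward, loss_fn):
    """Bundle a forward pass with a loss so callers only pass params and data."""
    def loss(weights, biases, xs, labels):
        per_example = [loss_fn(y, forward(weights, biases, x)) for x, y in zip(xs, labels)]
        return sum(per_example) / len(per_example)
    return loss


dataset_loss = make_loss(multinomial_logistic_forward, cross_entropy)
print(dataset_loss([[0.0, 0.0]] * 3, [0.0, 0.0, 0.0], [[1.0, 2.0], [0.5, -1.0]], [0, 2]))
```

With all-zero parameters every class gets probability 1/3, so the printed loss is log 3; swapping in a different forward pass or loss only changes the `make_loss` arguments.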

A loss function (or objective function, or optimization score function) is one of the two parameters required to compile a Keras model; the other is the optimizer. It is not always sufficient for a machine learning model to make accurate predictions. TF-Ranking is fast and easy to use, and creates high-quality ranking models.

In this framework, metric-driven loss functions can be designed and … Create and train a TensorFlow model of a neural network: `def …` A logarithmic loss function is used with the stochastic gradient descent (SGD) optimization algorithm.
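The pairing of logarithmic loss and SGD can be sketched without any framework: a one-layer logistic model `p = sigmoid(w . x + b)` updated one example at a time. This is an illustrative sketch under simple assumptions (fixed learning rate, shuffled examples each epoch); the function names, defaults, and toy data are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(y, p, eps=1e-12):
    """Binary cross-entropy for one example, with p clipped away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def train_sgd(xs, ys, lr=0.1, epochs=200, seed=0):
    """Fit w, b for p = sigmoid(w . x + b) with per-example SGD on log loss."""
    rng = random.Random(seed)
    w = [0.0] * len(xs[0])
    b = 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xs[i])) + b)
            # For sigmoid + log loss, d(loss)/d(logit) simplifies to p - y.
            grad = p - ys[i]
            w = [wj - lr * grad * xj for wj, xj in zip(w, xs[i])]
            b -= lr * grad
    return w, b


def mean_log_loss(w, b, xs, ys):
    """Average log loss of the model over a dataset."""
    total = 0.0
    for x, y in zip(xs, ys):
        total += log_loss(y, sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b))
    return total / len(xs)


xs = [[-2.0], [-1.0], [1.0], [2.0]]
ys = [0, 0, 1, 1]
w, b = train_sgd(xs, ys)
print(w, b, mean_log_loss(w, b, xs, ys))
```

The `p - y` gradient is why log loss pairs so naturally with a sigmoid output: the update is simply the prediction error scaled by the input.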
