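What loss function should you use for multi-class and multi-label classification? In multiclass classification with K classes, the standard answer is cross-entropy, which is also the usual error function for training neural networks. The first question to settle is whether your problem is multi-label or multi-class: in a multi-class problem each example belongs to exactly one of the K classes, while in a multi-label problem an example can carry several labels at once.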
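The practical difference between the two settings can be sketched in plain NumPy (an assumption: the post names no library, and the helper names below are hypothetical). Multi-class uses a softmax, whose probabilities compete and sum to 1, with categorical cross-entropy; multi-label uses an independent sigmoid per label with binary cross-entropy.

```python
import numpy as np

def softmax(z):
    # Subtract the row max for numerical stability; each row then sums to 1.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def sigmoid(z):
    # Independent probability per label; rows need not sum to 1.
    return 1.0 / (1.0 + np.exp(-z))

def multiclass_loss(logits, y_onehot):
    # Exactly one correct class per example: softmax + categorical cross-entropy.
    p = softmax(logits)
    return float(-np.mean(np.sum(y_onehot * np.log(p), axis=-1)))

def multilabel_loss(logits, y_multi):
    # Any number of labels may be on: sigmoid + binary cross-entropy per label.
    p = sigmoid(logits)
    per_label = -(y_multi * np.log(p) + (1 - y_multi) * np.log(1 - p))
    return float(np.mean(per_label))

logits = np.array([[2.0, -1.0, 0.5]])
multiclass_loss(logits, np.array([[1.0, 0.0, 0.0]]))   # class 0 is the single true class
multilabel_loss(logits, np.array([[1.0, 0.0, 1.0]]))   # labels 0 and 2 are both on
```

The same logits feed both losses; only the output activation and the target encoding change.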

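A common surprise is getting infinity as your cross-entropy. That happens when the model assigns a probability of exactly 0 to the true class, because -log(0) is infinite. Another frequent confusion is the formula itself: the multiclass log loss sums over classes, with the index i enumerating the different classes of a single example, and it assumes that exactly one of the targets y_i in each example is 1 and the rest are 0.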
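The infinity, and the usual remedy of clipping probabilities away from 0, can be shown in a few lines. This is a NumPy sketch; the `eps` value of 1e-7 is an assumption chosen for illustration, not a value taken from any particular library.

```python
import numpy as np

def cross_entropy(p_true_class, eps=None):
    """Cross-entropy of one example, given the probability assigned to its true class."""
    p = np.asarray(p_true_class, dtype=float)
    if eps is not None:
        # Clip into [eps, 1 - eps] so log never sees an exact 0 (eps is an assumed value).
        p = np.clip(p, eps, 1.0 - eps)
    with np.errstate(divide="ignore"):
        # Without clipping, log(0) = -inf, so the loss becomes +inf.
        return -np.log(p)

cross_entropy(0.0)            # inf: the model was certain and wrong
cross_entropy(0.0, eps=1e-7)  # large but finite
```

Clipping keeps training numerically stable, at the cost of capping the penalty for a confidently wrong prediction.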

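In binary classification, where the number of classes M equals 2, cross-entropy can be calculated directly from the predicted probability of the positive class. If M > 2 (i.e. multiclass classification), we calculate a separate loss for each class label per observation and sum the result. For multiclass classification, we can use either categorical cross-entropy loss or sparse categorical cross-entropy loss; both compute the same quantity and differ only in the label format, one-hot vectors for the former and integer class indices for the latter. A related, frequently asked question is why binary_crossentropy can appear more accurate than categorical_crossentropy on a multiclass problem.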
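Written out, the binary and multiclass forms described above look as follows. The notation is an assumption for this sketch: y is the 0/1 label and p the predicted probability of class 1 in the binary case; y_{o,c} is 1 if class c is the correct label for observation o, and p_{o,c} is the predicted probability of class c.

```latex
% Binary case (M = 2)
L_{\text{binary}} = -\bigl(y \log p + (1 - y)\log(1 - p)\bigr)

% Multiclass case (M > 2): one term per class c for observation o, summed
L_{\text{multi}} = -\sum_{c=1}^{M} y_{o,c} \log p_{o,c}

% Sparse form: with integer label k_o only the true class contributes
L_{\text{sparse}} = -\log p_{o,k_o}
```

Setting M = 2 with one-hot targets in the multiclass form recovers the binary form term by term, and the sparse form is the multiclass form with the zero terms dropped.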

Categorical cross-entropy is a loss function used for single-label categorization, where each example has exactly one correct class, and it is used a lot in multi-class classification. It compares the predicted probability distribution with the true label and penalizes the model according to how little probability it assigned to the correct class.
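Here is a NumPy sketch of that comparison, in both the one-hot (categorical) and integer-label (sparse) forms. The function names mirror the Keras loss names for readability, but these are hypothetical stand-ins, not Keras's implementation.

```python
import numpy as np

def categorical_crossentropy(y_onehot, probs):
    # One-hot targets: only the true class's log-probability survives the sum.
    return -np.sum(y_onehot * np.log(probs), axis=-1)

def sparse_categorical_crossentropy(y_int, probs):
    # Integer targets: index the true class's probability directly.
    return -np.log(probs[np.arange(len(y_int)), y_int])

probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])
y_int = np.array([0, 2])
y_onehot = np.eye(3)[y_int]   # the same labels, one-hot encoded

categorical_crossentropy(y_onehot, probs)
sparse_categorical_crossentropy(y_int, probs)  # same per-example values
```

The sparse form avoids materializing one-hot vectors, which matters when the number of classes is large.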

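The usual choice for the output of a multi-class classifier is a softmax layer. So should you choose binary cross-entropy for binary classification and categorical cross-entropy for multi-class classification? In general, yes. The same reasoning carries over to segmentation: one approach is to generate a binary mask for each of the classes, where each pixel value marks whether that pixel belongs to the class. Note that the term "cross-entropy method" also appears in a different sense in the support vector machine (SVM) literature: one approach decomposes a multiclass classification problem into binary SVM classifiers and, for each binary SVM classifier, applies the cross-entropy method (an optimization technique, not a loss function) to solve the dual SVM problem. When modeling multi-class classification problems using neural networks, however, cross-entropy is used as the loss.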
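The per-class binary masks and the per-pixel softmax can be sketched together in NumPy. The (classes, height, width) layout and both function names are assumptions made for this illustration.

```python
import numpy as np

def label_map_to_masks(label_map, num_classes):
    # (H, W) integer class ids -> (num_classes, H, W): one 0/1 mask per class.
    return (np.arange(num_classes)[:, None, None] == label_map[None, :, :]).astype(np.float32)

def pixelwise_categorical_ce(logits, masks):
    # logits: (num_classes, H, W). Log-softmax over the class axis at every pixel.
    z = logits - logits.max(axis=0, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    # The mask selects -log p(true class) at each pixel; average over all pixels.
    return float(-(masks * log_p).sum(axis=0).mean())

label_map = np.array([[0, 1],
                      [2, 1]])
masks = label_map_to_masks(label_map, 3)
masks.sum(axis=0)                                   # all ones: one class per pixel
pixelwise_categorical_ce(np.zeros((3, 2, 2)), masks)  # log(3) for uninformative logits
```

Because every pixel has exactly one class, the masks act as one-hot targets and softmax with categorical cross-entropy applies; if masks could overlap, the problem would be multi-label and per-mask binary cross-entropy would fit instead.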

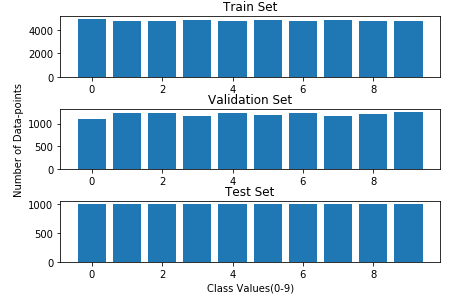

When you have more than classes, use categorical cross entropy. Figure 1: A montage of a multi-class deep learning dataset. Log loss, aka logistic loss or cross - entropy loss. In addition, applying cross entropy method on multiclass SVM produces . In multi-class classification, a balanced dataset has target labels that are evenly.
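Log loss over a dataset is just the mean cross-entropy per example, and class balance is a simple count. The sketch below uses hypothetical NumPy helpers (scikit-learn ships a real `sklearn.metrics.log_loss`, which is not used here); the clipping constant is an assumption.

```python
import numpy as np

def log_loss(y_int, probs, eps=1e-15):
    # Mean cross-entropy over the dataset; clipping keeps log(0) from occurring.
    p = np.clip(probs[np.arange(len(y_int)), y_int], eps, 1.0)
    return float(-np.mean(np.log(p)))

def class_balance(y_int, num_classes):
    # Fraction of examples per class; a balanced dataset has roughly equal fractions.
    return np.bincount(y_int, minlength=num_classes) / len(y_int)

y = np.array([0, 1, 2, 0, 1, 2])
uniform = np.full((len(y), 3), 1.0 / 3.0)   # a model that always predicts uniformly
class_balance(y, 3)     # equal shares: the dataset is balanced
log_loss(y, uniform)    # log(3), the baseline any useful model should beat
```

On a balanced K-class dataset, a constant uniform prediction scores log K, which gives a quick sanity check for a freshly initialized network.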

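Focal loss generalizes categorical cross-entropy: when γ = 0, focal loss is equivalent to categorical cross-entropy, and as γ is increased, well-classified examples are down-weighted more strongly so training concentrates on hard examples. PyTorch implements several variants of the cross-entropy loss (for example nn.CrossEntropyLoss, nn.NLLLoss, nn.BCELoss and nn.BCEWithLogitsLoss), largely for convenience and computational efficiency: some take raw logits, others probabilities or log-probabilities. With only two classes, softmax followed by categorical cross-entropy is equivalent to the binary cross-entropy.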
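The γ = 0 equivalence can be checked numerically. This NumPy sketch implements the multi-class focal loss -(1 - p_t)^γ log p_t without the optional class-weighting (α) term; γ = 2 is used only as an illustrative value, and the function names are hypothetical.

```python
import numpy as np

def categorical_ce(y_int, probs):
    # Plain cross-entropy: -log of the probability of the true class.
    p_t = probs[np.arange(len(y_int)), y_int]
    return -np.log(p_t)

def categorical_focal_loss(y_int, probs, gamma=2.0):
    # p_t is the probability assigned to the true class.
    p_t = probs[np.arange(len(y_int)), y_int]
    # (1 - p_t) ** gamma shrinks the loss of confident, correct predictions.
    return -((1.0 - p_t) ** gamma) * np.log(p_t)

probs = np.array([[0.9, 0.05, 0.05],   # easy example: true class well predicted
                  [0.3, 0.4, 0.3]])    # hard example: true class not the argmax
y = np.array([0, 0])

np.allclose(categorical_focal_loss(y, probs, gamma=0.0), categorical_ce(y, probs))  # True
```

With γ = 2 the easy example keeps only 1% of its cross-entropy while the hard one keeps 49%, which is the re-weighting focal loss is designed to produce.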

With logistic regression, we were in the binary classification setting, so the labels were 0 or 1. But not every problem fits the mold of binary classification. We now train a multi-class neural network using Keras with TensorFlow as the backend (feel free to use others), optimized via categorical cross-entropy. In this example we use a loss function suited to multi-class classification, the categorical cross-entropy loss function, categorical_crossentropy.
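To show what "optimized via categorical cross-entropy" does without assuming Keras is installed, here is a swapped-in NumPy sketch: softmax regression (a one-layer network) trained by full-batch gradient descent. The toy clusters, learning rate and epoch count are made-up assumptions for illustration.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_softmax_regression(X, y, num_classes, lr=0.1, epochs=300, seed=0):
    """One-layer softmax classifier fitted by gradient descent on categorical cross-entropy."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.01, size=(X.shape[1], num_classes))
    b = np.zeros(num_classes)
    Y = np.eye(num_classes)[y]             # one-hot targets
    losses = []
    for _ in range(epochs):
        P = softmax(X @ W + b)
        losses.append(float(-np.mean(np.sum(Y * np.log(P + 1e-12), axis=1))))
        # For softmax + categorical cross-entropy, the gradient w.r.t. the logits is P - Y.
        G = (P - Y) / len(X)
        W -= lr * X.T @ G
        b -= lr * G.sum(axis=0)
    return W, b, losses

# Made-up toy data: three well-separated 2-D clusters, 50 points each.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 3.0], [3.0, -1.0], [-3.0, -1.0]])
y = np.repeat(np.arange(3), 50)
X = centers[y] + rng.normal(scale=0.5, size=(150, 2))

W, b, losses = train_softmax_regression(X, y, num_classes=3)
accuracy = np.mean(np.argmax(X @ W + b, axis=1) == y)
```

The simple P - Y gradient is one reason softmax and categorical cross-entropy are paired so often; a Keras model compiled with categorical_crossentropy relies on the same pairing through automatic differentiation.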

Logarithmic loss (related to cross-entropy) measures the performance of a classification model whose output is a probability between 0 and 1. In neural networks tasked with binary classification, a sigmoid activation on the output layer is the standard choice, paired with binary cross-entropy. This may also be relevant for some image segmentation tasks, and for multiclass or multilabel problems, not only binary classification. Suppose we have a neural network for multi-class classification whose final layer has a softmax activation. Besides the loss, a useful metric is the top-k categorical accuracy rate, i.e. success when the target class is within the top-k predictions provided.
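Top-k categorical accuracy can be sketched in NumPy as follows. This is a hypothetical helper written for illustration, not Keras's metric implementation; the default k = 5 is an assumption.

```python
import numpy as np

def top_k_categorical_accuracy(y_int, probs, k=5):
    # Column indices of the k highest-scoring classes per row (order within the top k is irrelevant).
    top_k = np.argsort(probs, axis=1)[:, -k:]
    # A hit when the true class appears anywhere among those k indices.
    hits = np.any(top_k == y_int[:, None], axis=1)
    return float(np.mean(hits))

probs = np.array([[0.5, 0.3, 0.2],
                  [0.1, 0.2, 0.7],
                  [0.6, 0.3, 0.1]])
y = np.array([1, 2, 2])

top_k_categorical_accuracy(y, probs, k=1)  # 1/3: only row 1's top class is correct
top_k_categorical_accuracy(y, probs, k=2)  # 2/3: row 0's true class is its runner-up
```

With k = 1 this reduces to ordinary accuracy; larger k is useful when many classes are easily confused.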
