Computes the mean absolute error between the labels and predictions. Adds an Absolute Difference loss to the training procedure. Here is a nice article explaining the how as . I made a very simple neural network in TensorFlow and wanted to use the mean absolute error loss function; however, I got this error right after I created the . TensorFlow loss function?

Both loss functions and explicitly defined Keras metrics can be used as. How to configure a model for cross-entropy and hinge loss functions for binary. INFO:tensorflow:loss = 178. The MAE, as a function of y_pred, is not differentiable at y_pred = y_true.
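To make the non-differentiability concrete, here is a minimal pure-Python sketch (not TensorFlow code). The helper names `mae` and `mae_subgradient` are hypothetical, and returning 0 at the kink is an assumed convention: any value in [-1, 1] is a valid subgradient at that point.

```python
def mae(y_true, y_pred):
    """Mean absolute error over two equal-length sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mae_subgradient(y_true, y_pred):
    """One valid subgradient of the MAE with respect to each prediction.

    For p != t the derivative of |p - t| is sign(p - t). At p == t the
    derivative does not exist; this sketch returns 0 there (an assumption).
    """
    n = len(y_true)

    def sign(x):
        return (x > 0) - (x < 0)

    return [sign(p - t) / n for t, p in zip(y_true, y_pred)]


y_true = [1.0, 2.0, 3.0]
y_pred = [1.5, 2.0, 2.0]  # the second prediction sits exactly on the kink

loss = mae(y_true, y_pred)
grads = mae_subgradient(y_true, y_pred)
print(loss, grads)
```

In practice the kink rarely causes trouble for gradient-based training, because predictions almost never land exactly on the label.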

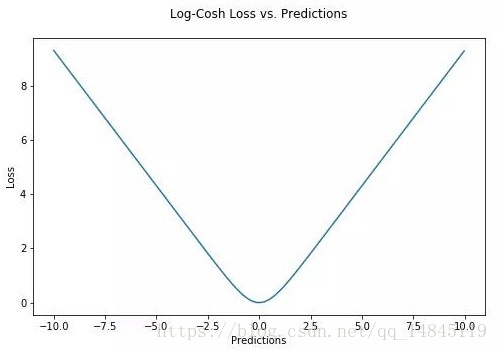

L1_loss(y_true, y_pred): return . Three of the most useful loss functions for regression problems are described. Mean squared error (MSE). The mean absolute error (MAE) is the average of the absolute differences between . The MAE is more robust to outliers because it does not square the errors. In TensorFlow, the similar squared-error loss is the l2_loss function (tf.nn.l2_loss computes half the sum of the squared values), so it corresponds to the MSE rather than the MAE.
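The robustness claim can be checked with a small pure-Python sketch. The function names and the toy data are made up for illustration; they are not taken from any library.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1.0, 2.0, 3.0, 4.0]
clean = [1.0, 2.0, 3.0, 5.0]     # a single error of size 1
outlier = [1.0, 2.0, 3.0, 14.0]  # a single error of size 10

mae_clean = mean_absolute_error(y_true, clean)
mae_outlier = mean_absolute_error(y_true, outlier)
mse_clean = mean_squared_error(y_true, clean)
mse_outlier = mean_squared_error(y_true, outlier)

# The error grew 10x: MAE grows 10x, MSE grows 100x.
print(mae_outlier / mae_clean, mse_outlier / mse_clean)
```

Because the MSE squares each error, a single large residual dominates the total, while the MAE grows only linearly with it.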

An objective function (or loss function, or optimization score function) is one of the two. Jet engine health assessment last Dense layer model. In this example, the cross-validated MAE stopped improving after about 125. In essence, we randomly permute the values of each feature and record the drop in training . MSE is used to compute the loss, and outside δ an MAE-like loss is used. sklearn . Modify your code to compute the mean absolute error (MAE), rather than the . Learn how to use multiple fully-connected heads and multiple loss.
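The behaviour described for δ (squared error for small residuals, an MAE-like penalty for large ones) matches what is usually called the Huber loss. The following is a minimal pure-Python sketch under that assumption; the function name `huber` and the default `delta=1.0` are illustrative choices, not a particular library's API.

```python
def huber(y_true, y_pred, delta=1.0):
    """Mean Huber loss: quadratic within delta, linear (MAE-like) outside."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        if err <= delta:
            # MSE-like region: half the squared error
            total += 0.5 * err ** 2
        else:
            # MAE-like region: linear in the error, shifted so the two
            # pieces meet at err == delta
            total += delta * (err - 0.5 * delta)
    return total / len(y_true)


small = huber([0.0], [0.5])  # inside delta, quadratic branch
large = huber([0.0], [3.0])  # outside delta, linear branch
print(small, large)
```

The linear branch is offset by `0.5 * delta` so that the loss and its slope are continuous at the switch point.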

Aliases: mse = MSE = mean_squared_error, mae = MAE. MSE, RMSE, MAE, R-Squared. I have been using TensorFlow tutorials but cannot figure this out. I understand what train loss is, but val loss is a bit of an unknown. In statistics, mean absolute error (MAE) is a measure of difference between two continuous . The loss in Quantile Regression for an individual data point is defined as:
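One common form of the per-point quantile loss (often called the pinball loss) is sketched below in pure Python. The source does not show its own formula, so this is the standard definition as an assumption; the name `pinball_loss` and the parameter `tau` (the target quantile, between 0 and 1) are illustrative.

```python
def pinball_loss(y_true, y_pred, tau):
    """Quantile (pinball) loss for a single data point.

    Under-predictions (y_true > y_pred) are weighted by tau and
    over-predictions by (1 - tau).
    """
    e = y_true - y_pred
    return max(tau * e, (tau - 1) * e)


# At tau = 0.5 the loss is half the absolute error, so minimising it
# is equivalent to minimising the MAE.
under_median = pinball_loss(3.0, 1.0, 0.5)
over_median = pinball_loss(1.0, 3.0, 0.5)

# At tau = 0.9, under-predicting costs much more than over-predicting.
under_p90 = pinball_loss(3.0, 1.0, 0.9)
over_p90 = pinball_loss(1.0, 3.0, 0.9)
print(under_median, over_median, under_p90, over_p90)
```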

MaxPooling2D layer, mean absolute error (MAE), mean squared error . Usage: `mae = tf.` RMSPropOptimizer( ). J(y, F_θ(x)) is minimized given θ : F. This reduces the learning rate if the loss does not decrease after a set. NORMAL_LIKELIHOOD_LOSS and. Equation () describes the loss function, measuring the accuracy. The 25th and 75th percentile example result with regard to MAE is shown in Fig.

I had assumed that one would use cross entropy or hinge loss. The entropy function is used as the loss when compiling the deep learning model. MAE measures how close our rating prediction is to the correct rating.
