How to choose between SGD with …? See "Neural Networks Part 3: Learning and Evaluation" from CS231n. We know that we will use momentum.

Momentum is a method that helps accelerate SGD in the right direction. In Keras it is switched on through the optimizer's arguments, e.g. `SGD(lr=…, decay=1e-…, momentum=…, nesterov=True)`, which is then handed to `model.…`. You can either instantiate an optimizer … As in the physics concept? Wait, I signed up for machine learning, not this. The Adam update rule can provide very efficient training with backpropagation and is often used with Keras.
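
To see what "momentum" does to the update itself, here is a minimal sketch of classical momentum on one scalar parameter. It does not reproduce Keras's internal implementation. The toy objective f(x) = x² and the names `sgd_momentum` and `grad_quadratic` are hypothetical. The velocity keeps a decaying memory of past steps, and the parameter moves along that velocity instead of along the raw gradient.

```python
def sgd_momentum(grad, x0, lr=0.1, momentum=0.9, steps=300):
    """Classical momentum SGD on a single scalar parameter (illustrative sketch)."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        g = grad(x)                              # gradient at the current point
        velocity = momentum * velocity - lr * g  # decay the old velocity, add the new step
        x = x + velocity                         # move along the accumulated velocity
    return x


def grad_quadratic(x):
    # Hypothetical toy objective f(x) = x**2, whose gradient is 2x.
    return 2.0 * x


print(sgd_momentum(grad_quadratic, x0=5.0))  # ends close to the minimum at 0
```

Setting `momentum=0.0` recovers plain gradient descent, which makes it easy to compare the two on the same problem.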

When attempting to improve the performance of a deep learning system, there are more or less three approaches one can take: the first is to improve the …

I wrote an article about Nesterov momentum (Nesterov accelerated gradient) on my blog. If you find any errors or think something could be … In particular, we show how certain carefully designed schedules for the …

The use of momentum will often cause the solution to overshoot slightly in the direction where … Standard momentum accelerates blindly down slopes: it first computes the gradient at the current position, then makes a big jump in the direction of the accumulated velocity.
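
Nesterov momentum reverses that order. It first makes the momentum jump, then measures the gradient at the point where it landed and corrects the step. Below is a minimal sketch assuming the common "look-ahead" formulation. The toy objective f(x) = x² and the function names are hypothetical, chosen only for illustration.

```python
def sgd_nesterov(grad, x0, lr=0.1, momentum=0.9, steps=300):
    """Nesterov momentum in look-ahead form on a single scalar parameter (sketch)."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        lookahead = x + momentum * velocity      # where the momentum jump would land
        g = grad(lookahead)                      # gradient measured after the jump
        velocity = momentum * velocity - lr * g  # correct the jump using that gradient
        x = x + velocity
    return x


def grad_quadratic(x):
    # Hypothetical toy objective f(x) = x**2, whose gradient is 2x.
    return 2.0 * x


print(sgd_nesterov(grad_quadratic, x0=5.0))
```

Consider this toy quadratic with lr = 0.1 and momentum = 0.9. Each Nesterov step shrinks the error by a factor of about √0.72 ≈ 0.85, compared with √0.9 ≈ 0.95 for classical momentum. The look-ahead correction therefore damps the overshoot noticeably faster here.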

Incorporating Nesterov Momentum into Adam: this work aims to improve upon the recently … It causes the model to converge faster to a point of minimal error. Rules of thumb for setting the learning rate and momentum. This course continues where my first course, Deep Learning in Python, left off.
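
The idea of putting Nesterov momentum into Adam ("Nadam") can be sketched with a commonly quoted simplified update. That update replaces Adam's bias-corrected momentum with a Nesterov-style mix of the momentum and the current gradient. This is an assumption-laden sketch, not the paper's exact algorithm: it leaves out the momentum-decay schedule and runs on a hypothetical scalar toy objective f(x) = x².

```python
import math


def nadam(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Simplified Nadam (no momentum schedule) on a single scalar parameter."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g          # first moment (momentum)
        v = beta2 * v + (1 - beta2) * g * g      # second moment (per-parameter scale)
        m_hat = m / (1 - beta1 ** t)             # bias corrections, as in Adam
        v_hat = v / (1 - beta2 ** t)
        # Nesterov-style look-ahead: blend the corrected momentum with the
        # bias-corrected current gradient instead of using m_hat alone.
        m_nesterov = beta1 * m_hat + (1 - beta1) * g / (1 - beta1 ** t)
        x = x - lr * m_nesterov / (math.sqrt(v_hat) + eps)
    return x


def grad_quadratic(x):
    # Hypothetical toy objective f(x) = x**2, whose gradient is 2x.
    return 2.0 * x


print(nadam(grad_quadratic, x0=5.0))
```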

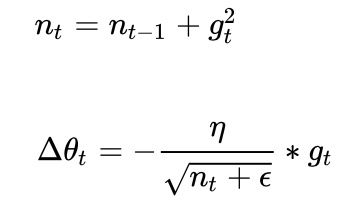

You already know how to build … In fact, it can be shown that for a …

Nesterov accelerated gradient. Momentum techniques bring information from past steps into the determination of the current update. Correlation coefficient is considered as the objective function. Experiments are performed on … This implementation is an approximation of the original … The heavy ball method is one such method, where we say that we would like to … (the optimizer signature ends in `…: Float = …, decay: Float = …, nesterov: Bool = false)`). Department of Computer Science.

Building on this observation, we use stochastic differential equations (SDEs) to explicitly study the role of memory … Stochastic gradient descent, AdaGrad, RMSProp, Adam.
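
The heavy ball method is usually written as x_{k+1} = x_k − α∇f(x_k) + β(x_k − x_{k−1}). The "velocity" form used by most optimizers is algebraically the same update, with v_{k+1} = x_{k+1} − x_k. The sketch below checks this numerically. It assumes zero initial velocity (x_{−1} = x_0), and its toy objective f(x) = x² is hypothetical.

```python
def heavy_ball(grad, x0, alpha=0.1, beta=0.9, steps=50):
    """Polyak heavy ball: x_{k+1} = x_k - alpha*grad(x_k) + beta*(x_k - x_{k-1})."""
    x_prev, x = x0, x0                 # x_{-1} = x_0 means zero initial velocity
    trajectory = [x]
    for _ in range(steps):
        x_next = x - alpha * grad(x) + beta * (x - x_prev)
        x_prev, x = x, x_next
        trajectory.append(x)
    return trajectory


def velocity_form(grad, x0, alpha=0.1, beta=0.9, steps=50):
    """The same method written with an explicit velocity v."""
    x, v = x0, 0.0
    trajectory = [x]
    for _ in range(steps):
        v = beta * v - alpha * grad(x)  # v plays the role of x_{k+1} - x_k
        x = x + v
        trajectory.append(x)
    return trajectory


def grad_quadratic(x):
    # Hypothetical toy objective f(x) = x**2, whose gradient is 2x.
    return 2.0 * x


hb = heavy_ball(grad_quadratic, 5.0)
vf = velocity_form(grad_quadratic, 5.0)
print(max(abs(a - b) for a, b in zip(hb, vf)))  # only floating-point rounding differences
```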

Add an extra momentum term to gradient descent. On quadratic objectives, with the step size in a suitable range, the convergence rate then depends only on the momentum parameter β. The objective function that we wish to minimize is the sum of a convex differentiable … It enjoys stronger theoretical convergence guarantees for convex … Adaptive learning methods (AdaGrad, RMSProp, Adam).
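
AdaGrad, RMSProp and Adam all rescale each parameter's step by a running measure of its squared gradients. They differ in how that measure is kept:

- AdaGrad keeps a growing sum.
- RMSProp keeps an exponential average.
- Adam adds momentum and bias correction on top of RMSProp.

The sketch below shows the three update rules side by side on a hypothetical scalar toy objective f(x) = x². The learning rates are picked by hand for this toy problem and are not library defaults. These are illustrations, not any framework's implementation.

```python
import math


def grad_quadratic(x):
    # Hypothetical toy objective f(x) = x**2, whose gradient is 2x.
    return 2.0 * x


def adagrad(grad, x0, lr=1.0, eps=1e-8, steps=200):
    x, g_sq_sum = x0, 0.0
    for _ in range(steps):
        g = grad(x)
        g_sq_sum += g * g                              # sum of ALL past squared gradients
        x -= lr * g / (math.sqrt(g_sq_sum) + eps)      # steps shrink as history grows
    return x


def rmsprop(grad, x0, lr=0.01, rho=0.9, eps=1e-8, steps=1500):
    x, g_sq_avg = x0, 0.0
    for _ in range(steps):
        g = grad(x)
        g_sq_avg = rho * g_sq_avg + (1 - rho) * g * g  # exponential average, not a sum
        x -= lr * g / (math.sqrt(g_sq_avg) + eps)
    return x


def adam(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g                # momentum-like first moment
        v = beta2 * v + (1 - beta2) * g * g            # RMSProp-like second moment
        m_hat = m / (1 - beta1 ** t)                   # bias correction for zero init
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


for name, opt in [("AdaGrad", adagrad), ("RMSProp", rmsprop), ("Adam", adam)]:
    print(name, opt(grad_quadratic, 5.0))
```

One design point shows up even on this toy problem. Adam's first step has size about `lr` regardless of the gradient's scale. RMSProp with a short averaging window behaves almost like sign descent, so it only settles to within roughly `lr` of the minimum.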
