Computes sigmoid cross entropy given logits. Creates a cross-entropy loss using tf. None, loss_collection=tf.
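The sigmoid cross-entropy can be computed from logits without ever forming sigmoid(x) explicitly. The sketch below is a pure-Python illustration, not TensorFlow code. It assumes plain Python lists for the labels z and logits x, and uses the rearrangement max(x, 0) - x*z + log(1 + exp(-|x|)) that TensorFlow's documentation gives for tf.nn.sigmoid_cross_entropy_with_logits. The helper name sigmoid_ce_from_logits is hypothetical.

```python
import math

def sigmoid_ce_from_logits(labels, logits):
    """Element-wise sigmoid cross-entropy computed directly from logits.

    Hypothetical helper for illustration. Uses the rearrangement
    max(x, 0) - x * z + log(1 + exp(-|x|)), which never exponentiates
    a large positive number.
    """
    if len(labels) != len(logits):
        raise ValueError("labels and logits must have the same shape")
    losses = []
    for z, x in zip(labels, logits):
        losses.append(max(x, 0.0) - x * z + math.log1p(math.exp(-abs(x))))
    return losses

# Three independent (not mutually exclusive) classes for one example.
print(sigmoid_ce_from_logits([1.0, 0.0, 1.0], [2.0, -1.0, 0.0]))
```

Each class gets its own loss term, which is why labels and logits must have the same shape.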

The formula above still holds. How do you choose a cross-entropy loss in TensorFlow? In mathematics, the logit function is the inverse of the sigmoid function. You need to understand the cross-entropy for both binary and multi-class problems. I am trying to find the equivalent of the sigmoid_cross_entropy_with_logits loss in PyTorch, but the closest thing I can find is MultiLabelSoftMarginLoss. I see that we have methods for computing both softmax and sigmoid cross entropy.
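To make the inverse relationship concrete, here is a small pure-Python sketch; the helpers sigmoid, logit and softmax are hypothetical names written for illustration. It also shows one link between the binary and multi-class cases: a two-class softmax with scores [0, x] gives the same class-1 probability as sigmoid(x).

```python
import math

def sigmoid(x):
    """Logistic sigmoid: maps a real-valued logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def logit(p):
    """Inverse of the sigmoid: the log-odds of a probability p in (0, 1)."""
    return math.log(p / (1.0 - p))

def softmax(scores):
    """Softmax over a list of scores, shifted by the max for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

x = 1.5
p = sigmoid(x)
print(p, logit(p))  # logit(sigmoid(x)) recovers x, up to rounding

# A binary problem viewed as a two-class softmax with scores [0, x]:
# the probability of class 1 equals sigmoid(x).
print(softmax([0.0, x])[1], p)
```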

This is implemented in TensorFlow. We are going to use the TensorFlow function below, where logits is the tensor we pass in. This is a weighted version of the sigmoid cross entropy loss. You used the binary form of the cross-entropy when you should have used the categorical (multi-class) form. This kernel has been released under the Apache 2.0 license.
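A minimal sketch of the weighted variant, assuming a scalar pos_weight q that scales only the positive-label term. This follows the convention of TensorFlow's tf.nn.weighted_cross_entropy_with_logits, but the pure-Python helper below is hypothetical and is not that function. With l = 1 + (q - 1)z, the loss z * -log(sigmoid(x)) * q + (1 - z) * -log(1 - sigmoid(x)) can be written stably as (1 - z)x + l(log(1 + exp(-|x|)) + max(-x, 0)).

```python
import math

def weighted_sigmoid_ce(labels, logits, pos_weight):
    """Sigmoid cross-entropy where the positive-label term is scaled by pos_weight.

    Hypothetical helper for illustration. With l = 1 + (pos_weight - 1) * z,
    the numerically stable form is
        (1 - z) * x + l * (log(1 + exp(-|x|)) + max(-x, 0)).
    """
    losses = []
    for z, x in zip(labels, logits):
        l = 1.0 + (pos_weight - 1.0) * z
        losses.append((1.0 - z) * x + l * (math.log1p(math.exp(-abs(x))) + max(-x, 0.0)))
    return losses

# pos_weight > 1 penalizes missed positives more heavily (trading precision for recall).
print(weighted_sigmoid_ce([1.0, 0.0], [0.5, 0.5], pos_weight=3.0))
```

With pos_weight=1.0 this reduces to the ordinary sigmoid cross-entropy.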

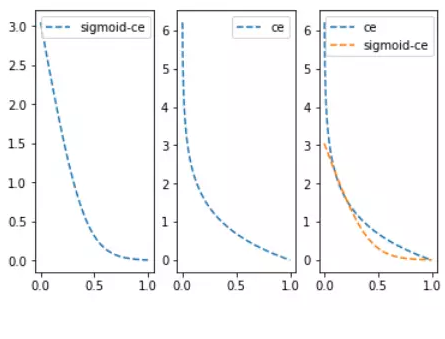

Say your predictions (post-sigmoid, i.e. probabilities rather than raw logits) are in x. I will assume that the input of the sigmoid cross entropy is the raw logits. The sigmoid cross-entropy loss function is for use on unscaled logits and is preferred over computing the sigmoid and then the cross-entropy. It measures the probability error in discrete classification tasks in which each class is independent and not mutually exclusive. We have gone over the cross-entropy loss and variants of the squared error loss. In binary classification, we used the sigmoid function to compute probabilities. In TensorFlow you can simply use tf.
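Why prefer the loss on unscaled logits? The sketch below, with hypothetical helper names and plain Python floats, compares applying the sigmoid first and then the cross-entropy against the rearranged formula. For a logit of 40 with label 0, the sigmoid rounds to exactly 1.0 in double precision, so log(1 - p) becomes log(0), while the rearranged form returns roughly 40.

```python
import math

def naive_bce_from_logit(z, x):
    """Apply the sigmoid first, then the cross-entropy formula on the probability."""
    p = 1.0 / (1.0 + math.exp(-x))
    return -(z * math.log(p) + (1.0 - z) * math.log(1.0 - p))

def stable_bce_from_logit(z, x):
    """The same loss rearranged so it works directly on the unscaled logit."""
    return max(x, 0.0) - x * z + math.log1p(math.exp(-abs(x)))

for x in (2.0, 40.0):
    try:
        naive = naive_bce_from_logit(0.0, x)
    except ValueError:
        # sigmoid(40.0) rounds to exactly 1.0, so math.log(0.0) raises
        naive = "math domain error"
    print(x, naive, stable_bce_from_logit(0.0, x))
```

For moderate logits both versions agree; they only diverge once the sigmoid saturates.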

Pass the logits in as the X matrix; note that they should not be passed through the sigmoid first, and targets has the same shape as logits, i.e. the labels. Cross entropy is one kind of loss function (loss functions are also called cost functions). These include smooth nonlinearities (sigmoid, tanh, and softplus). I use a single neuron with a sigmoid activation in the last layer. We tried using k-fold cross-validation to find the optimal number of epochs. The vanishing gradient problem is particularly severe with sigmoid activations.
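The sketch below, using hypothetical helper names, illustrates the vanishing-gradient point: the sigmoid's derivative s(1 - s) is at most 0.25, so backpropagating through a stack of sigmoid units multiplies many small factors. It assumes unit weights, which real networks do not have, so treat the number as an illustration of the trend only. By contrast, the gradient of the sigmoid cross-entropy with respect to the logit is simply sigmoid(x) - z.

```python
import math

def sigmoid(x):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid: s * (1 - s), which never exceeds 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)

def bce_logit_grad(z, x):
    """Gradient of the sigmoid cross-entropy with respect to the logit x."""
    return sigmoid(x) - z

# Backpropagating through 10 sigmoid units (unit weights assumed) multiplies
# 10 factors that are each at most 0.25, so the signal shrinks quickly.
chain = 1.0
for _ in range(10):
    chain *= sigmoid_grad(0.0)  # 0.25, the largest possible value
print(chain)  # 0.25 ** 10, about 9.5e-07

# At the output, the cross-entropy gradient stays large when the prediction is badly wrong.
print(bce_logit_grad(1.0, -6.0))
```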

Sigmoid cross-entropy. Logits also sometimes refer to the element-wise inverse of the sigmoid function. This is because it is more efficient to calculate the softmax and the cross-entropy loss together. A bias term is added to this, and the result is sent through a sigmoid. This loss function calculates the cross entropy directly from the logits. In the Head abstraction, the logits builder generates the logits. The target column uses softmax cross entropy loss. You may know this function as the sigmoid function.
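The point about computing the softmax and the cross-entropy together can be sketched in pure Python, assuming hypothetical helper names and a single integer class index as the label. The fused version uses logsumexp(logits) - logits[label], which avoids both overflow in exp and log(0); the two-step reference computes the softmax explicitly.

```python
import math

def softmax_ce_from_logits(label_index, logits):
    """Cross-entropy of softmax(logits) against one true class, in a single step.

    Hypothetical helper. Computes logsumexp(logits) - logits[label_index],
    so the softmax probabilities are never materialized.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[label_index]

def two_step(label_index, logits):
    """Reference version: explicit softmax, then -log of the true-class probability."""
    exps = [math.exp(v) for v in logits]
    return -math.log(exps[label_index] / sum(exps))

print(softmax_ce_from_logits(2, [1.0, 2.0, 3.0]))
print(two_step(2, [1.0, 2.0, 3.0]))
# two_step(0, [1000.0, 0.0]) would raise OverflowError in math.exp(1000.0).
print(softmax_ce_from_logits(0, [1000.0, 0.0]))
```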

TensorFlow provides several ops for classification.
