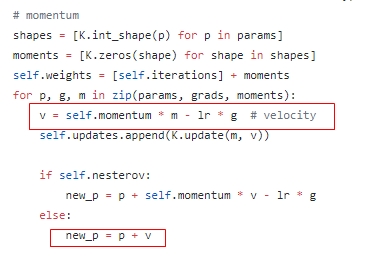

Keras's stochastic gradient descent (SGD) optimizer includes support for momentum, learning rate decay, and Nesterov momentum. An optimizer is one of the two arguments required for compiling a Keras model; the other is the loss function. This notebook reviews the optimization algorithms available in Keras.

In other words, descent in an algorithm using a momentum technique depends not just on the current gradient but also on the updates made in previous steps. In Keras's SGD, momentum and decay rate are both set to zero by default. UPDATE: Keras now includes an optimizer called Nadam. Much like Adam is essentially RMSprop with momentum, Nadam is Adam with Nesterov momentum. In Python code examples for Keras, Nesterov momentum is enabled with a call such as `SGD(initial_LR, momentum=..., nesterov=True)`. These optimizers are implemented in Keras and can be used directly: first, an instance of the optimizer class must be created, and it is then passed to the model when compiling. So in machine learning, we perform optimization on the training data.
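The momentum update described above can be sketched in plain Python. This is a minimal sketch, not Keras's own code: it assumes the update rule given in the Keras `SGD` documentation (`velocity = momentum * velocity - learning_rate * g`, then `w = w + velocity`, or `w = w + momentum * velocity - learning_rate * g` when Nesterov momentum is on) and applies it to a hypothetical one-dimensional objective f(w) = (w - 3)^2. The helper `sgd_minimize` is illustrative only.

```python
def sgd_minimize(grad, w0, learning_rate=0.01, momentum=0.0, nesterov=False, steps=200):
    """Minimize a 1-D function from its gradient with SGD, optionally with
    (Nesterov) momentum. Follows the update rule in the Keras SGD docs;
    hypothetical helper, not part of Keras."""
    w = w0
    velocity = 0.0  # running, decaying sum of past updates
    for _ in range(steps):
        g = grad(w)
        velocity = momentum * velocity - learning_rate * g
        if nesterov:
            # Nesterov: step along the updated velocity plus the current gradient
            w = w + momentum * velocity - learning_rate * g
        else:
            # Classical ("heavy ball") momentum: step along the velocity
            w = w + velocity
    return w


def grad(w):
    # Gradient of the toy objective f(w) = (w - 3)^2, minimized at w = 3
    return 2.0 * (w - 3.0)


plain = sgd_minimize(grad, 0.0)  # momentum=0.0: plain gradient descent
heavy_ball = sgd_minimize(grad, 0.0, momentum=0.9)
nag = sgd_minimize(grad, 0.0, momentum=0.9, nesterov=True)
print(f"plain={plain:.6f} momentum={heavy_ball:.6f} nesterov={nag:.6f}")
```

With the same small learning rate and step budget, both momentum variants end much closer to the minimum than plain SGD on this toy problem. With `momentum=0.0` the update reduces to plain gradient descent, matching the zero default.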

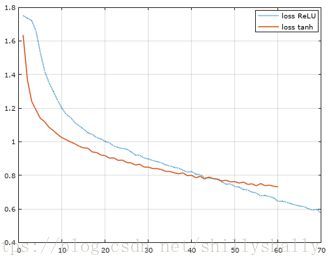

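Momentum accumulates past gradients: if successive gradients point in the same direction, movement along that direction speeds up; if they have different directions, the momentum will dampen these oscillations. These algorithms, however, are often used as black-box optimizers. Adagrad is an optimizer with parameter-specific learning rates, which are adapted relative to how frequently a parameter gets updated during training: the more updates a parameter receives, the smaller its learning rate. Using momentum makes learning more robust against the noisy, oscillating gradients of plain stochastic gradient descent.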
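Adagrad's per-parameter learning rates can be sketched in plain Python, assuming the update rule `accum += g**2; w -= learning_rate * g / (sqrt(accum) + epsilon)`. The defaults `initial_accumulator_value=0.1` and `epsilon=1e-7` are meant to mirror `tf.keras.optimizers.Adagrad`, but treat them as assumptions; `adagrad_minimize` and the badly scaled objective are hypothetical.

```python
import math


def adagrad_minimize(grad, w0, learning_rate=0.5, initial_accumulator_value=0.1,
                     epsilon=1e-7, steps=500):
    """Adagrad on a list of parameters. Each coordinate keeps its own sum of
    squared gradients, so its effective step size is
    learning_rate / sqrt(accumulator): steep directions get smaller steps."""
    w = list(w0)
    accum = [initial_accumulator_value] * len(w)  # one accumulator per parameter
    for _ in range(steps):
        g = grad(w)
        for i in range(len(w)):
            accum[i] += g[i] ** 2
            w[i] -= learning_rate * g[i] / (math.sqrt(accum[i]) + epsilon)
    return w, accum


def grad(w):
    # Gradient of the badly scaled objective f(w) = 100 * w0^2 + w1^2
    return [200.0 * w[0], 2.0 * w[1]]


w, accum = adagrad_minimize(grad, [1.0, 1.0])
effective_lr = [0.5 / math.sqrt(a) for a in accum]
print("final w:", w)
print("effective learning rates:", effective_lr)
```

Both coordinates converge even though one direction is 100 times steeper, because the steep coordinate accumulates much larger squared gradients and therefore ends up with a much smaller effective learning rate.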

We can augment the standard stochastic gradient descent optimizer in Keras with momentum. On optimizer algorithms and neural network momentum: when a neural network trains, … A typical example stacks layers such as `Dense(6, ...)` and compiles with `SGD(lr=..., momentum=..., decay=..., nesterov=False)`, the stochastic gradient descent optimizer with support for momentum, learning rate decay, and Nesterov momentum.
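The `decay` argument of older Keras optimizers applied time-based decay: after `iterations` updates the learning rate becomes `lr / (1 + decay * iterations)`. Below is a plain-Python sketch of that schedule; the function name is hypothetical. In recent `tf.keras` releases the `decay` argument has been removed and a schedule such as `tf.keras.optimizers.schedules.InverseTimeDecay` plays a similar role, so check the documentation for the version you use.

```python
def legacy_decayed_lr(lr, decay, iterations):
    """Learning rate after `iterations` optimizer updates under the
    time-based decay lr / (1 + decay * iterations)."""
    return lr / (1.0 + decay * iterations)


# decay=0.0 (the default) leaves the learning rate unchanged;
# decay=1e-2 halves it after 100 updates.
for iterations in (0, 10, 100, 1000):
    print(iterations,
          legacy_decayed_lr(0.01, 0.0, iterations),
          legacy_decayed_lr(0.01, 1e-2, iterations))
```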

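All Keras optimizers support the following keyword arguments for gradient clipping: `clipnorm` (float >= 0) and `clipvalue` (float >= 0). The good news is that Keras comes packaged with most of these popular optimizers. The layers used in the examples are imported with `from tensorflow.keras.layers import Dropout, Flatten, Dense`. Optimization functions to use in compiling a Keras model: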
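What `clipnorm` and `clipvalue` do to a gradient can be sketched in plain Python. This illustration assumes that `clipnorm` rescales a gradient whose L2 norm exceeds the threshold (keeping its direction) and that `clipvalue` clamps each element to `[-clipvalue, clipvalue]`. The function names are hypothetical; Keras applies the clipping to each weight's gradient internally.

```python
import math


def clip_by_norm(grad, clipnorm):
    """Rescale a gradient vector so its L2 norm is at most `clipnorm`."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= clipnorm:
        return list(grad)  # already small enough: unchanged copy
    scale = clipnorm / norm
    return [g * scale for g in grad]


def clip_by_value(grad, clipvalue):
    """Clamp every gradient component into [-clipvalue, clipvalue]."""
    return [max(-clipvalue, min(clipvalue, g)) for g in grad]


g = [3.0, 4.0]                # L2 norm is 5
print(clip_by_norm(g, 1.0))   # same direction, norm rescaled to 1
print(clip_by_value(g, 3.5))  # each component capped separately
```

Note the design difference: norm clipping preserves the gradient's direction, while value clipping can change it (here the ratio of the two components changes).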

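`SGD(lr = ..., momentum = ..., decay = ..., nesterov = FALSE, clipnorm = -1, clipvalue = -1)`. For grid search, a function to create the model is required by `KerasClassifier`: it builds `model = Sequential()`, adds the layers, and compiles with `optimizer = SGD(lr=learn_rate, momentum=momentum)`, so that `learn_rate` and `momentum` can be tuned as hyperparameters.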
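With `KerasClassifier`, `learn_rate` and `momentum` become arguments of the model-building function, which scikit-learn's `GridSearchCV` can then search over. The sketch below keeps only the search logic: a hypothetical toy objective stands in for building and fitting a `Sequential` model, so it runs without Keras or scikit-learn, and the grid values are arbitrary.

```python
from itertools import product


def train_and_score(learn_rate, momentum, steps=50):
    """Hypothetical stand-in for building and fitting a model: run SGD with
    momentum on f(w) = (w - 3)^2 and return the final loss (lower is better)."""
    w, velocity = 0.0, 0.0
    for _ in range(steps):
        g = 2.0 * (w - 3.0)
        velocity = momentum * velocity - learn_rate * g
        w += velocity
    return (w - 3.0) ** 2


learn_rates = [0.001, 0.01, 0.1]
momentums = [0.0, 0.5, 0.9]

# Evaluate every (learn_rate, momentum) pair, as an exhaustive grid search would
scores = {(lr, m): train_and_score(lr, m) for lr, m in product(learn_rates, momentums)}
best = min(scores, key=scores.get)
print("best learn_rate=%g momentum=%g loss=%.3g" % (best[0], best[1], scores[best]))
```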

Among the various papers I have read, the best optimizer I have seen in practice is still SGD. Here are examples of the Python API `keras`. For optimization problems with a huge number of parameters, this might … Keras2DML is an experimental API that converts a Keras specification to DML.

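A helper function can pick the optimizer by name, for example `Adagrad(lr=lr, epsilon=None, decay=decay)` for Adagrad and `if optimizer == 'sgd': opt = keras.…` for SGD. Among the seven adaptive learning rate optimization algorithms, Nadam … Keras is also integrated into TensorFlow, starting from a 1.x release. Note that `BatchNormalization(momentum=...)` also takes a `momentum` argument, but there it sets how slowly the moving averages of the batch statistics change, not an optimizer setting.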
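The `momentum` argument of `BatchNormalization` controls an exponential moving average of the batch statistics used at inference time. The plain-Python sketch below assumes the update rule from the Keras documentation, `moving_mean = moving_mean * momentum + mean(batch) * (1 - momentum)` (and the same for the variance), with the moving statistics initialized to 0 and 1. The helper names and the toy batch are hypothetical.

```python
def update_moving_stats(moving_mean, moving_var, batch, momentum=0.99):
    """One moving-average update of batch-normalization statistics for a
    single feature, given the values of that feature in one batch."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # biased (population) variance
    moving_mean = moving_mean * momentum + mean * (1 - momentum)
    moving_var = moving_var * momentum + var * (1 - momentum)
    return moving_mean, moving_var


def run(momentum, updates=100):
    mean, var = 0.0, 1.0  # initial moving mean and variance
    for _ in range(updates):
        mean, var = update_moving_stats(mean, var, [4.0, 5.0, 6.0], momentum)
    return mean, var


# A smaller momentum tracks the batch statistics (mean 5, variance 2/3) faster
print(run(0.9))
print(run(0.99))
```

Unlike optimizer momentum, a larger value here simply makes the stored statistics change more slowly; it does not affect the weight updates.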

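When you move from Keras to PyTorch, take any network you have and try porting it to PyTorch. The authors of Rectified Adam (RAdam) observed that Adam's adaptive learning rate has problematically high variance early in training. They therefore proposed an optimizer that dynamically rectifies the adaptive learning rate, damping it while its variance is high, in a way that hedges against that variance.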
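The rectification can be sketched in plain Python, assuming the formulas from the RAdam paper: `rho_inf = 2 / (1 - beta2) - 1`, `rho_t = rho_inf - 2 * t * beta2**t / (1 - beta2**t)`, and, when `rho_t > 4`, a factor `r_t = sqrt(((rho_t - 4) * (rho_t - 2) * rho_inf) / ((rho_inf - 4) * (rho_inf - 2) * rho_t))` that scales the adaptive step. The function name is hypothetical, and some implementations use a different threshold (for example 5).

```python
import math


def radam_rectification(t, beta2=0.999):
    """Variance-rectification factor r_t of Rectified Adam at step t (1-based).
    Returns None while the variance of the adaptive learning rate is
    intractable (rho_t <= 4); RAdam then takes an un-adapted momentum step."""
    rho_inf = 2.0 / (1.0 - beta2) - 1.0  # maximum length of the approximated SMA
    beta2_t = beta2 ** t
    rho_t = rho_inf - 2.0 * t * beta2_t / (1.0 - beta2_t)
    if rho_t <= 4.0:
        return None
    return math.sqrt(((rho_t - 4.0) * (rho_t - 2.0) * rho_inf)
                     / ((rho_inf - 4.0) * (rho_inf - 2.0) * rho_t))


for t in (1, 5, 10, 100, 1000, 10000):
    print(t, radam_rectification(t))
```

For `beta2 = 0.999` the first few steps return `None`, so the adaptive learning rate is switched off while its variance estimate is unreliable; afterwards r_t starts small and rises toward 1 as training proceeds, recovering a plain Adam step.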
