Understand the fundamental differences between the softmax function and the sigmoid function, with a detailed explanation and an implementation. Generally, we use softmax activation instead of sigmoid with the cross-entropy loss because softmax distributes the probability across all classes, so the outputs sum to 1. The sigmoid function is used for two-class logistic regression, whereas the softmax function is used for multiclass logistic regression. It is common practice to use a softmax function for the output of a neural network; doing this gives us a probability distribution over the output classes. In Keras, activations can be used either through an Activation layer or through the `activation` argument supported by all forward layers. For the softmax activation, `axis` is an integer giving the axis along which the softmax normalization is applied.
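To make the difference concrete, here is a minimal plain-Python sketch (standard library only, not Keras code). The logits are made-up values. It shows that softmax outputs sum to 1, that sigmoid is the two-class special case of softmax, and how a row-wise softmax corresponds to normalizing along the last axis of a batch.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: squashes a single score into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    """Softmax over a list of scores; outputs are positive and sum to 1.

    The max score is subtracted first so math.exp cannot overflow;
    this shift does not change the result.
    """
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_rows(matrix):
    """Apply softmax independently to each row (normalizing along the last axis)."""
    return [softmax(row) for row in matrix]


# Hypothetical logits for a 3-class problem.
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)
print(probs, sum(probs))

# Sigmoid is the two-class special case: softmax over [z, 0]
# gives sigmoid(z) as the probability of the first class.
z = 1.5
print(sigmoid(z), softmax([z, 0.0])[0])

# Each row of a (hypothetical) batch is normalized on its own.
batch = softmax_rows([[1.0, 2.0], [3.0, 3.0]])
print(batch)
```

The identity softmax([z, 0])[0] = 1 / (1 + e^(-z)) is why a single sigmoid unit and a two-unit softmax layer describe the same binary model.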

We will discuss how to use Keras to solve this problem. By using softmax, we would clearly pick class … and 4. Sigmoid activation function: a common activation function for binary classification is the sigmoid function. Neural networks are used to contain …
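Sigmoid treats each output independently, so thresholding each one at 0.5 can select several classes at once (the multi-label case), while softmax makes the classes compete for one probability budget and its argmax picks exactly one. A minimal sketch with hypothetical logits for five classes (values invented for illustration):

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for classes 0..4.
logits = [-2.0, 0.5, -1.0, 0.2, 3.0]

# Sigmoid: each class is an independent yes/no decision at threshold 0.5,
# which is the same as asking whether its logit is positive.
sigmoid_picks = [i for i, z in enumerate(logits) if sigmoid(z) > 0.5]

# Softmax: classes share one probability distribution; take the single largest.
probs = softmax(logits)
softmax_pick = max(range(len(probs)), key=probs.__getitem__)

print(sigmoid_picks)  # → [1, 3, 4]
print(softmax_pick)   # → 4
```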

For example, here is how to use the ReLU activation function via the Keras library (see all supported activations). How to load data from CSV and make it available to Keras. Regression to values between 0 and 1. The last layer uses a softmax activation, which means it will return an array of probability scores. Each image in the MNIST dataset is 28x28 and contains a centered grayscale digit. What if we use an activation other than ReLU, e.g. …? As part of my own learning, continuing from Part … and trying to improve our neural network model, …
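A rough sketch of what such a network computes for one input: ReLU applied element-wise in the hidden layer, then a softmax over the whole output vector. It assumes a tiny hypothetical architecture (4 inputs, 3 hidden units, 2 classes) with made-up weights, written in plain Python rather than as a trained Keras model; for MNIST the input would instead be the 28x28 image flattened to 784 values.

```python
import math


def relu(x):
    return max(0.0, x)


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Pre-activation of a fully connected layer: W·x + b (one value per row of W)."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


# Hypothetical weights: 4 inputs -> 3 hidden units -> 2 output classes.
W1 = [[0.2, -0.5, 0.1, 0.4],
      [-0.3, 0.8, 0.0, -0.1],
      [0.5, 0.5, -0.5, 0.2]]
b1 = [0.0, -0.5, -0.2]
W2 = [[1.0, -1.0, 0.5],
      [-0.5, 1.0, 0.3]]
b2 = [0.0, 0.0]

x = [1.0, 0.5, -1.0, 2.0]
hidden = [relu(v) for v in dense(x, W1, b1)]  # ReLU element-wise; negatives become 0
probs = softmax(dense(hidden, W2, b2))        # softmax over the whole output vector
print(hidden)
print(probs, sum(probs))
```

Note that ReLU is applied per unit, while softmax needs every output score at once, which is why the output layer's activation is applied to the full vector.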

A layer can also take the sigmoid activation directly: `keras.layers.Dense(12, activation=tf.nn.sigmoid)`. If you know any other losses, let me know and I will add them. For this reason, the first layer in a Sequential model (and only the first, because following layers can do automatic shape inference) needs to receive information about its input shape. Keras is a high-level API to build and train deep learning models.
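Why only the first layer needs an input shape: a Dense layer's kernel has shape (inputs, units), and every later layer can read its input size from the previous layer's unit count. The plain-Python sketch below imitates that bookkeeping for a hypothetical stack of Dense(12), Dense(8), and Dense(1) on 8 input features; the helper `dense_shapes` is invented for illustration, and the counts use the inputs * units + units rule for a Dense layer with a bias.

```python
def dense_shapes(input_dim, units_per_layer):
    """Infer (kernel shape, bias shape, parameter count) for a stack of Dense layers.

    Only input_dim must be given up front; each later layer's input size is
    the previous layer's number of units.
    """
    shapes = []
    fan_in = input_dim
    for units in units_per_layer:
        params = fan_in * units + units  # kernel weights plus one bias per unit
        shapes.append(((fan_in, units), (units,), params))
        fan_in = units  # "shape inference" for the next layer
    return shapes


layers = dense_shapes(8, [12, 8, 1])
for kernel, bias, params in layers:
    print(kernel, bias, params)
print("total params:", sum(p for _, _, p in layers))
```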

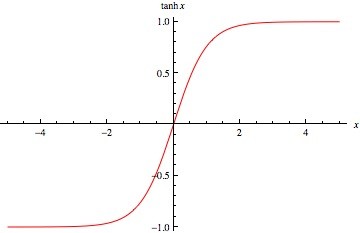

Add a softmax layer with … output units. Let us go through these activation functions, how they work, and figure out which one suits which kind of problem. Uses: ReLU is less computationally expensive than tanh and sigmoid because it involves simpler mathematical operations (a comparison with zero instead of an exponential). Output: the softmax function is ideally used in the output layer of the classifier.
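A small standard-library comparison of the three hidden-layer activations and their derivatives, at arbitrarily chosen test points. ReLU needs only a comparison, while sigmoid and tanh each evaluate an exponential, and their gradients shrink toward zero for large |x| (sigmoid's gradient never exceeds 0.25).

```python
import math


def relu(x):
    return x if x > 0 else 0.0


def relu_grad(x):
    # 1 for positive inputs, 0 otherwise (the value at exactly 0 is a convention).
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # maximum 0.25, reached at x == 0


def tanh_grad(x):
    t = math.tanh(x)
    return 1.0 - t * t  # maximum 1.0, reached at x == 0


for x in (-5.0, 0.0, 5.0):
    print(x, relu(x), sigmoid(x), math.tanh(x))
    print("  grads:", relu_grad(x), sigmoid_grad(x), tanh_grad(x))
```

tanh is a rescaled sigmoid, tanh(x) = 2·sigmoid(2x) - 1, so the two share the same saturation behaviour; ReLU avoids it for positive inputs.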

Jupyter notebook showcasing … Learn about Python text classification with Keras. It is generally common to use a rectified linear unit (ReLU) for hidden layers, a sigmoid function for the output layer in a binary classification problem, and a softmax function for the output layer in a multiclass classification problem. Softmax is commonly used as an activation function in the last layer. In that tutorial, I showed how using a naive, softmax-based word embedding …
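These output activations are usually paired with a matching loss: sigmoid with binary cross-entropy, softmax with categorical cross-entropy. The sketch below writes both losses out by hand (these are the textbook formulas, not Keras's implementations, and the logit is hypothetical) and checks that, for two classes, they give the same value.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def binary_crossentropy(y_true, p):
    """y_true is 0 or 1; p is the predicted probability of class 1."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


def categorical_crossentropy(one_hot, probs):
    """one_hot is the target distribution; probs is a softmax output."""
    return -sum(t * math.log(q) for t, q in zip(one_hot, probs) if t > 0)


z = 0.8  # hypothetical logit for the positive class
bce = binary_crossentropy(1, sigmoid(z))
cce = categorical_crossentropy([1, 0], softmax([z, 0.0]))
print(bce, cce)  # equal up to floating-point rounding

# Three-class case: only softmax + categorical cross-entropy applies directly.
loss3 = categorical_crossentropy([0, 0, 1], softmax([1.0, 2.0, 3.0]))
print(loss3)
```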

Make Keras split your training data into training and validation sets, and track the … Change sigmoid to softmax: what do you get? Make your own neural networks with this Keras cheat sheet to deep learning in Python for beginners, with code samples.
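In Keras this split is requested with the `validation_split` argument of `model.fit`, which holds out the last fraction of the samples (selected before any shuffling) as validation data. The helper below is a hypothetical plain-Python imitation of that behaviour, not Keras code; its floor rounding of the split point and its range check are assumptions made for the sketch.

```python
import math


def split_train_validation(x, y, validation_split):
    """Hold out the last `validation_split` fraction of samples for validation.

    Hypothetical helper mimicking Keras's documented validation_split
    behaviour: the tail of the data is used, and nothing is shuffled first.
    """
    if not 0.0 <= validation_split < 1.0:
        raise ValueError("validation_split must be in [0, 1)")
    split_at = int(math.floor(len(x) * (1.0 - validation_split)))
    return (x[:split_at], y[:split_at]), (x[split_at:], y[split_at:])


x = list(range(10))
y = [i % 2 for i in x]
(train_x, train_y), (val_x, val_y) = split_train_validation(x, y, 0.2)
print(train_x, val_x)
```

Because the tail is taken without shuffling, data sorted by label can end up with a validation set that contains only one class, so shuffle first if the data is ordered.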

They specify a joint distribution over the observed and latent variables. The Gumbel-softmax trick for inference in discrete latent variables, and even the … Gaussian belief networks, sigmoid belief networks, and many others.
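The Gumbel-softmax trick replaces a hard categorical sample with a differentiable relaxation: add Gumbel(0, 1) noise g = -log(-log u), with u uniform in (0, 1), to each logit, then apply a softmax with temperature τ. As τ shrinks, the sample moves toward one-hot. A standard-library sketch with hypothetical logits and temperatures; the seeded `random.Random` only makes the run repeatable and lets both calls share the same noise.

```python
import math
import random


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gumbel_softmax_sample(logits, temperature, rng):
    """Draw one relaxed one-hot sample from the categorical given by logits."""
    gumbels = []
    for _ in logits:
        u = rng.random()
        while u == 0.0:  # random() can return exactly 0.0; avoid log(0)
            u = rng.random()
        gumbels.append(-math.log(-math.log(u)))
    return softmax([(l + g) / temperature for l, g in zip(logits, gumbels)])


logits = [1.0, 0.5, -1.0]  # hypothetical unnormalized log-probabilities
# Same seed for both calls: identical Gumbel noise, only the temperature differs.
soft = gumbel_softmax_sample(logits, temperature=5.0, rng=random.Random(0))
hard = gumbel_softmax_sample(logits, temperature=0.05, rng=random.Random(0))
print(soft)  # spread out across classes
print(hard)  # typically much closer to one-hot
```

With shared noise, lowering τ never changes which class wins; it only sharpens the distribution around it.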
