In the alpha release, there was a change in the default ports. Hadoop: Setting up a Single Node Cluster. Now check that you can ssh to localhost without a passphrase. How do I start the Hadoop daemons?
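The passphrase check can also be scripted. Below is a minimal sketch, assuming OpenSSH is installed. The helper names `ssh_check_command` and `can_ssh_without_passphrase` are hypothetical. It relies on the real `BatchMode=yes` option, which makes ssh fail instead of prompting. A successful run therefore means key-based login works.

```python
import subprocess


def ssh_check_command(host: str = "localhost") -> list:
    """Build an ssh command that fails instead of prompting for a password."""
    # BatchMode=yes disables password/passphrase prompts, so the command only
    # succeeds when key-based login works without any interaction.
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5", host, "true"]


def can_ssh_without_passphrase(host: str = "localhost") -> bool:
    """Return True if `ssh <host> true` succeeds without any prompt."""
    try:
        result = subprocess.run(
            ssh_check_command(host),
            stdin=subprocess.DEVNULL,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=15,
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):
        # ssh is not installed, or the connection attempt hung.
        return False
    return result.returncode == 0
```

Call `can_ssh_without_passphrase()` as the user who will run Hadoop. In batch mode, ssh will not ask you to accept an unknown host key, so this check can fail until you have run `ssh localhost` interactively once.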

Daemons can also be started individually, by hand, on each machine. Step 3: Download Hadoop 2. This document describes how to set up and configure a single-node Hadoop installation so that you can quickly perform simple operations using Hadoop MapReduce and the Hadoop Distributed File System (HDFS). I have already installed Hadoop on my machine running Ubuntu 13.
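The whole single-node setup is normally started with `sbin/start-dfs.sh` and `sbin/start-yarn.sh`. To start a single daemon by hand, the command differs between major versions. Hadoop 3 uses `bin/hdfs --daemon start <name>` and `bin/yarn --daemon start <name>`. Hadoop 2 uses `sbin/hadoop-daemon.sh` and `sbin/yarn-daemon.sh`. Below is a sketch that builds the right command. The function name, the daemon table and the `/opt/hadoop` install path used in the usage line are all assumptions for illustration.

```python
import os

# Which launcher owns each daemon (hypothetical table covering HDFS and YARN only).
DAEMON_LAUNCHERS = {
    "namenode": "hdfs",
    "datanode": "hdfs",
    "secondarynamenode": "hdfs",
    "resourcemanager": "yarn",
    "nodemanager": "yarn",
}


def start_daemon_command(daemon: str, hadoop_home: str, major_version: int = 3) -> list:
    """Return the command that starts one daemon on the current machine."""
    if daemon not in DAEMON_LAUNCHERS:
        raise ValueError(f"unknown daemon: {daemon}")
    launcher = DAEMON_LAUNCHERS[daemon]
    if major_version >= 3:
        # Hadoop 3: bin/hdfs --daemon start namenode, bin/yarn --daemon start nodemanager, ...
        return [os.path.join(hadoop_home, "bin", launcher), "--daemon", "start", daemon]
    # Hadoop 2: separate wrapper scripts in sbin/ for HDFS and YARN daemons.
    script = "hadoop-daemon.sh" if launcher == "hdfs" else "yarn-daemon.sh"
    return [os.path.join(hadoop_home, "sbin", script), "start", daemon]
```

For example, `subprocess.run(start_daemon_command("namenode", "/opt/hadoop"), check=True)` would start only the NameNode on a Hadoop 3 install.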

A tutorial on how to install Hadoop on a single node. Welcome to our guide on installing Hadoop in five simple steps. There are many links on the web about installing Hadoop 3. Install a Hadoop single-node cluster on Linux.

The first step is to download Java, Hadoop, and Spark. Hadoop requires SSH access to manage its nodes, i.e. remote machines plus your local machine. Apache Hadoop is a popular big data framework that is widely used. Once we have the docker-hadoop folder on our local machine, we … Before we start installing the Hadoop framework, we must …
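Before going further, it can help to confirm that the prerequisites are in place. Java must be on the PATH and `JAVA_HOME` must be set, since Hadoop's scripts and `etc/hadoop/hadoop-env.sh` rely on it. The ssh client must also be present. Below is a minimal sketch. The function name `missing_prerequisites` is hypothetical, and the checklist covers only these three items.

```python
import shutil
from typing import Callable, List, Mapping, Optional


def missing_prerequisites(
    env: Mapping[str, str],
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """List which single-node Hadoop prerequisites are missing."""
    missing = []
    # Hadoop runs on the JVM, so a java executable must be on the PATH.
    if which("java") is None:
        missing.append("java")
    # Hadoop's scripts look up JAVA_HOME to locate the JVM.
    if not env.get("JAVA_HOME"):
        missing.append("JAVA_HOME")
    # Hadoop manages its nodes (including localhost) over SSH.
    if which("ssh") is None:
        missing.append("ssh")
    return missing
```

Run it as `missing_prerequisites(os.environ)`. Passing `which` as a parameter keeps the check easy to test without touching the real PATH.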

Standalone mode: in standalone mode, we configure Hadoop on a single machine. Hands-on Hadoop installation for …-bit Windows. Once everything is set up, test it again with ssh localhost.

If you see the "Last login" time, you are good to go; if not, your SSH setup still needs fixing. Two part files are created, each of which is a sequence file that we can inspect. Hello, I have installed Hadoop following these instructions. You will need a Hadoop cluster set up to work through this material.
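To inspect the part files, `hadoop fs -text` decodes SequenceFiles directly. If you first copy the output directory locally (for example with `hdfs dfs -get`), you can also confirm what each part file is. Every SequenceFile starts with the bytes `SEQ`, followed by one version byte. Below is a sketch that reads only that header. The function names are hypothetical, and it does not decode the records themselves.

```python
from pathlib import Path
from typing import Dict, Optional

SEQUENCE_FILE_MAGIC = b"SEQ"  # first three bytes of every SequenceFile


def sequence_file_version(path: str) -> Optional[int]:
    """Return the SequenceFile format version byte, or None if not a SequenceFile."""
    with open(path, "rb") as f:
        header = f.read(4)
    if len(header) == 4 and header[:3] == SEQUENCE_FILE_MAGIC:
        return header[3]  # indexing a bytes object yields an int
    return None


def inspect_part_files(output_dir: str) -> Dict[str, Optional[int]]:
    """Map each part-* file in a job output directory to its SequenceFile version."""
    return {
        p.name: sequence_file_version(str(p))
        for p in sorted(Path(output_dir).glob("part-*"))
        if p.is_file()
    }
```

Marker files such as `_SUCCESS` are skipped because only `part-*` names are matched.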

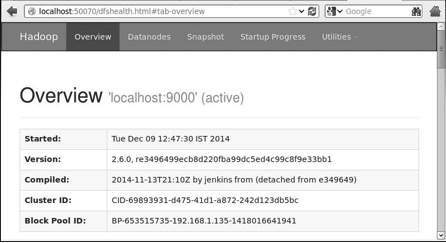

It is not recommended to use localhost as the URL for the Hadoop file system. This post shows how to install Hadoop using the Apache tarballs. Because Hadoop manages its nodes over SSH, we need to configure SSH access to localhost for the hduser user we created. Hadoop comes with quite a few services, each listening on its own ports.
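Because several default ports moved between Hadoop 2 and Hadoop 3, a quick way to see which web UIs are up is to try a TCP connection to each one. Below is a sketch. The port table lists the commonly documented defaults for the NameNode (50070 to 9870), DataNode (50075 to 9864), SecondaryNameNode (50090 to 9868) and ResourceManager (8088 in both). Verify these against your own `*-site.xml` files, since any of them can be overridden. The function names are hypothetical.

```python
import socket
from typing import Dict

# Default web UI ports as (Hadoop 2.x, Hadoop 3.x); overridable in *-site.xml.
DEFAULT_WEB_UI_PORTS = {
    "NameNode": (50070, 9870),
    "DataNode": (50075, 9864),
    "SecondaryNameNode": (50090, 9868),
    "ResourceManager": (8088, 8088),
}


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


def listening_web_uis(host: str = "127.0.0.1", hadoop_major: int = 3) -> Dict[str, bool]:
    """Report, per service, whether its default web UI port is accepting connections."""
    index = 0 if hadoop_major == 2 else 1
    return {service: is_listening(host, ports[index]) for service, ports in DEFAULT_WEB_UI_PORTS.items()}
```

An open port only shows that something is listening there. It does not prove that the process is the Hadoop daemon you expect.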

This tutorial assumes that you are using an SSH key from a local machine. To perform this recipe, you should already have a running Hadoop cluster. Hadoop is a software framework from the Apache Software Foundation that is used to …
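Setting up passwordless SSH to localhost usually takes three steps. First, generate a key with an empty passphrase (`ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa`). Next, append the public key to `~/.ssh/authorized_keys`. Finally, restrict the file's permissions. Below is a sketch of the last two steps, done idempotently so that re-running it does not duplicate the key. It should run as the user who will run Hadoop (hduser in this post). The function names are hypothetical, and an RSA key named `id_rsa` is assumed.

```python
import os
from pathlib import Path


def keygen_command(ssh_dir: str) -> list:
    """Command that creates an RSA key pair with an empty passphrase in ssh_dir."""
    return ["ssh-keygen", "-t", "rsa", "-P", "", "-f", os.path.join(ssh_dir, "id_rsa")]


def authorize_local_key(ssh_dir: str, pub_name: str = "id_rsa.pub") -> bool:
    """Append ssh_dir/pub_name to ssh_dir/authorized_keys unless it is already there.

    Returns True if the key was added, False if it was already authorized.
    """
    ssh_path = Path(ssh_dir)
    pub_key = (ssh_path / pub_name).read_text().strip()
    auth_file = ssh_path / "authorized_keys"
    text = auth_file.read_text() if auth_file.exists() else ""
    if pub_key in (line.strip() for line in text.splitlines()):
        added = False
    else:
        # Make sure the new key starts on its own line.
        if text and not text.endswith("\n"):
            text += "\n"
        auth_file.write_text(text + pub_key + "\n")
        added = True
    # sshd may ignore keys whose directory or file is writable by others.
    os.chmod(ssh_path, 0o700)
    os.chmod(auth_file, 0o600)
    return added
```

A typical run would call `subprocess.run(keygen_command(os.path.expanduser("~/.ssh")), check=True)` once, then `authorize_local_key(os.path.expanduser("~/.ssh"))`.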

Pseudo-distributed: the Hadoop daemons run on a local machine, simulating a cluster on a small scale. Fully distributed: the daemons run across a cluster of multiple machines. Hadoop Distributed File System (HDFS): a distributed file system similar to the one …

The next step is to configure Hue to point to your Hadoop cluster. Before installing Hadoop in the Linux environment, we need to set up Linux. This article explains how to install Hadoop on Ubuntu 18.
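For pseudo-distributed mode, the Apache single-node guide sets two properties. It sets `fs.defaultFS` to `hdfs://localhost:9000` in `etc/hadoop/core-site.xml`, and `dfs.replication` to `1` in `etc/hadoop/hdfs-site.xml`, since one machine cannot hold more than one replica. Below is a sketch that renders those files. The host is a parameter, so you can pass your machine's hostname if you follow the earlier advice against using localhost. The helper names are hypothetical, and the sketch needs Python 3.9+ for `ET.indent`.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def hadoop_site_xml(properties: Dict[str, str]) -> str:
    """Render a Hadoop *-site.xml document containing the given properties."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print; available since Python 3.9
    return '<?xml version="1.0"?>\n' + ET.tostring(root, encoding="unicode") + "\n"


def core_site(host: str = "localhost", port: int = 9000) -> Dict[str, str]:
    """Properties for core-site.xml: where clients find the HDFS NameNode."""
    return {"fs.defaultFS": f"hdfs://{host}:{port}"}


# hdfs-site.xml: a single DataNode can only hold one copy of each block.
HDFS_SITE = {"dfs.replication": "1"}
```

Write the results with, for example, `Path("etc/hadoop/core-site.xml").write_text(hadoop_site_xml(core_site()))`. Note that this overwrites any properties already in the file.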

Hadoop can also be installed on Windows using its binaries. You can install Hadoop on your local machine using its …
