I am assuming you have a 3-layer neural network in which W1, b1 are associated with the linear transformation from the input layer to the hidden layer, and W2, b2 with the transformation from the hidden layer to the output layer. The question, familiar from CS231n, is how to calculate the gradient of the softmax loss function. In Python, the softmax function itself takes only a few lines of numpy. The homework implementation combines softmax with the cross-entropy loss as a matter of choice; the two can also be differentiated as separate steps.
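Since the softmax code itself is not shown here, the following is a minimal sketch of a numerically stable numpy softmax, assuming scores are stored one sample per row. The function name `softmax` and the example scores are illustrative choices, not taken from the original homework.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax for a 1-D vector or a 2-D batch (one sample per row)."""
    z = np.asarray(z, dtype=float)
    # Subtracting the per-row maximum cancels out in the ratio below,
    # but keeps np.exp from overflowing for large scores.
    shifted = z - np.max(z, axis=-1, keepdims=True)
    exp_z = np.exp(shifted)
    return exp_z / np.sum(exp_z, axis=-1, keepdims=True)

scores = np.array([[1.0, 2.0, 3.0],
                   [1000.0, 1000.0, 1000.0]])   # the second row would overflow without the shift
probs = softmax(scores)
print(probs)
print(probs.sum(axis=1))   # each row sums to 1
```

The max-subtraction trick is the usual design choice here: mathematically it changes nothing, but a naive `np.exp(1000.0)` returns `inf`.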

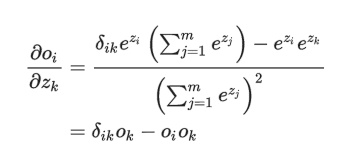

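To back-propagate through the softmax, we need the partial derivative of each softmax output with respect to each input. Writing the softmax as $y_i = e^{a_i} / \sum_k e^{a_k}$, a better way to write the result is in matrix form:

$$\frac{\partial y}{\partial a} = \operatorname{Diag}(y) - y y^{T}$$

Unlike an element-wise activation, the softmax activation function looks at all the Z values from all the output units at once, so every output depends on every input. You can think of a neural network as a function that maps arbitrary inputs to outputs; the softmax function at the end simply divides the exponential of each input element by the sum of the exponentials of all input elements.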
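The matrix form $\operatorname{Diag}(y) - y y^{T}$ can be checked numerically. This sketch builds the Jacobian for a single input vector and compares it against a central-difference approximation; the helper names `softmax_jacobian` and `numerical_jacobian` are hypothetical, introduced only for this check.

```python
import numpy as np

def softmax(a):
    e = np.exp(a - np.max(a))
    return e / e.sum()

def softmax_jacobian(a):
    """Jacobian dy/da of y = softmax(a) for one 1-D input vector a."""
    y = softmax(a)
    # J[i, j] = dy_i / da_j = y_i * (delta_ij - y_j), i.e. Diag(y) - y y^T
    return np.diag(y) - np.outer(y, y)

def numerical_jacobian(f, a, eps=1e-6):
    """Central-difference approximation, used only to verify the formula."""
    n = a.size
    J = np.zeros((n, n))
    for j in range(n):
        step = np.zeros(n)
        step[j] = eps
        J[:, j] = (f(a + step) - f(a - step)) / (2 * eps)
    return J

a = np.array([0.5, -1.2, 2.0, 0.1])
J = softmax_jacobian(a)
print(np.allclose(J, numerical_jacobian(softmax, a), atol=1e-8))
```

Note that the Jacobian is symmetric and its rows sum to zero, which reflects the fact that the outputs always sum to 1.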

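For the output weights W2, the derivative of the output-layer scores with respect to the weights is simply the outputs coming from the hidden layer, as shown below. For the softmax itself, write $D_j S_i$ for the partial derivative of the i-th softmax output $S_i$ with respect to the j-th input $a_j$: $D_j S_i = S_i(\delta_{ij} - S_j)$, which is the (i, j) entry of $\operatorname{Diag}(S) - S S^{T}$. I tried to implement the softmax layer myself in numpy: the softmax function, its gradient, and its combination with other layers.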
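One way to implement the layer's backward pass without materializing a K × K Jacobian per sample is the vector-Jacobian product $J^{T} g = S \odot (g - \langle g, S \rangle)$, which follows from $D_j S_i = S_i(\delta_{ij} - S_j)$. The class `SoftmaxLayer` below is a hypothetical sketch, assuming a batch with one sample per row; it is checked against the explicit Jacobian.

```python
import numpy as np

class SoftmaxLayer:
    """Hypothetical minimal softmax layer with forward and backward passes."""

    def forward(self, z):
        shifted = z - np.max(z, axis=1, keepdims=True)
        exp_z = np.exp(shifted)
        self.out = exp_z / np.sum(exp_z, axis=1, keepdims=True)
        return self.out

    def backward(self, grad_out):
        # Per row: dL/dz = J^T g with J = Diag(S) - S S^T (symmetric),
        # which simplifies to S * (g - <g, S>).
        s = self.out
        dot = np.sum(grad_out * s, axis=1, keepdims=True)
        return s * (grad_out - dot)

rng = np.random.default_rng(0)
z = rng.normal(size=(3, 4))      # 3 samples, 4 classes (arbitrary sizes)
g = rng.normal(size=(3, 4))      # upstream gradient dL/dS
layer = SoftmaxLayer()
s = layer.forward(z)
fast = layer.backward(g)

# Reference: build the full Jacobian for each row and multiply explicitly.
slow = np.stack([(np.diag(si) - np.outer(si, si)) @ gi for si, gi in zip(s, g)])
print(np.allclose(fast, slow))
```

The shortcut costs O(K) per sample instead of O(K²), which matters when the number of classes is large.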

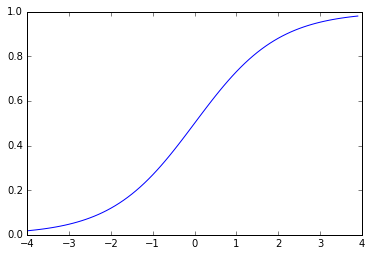

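Also, the outputs of the softmax always sum to 1, so they can be read as a probability distribution. To back-propagate, we need to know the derivative of the loss function. If the loss function were MSE with target $t$, its gradient with respect to the softmax outputs, $y - t$, would still have to be multiplied through the full softmax Jacobian. In mathematics, the softmax function, also known as softargmax or the normalized exponential function, maps a vector of real-valued scores to a probability distribution. For classification, it is the standard output activation.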
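To illustrate the MSE case, here is a sketch that pushes $\partial L / \partial y = y - t$ through the softmax Jacobian and checks the result by finite differences. It assumes the loss is scaled as $L = \tfrac{1}{2}\lVert y - t \rVert^2$ (the factor 1/2 is a convenience choice) and uses an arbitrary example input and one-hot target.

```python
import numpy as np

def softmax(a):
    e = np.exp(a - np.max(a))
    return e / e.sum()

def mse_loss(a, t):
    y = softmax(a)
    return 0.5 * np.sum((y - t) ** 2)

def mse_grad(a, t):
    """dL/da for L = 0.5 * ||softmax(a) - t||^2, via the chain rule."""
    y = softmax(a)
    J = np.diag(y) - np.outer(y, y)   # dy/da, symmetric
    return J @ (y - t)                # dL/da = J^T (y - t), and J^T = J

a = np.array([2.0, 1.0, -0.5])
t = np.array([0.0, 1.0, 0.0])         # one-hot target

# Finite-difference check of the analytic gradient.
eps = 1e-6
numeric = np.zeros_like(a)
for j in range(a.size):
    step = np.zeros_like(a)
    step[j] = eps
    numeric[j] = (mse_loss(a + step, t) - mse_loss(a - step, t)) / (2 * eps)

print(mse_grad(a, t), softmax(a) - t)
print(np.allclose(mse_grad(a, t), numeric, atol=1e-8))
```

The gradient is not simply $y - t$; the extra Jacobian factor is exactly what the cross-entropy loss cancels out.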

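It helps to understand the fundamental differences between the softmax function and the sigmoid function. The sigmoid is monotonically increasing, so its first derivative, $\sigma'(x) = \sigma(x)(1 - \sigma(x))$, is never negative; the softmax, by contrast, has a full Jacobian because each output depends on all inputs. Back-propagation needs the calculus derivative of the output activation function, which is almost always softmax for a neural network classifier. Softmax regression can be seen as an extension of logistic regression to more than two classes; with two classes, the softmax reduces to the sigmoid of the difference of the two scores.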
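Both claims can be checked in a few lines: the sigmoid derivative stays positive, and a two-class softmax equals $\sigma(z_1 - z_2)$, since $e^{z_1}/(e^{z_1} + e^{z_2}) = 1/(1 + e^{-(z_1 - z_2)})$. The sample points below are arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)   # peaks at 0.25 when x = 0

def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()

xs = np.linspace(-10, 10, 201)
print(np.all(sigmoid_grad(xs) > 0), sigmoid_grad(0.0))

# Two-class softmax versus sigmoid of the score difference.
z = np.array([1.3, -0.4])
print(np.isclose(softmax(z)[0], sigmoid(z[0] - z[1])))
```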

To find our parameters, we use gradient descent. So we implement two functions, one of them calculating the derivative of the softmax loss function. I think my code for the derivative of softmax is correct.

Figure 1: Visualization of derivatives of squared error loss with logistic …

Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. Exercise: implement a softmax function using numpy.
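Combining softmax with cross-entropy gives the well-known gradient $p - \text{onehot}(y)$ with respect to the scores. The sketch below assumes the output layer computes scores as `h @ W2 + b2` from hidden outputs `h`, so the weight gradient is `h.T @ dscores`; this is where "the derivative is simply the outputs coming from the hidden layer" enters. The function name, batch sizes, random data, and learning rate are all illustrative assumptions.

```python
import numpy as np

def softmax(z):
    shifted = z - np.max(z, axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / np.sum(e, axis=1, keepdims=True)

def cross_entropy_and_grads(h, W2, b2, labels):
    """Mean cross-entropy of softmax(h @ W2 + b2) and its gradients.

    h: (N, H) hidden-layer outputs, W2: (H, K), b2: (K,), labels: (N,) ints.
    """
    n = h.shape[0]
    probs = softmax(h @ W2 + b2)
    loss = -np.mean(np.log(probs[np.arange(n), labels]))
    # Combined softmax + cross-entropy gradient w.r.t. the scores: p - onehot.
    dscores = probs.copy()
    dscores[np.arange(n), labels] -= 1.0
    dscores /= n
    # The scores depend on W2 through the hidden outputs h, hence h^T @ dscores.
    dW2 = h.T @ dscores
    db2 = dscores.sum(axis=0)
    return loss, dW2, db2

rng = np.random.default_rng(1)
h = rng.normal(size=(5, 4))            # hypothetical batch: 5 samples, 4 hidden units
W2 = 0.1 * rng.normal(size=(4, 3))     # 3 classes
b2 = np.zeros(3)
labels = np.array([0, 2, 1, 2, 0])

loss, dW2, db2 = cross_entropy_and_grads(h, W2, b2, labels)

# One plain gradient-descent step with an arbitrary small learning rate.
lr = 0.1
W2_new, b2_new = W2 - lr * dW2, b2 - lr * db2
loss_new, _, _ = cross_entropy_and_grads(h, W2_new, b2_new, labels)
print(loss, loss_new)
```

Fusing the two steps avoids building the softmax Jacobian at all, which is why implementations such as the CS231n homework often combine them.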

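Some activation libraries wrap these functions as classes, for example Softmax(activation_dict) (Softmax Activation Function) and SoftPlus(activation_dict). Their methods take one parameter, input_signal (numpy.array), the input numpy array; a derivative method, such as the ELU one, returns the output of that derivative applied to the input.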

Each image is a 2-D grid of pixels that has been flattened into a 1-D numpy array whose length is the total number of pixels. We are now ready to implement the softmax function.
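A minimal sketch of that pipeline: flatten a batch of images with `reshape`, apply a linear layer, and turn the scores into class probabilities. The image size (8 × 8), number of classes (10), random pixels, and random weights are all assumptions chosen for illustration, not values from the original data set.

```python
import numpy as np

def softmax(z):
    shifted = z - np.max(z, axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / np.sum(e, axis=1, keepdims=True)

rng = np.random.default_rng(42)
# Hypothetical data: 6 grayscale images of 8 x 8 pixels.
images = rng.random((6, 8, 8))
X = images.reshape(len(images), -1)   # flatten each image to a 1-D vector of 64 values

num_classes = 10                      # assumed number of classes
W = 0.01 * rng.normal(size=(X.shape[1], num_classes))
b = np.zeros(num_classes)

probs = softmax(X @ W + b)            # one probability distribution per image
predictions = probs.argmax(axis=1)
print(X.shape, probs.shape, predictions)
```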
