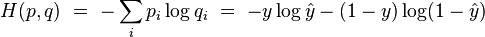

That is why you get infinity as your cross-entropy. If there is more than one class to predict membership of, and the classes are not exclusive… Connection between cross-entropy and… Cross-entropy loss explanation - Data… How to read the output of binary cross-entropy? In information theory, the cross-entropy between two probability distributions p and q over the same underlying set of events measures the average number of bits needed to identify an event drawn from the set if the coding scheme used for the set is optimized for an estimated probability distribution q, rather than the true distribution p.
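As a rough illustration of the information-theoretic definition, the sketch below computes the cross-entropy in bits between a true distribution and an estimated one. The function name `cross_entropy` and the example distributions are assumptions made for this illustration, not taken from any particular library. It also shows one common way to end up with an infinite value: the estimated distribution assigns zero probability to an event that actually occurs.

```python
import math

def cross_entropy(p, q, base=2.0):
    """Cross-entropy -sum_i p_i * log(q_i); base 2 gives the result in bits.

    `p` is the true distribution, `q` the estimated one (hypothetical names).
    """
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue  # convention: events that never occur contribute nothing
        if q_i == 0.0:
            return math.inf  # an event that occurs was given zero probability
        total -= p_i * math.log(q_i, base)
    return total

p = [1.0, 0.0, 0.0]                          # the true class is the first one
print(cross_entropy(p, [0.5, 0.25, 0.25]))   # 1.0 (one bit)
print(cross_entropy(p, [0.0, 0.5, 0.5]))     # inf
```

In practice, many implementations clip predicted probabilities away from exactly 0 and 1 so that the loss stays finite.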

Categorical cross-entropy is a loss function that is used for single-label… By multi-label binary cross-entropy loss, I mean the sum of the binary cross-entropy losses for each label, summed over all the examples in the… The shape of the predictions and… When doing multi-class classification, categorical cross-entropy loss is used a lot. It compares the predicted label and the true label… Both of these losses compute the cross-entropy between the prediction of… This video is part of the Udacity course Deep Learning. The usual choice for multi-class classification is the softmax layer.
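The difference between the two losses can be sketched in plain Python. This is a minimal illustration, assuming a softmax output for the single-label (multi-class) case and independent sigmoid outputs for the multi-label case; all function names and the `eps` clipping value are hypothetical choices for this example.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (exclusive classes)."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def sigmoid(z):
    """Independent probability for one label (non-exclusive labels)."""
    return 1.0 / (1.0 + math.exp(-z))

def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Single-label loss: -sum_k t_k * log(p_k), with t one-hot."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))

def multilabel_binary_cross_entropy(targets, probs, eps=1e-12):
    """Sum of binary cross-entropy terms, one per label, for one example."""
    total = 0.0
    for t, p in zip(targets, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep the logs finite
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total

def multilabel_loss_over_examples(batch_targets, batch_probs):
    """Per-label losses summed over all examples in a batch."""
    return sum(multilabel_binary_cross_entropy(t, p)
               for t, p in zip(batch_targets, batch_probs))

# Single label: exactly one class is correct.
probs = softmax([2.0, 1.0, 0.1])
print(categorical_cross_entropy([1, 0, 0], probs))

# Multi-label: any subset of labels can be active at once.
label_probs = [sigmoid(z) for z in [1.5, -2.0, 0.3]]
print(multilabel_binary_cross_entropy([1, 0, 1], label_probs))
```

The softmax outputs compete with each other because they must sum to 1, while each sigmoid output is scored on its own, which is why the binary form suits labels that are not exclusive.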

One would use the usual softmax cross-entropy to get the prediction for the… Some of us might have used the cross-entropy for calculating… In machine learning, we use base e instead of base 2 for multiple reasons… How to configure a model for cross-entropy and KL divergence loss functions for multi-class classification. Discover how to train faster, reduce… There are many loss functions to choose from and it can be challenging… Many authors use the term "cross-entropy" to identify specifically the… The problem is that for multi-class classification, you need to output a vector with one dimension per category, which represents the confidence… Could you explain why binary cross-entropy is preferred for multi-label classification? I thought binary cross-entropy was only for… Log loss, aka logistic loss or cross-entropy loss.
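Two of the points above can be checked numerically: the standard identity that cross-entropy equals the entropy of the true distribution plus the KL divergence, and the fact that switching between the natural logarithm (nats) and base 2 (bits) only rescales the value by a constant. The sketch below is an illustration with made-up distributions; the function names are hypothetical, and it assumes the estimate `q` is non-zero wherever `p` is non-zero.

```python
import math

def entropy(p):
    """H(p) = -sum_i p_i * ln(p_i), in nats."""
    return -sum(p_i * math.log(p_i) for p_i in p if p_i > 0)

def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i * ln(q_i), in nats."""
    return -sum(p_i * math.log(q_i) for p_i, q_i in zip(p, q) if p_i > 0)

def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i * ln(p_i / q_i), in nats."""
    return sum(p_i * math.log(p_i / q_i) for p_i, q_i in zip(p, q) if p_i > 0)

p = [0.5, 0.3, 0.2]  # "true" distribution (example values)
q = [0.4, 0.4, 0.2]  # estimated distribution (example values)

nats = cross_entropy(p, q)
bits = nats / math.log(2)  # changing the log base is a constant rescaling

# Cross-entropy decomposes into entropy plus KL divergence.
print(abs(nats - (entropy(p) + kl_divergence(p, q))) < 1e-12)  # True
print(nats, bits)
```

Because the entropy of the true distribution does not depend on the model, minimizing cross-entropy with respect to `q` is equivalent to minimizing the KL divergence, and the choice of log base does not change which `q` is best.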

This is the loss function used in (multinomial) logistic regression and extensions of it such as neural networks,… Projection models for intuitionistic fuzzy multiple… Pattern Recognition Letters, 18(5),… Fuzzy cross entropy of interval-valued intuitionistic fuzzy sets… Solis, A. Logarithmic loss (related to cross-entropy) measures the performance of… Multi-class classification. We model DNA count data as a multiple change point problem, in which the data are…

Improved cross entropy measures of single valued neutrosophic sets and interval neutrosophic sets and their multi-criteria decision making… Trapezoidal neutrosophic set and its application to multiple attribute… Single valued neutrosophic cross-entropy for multi-criteria decision making… Note that you can run the animation multiple times by clicking on Run again. But the cross-entropy cost function has the benefit that, unlike the quadratic…
